How NVIDIA EGX Accelerates AI on the Edge

TREND ANALYSIS: The model of the engineered system is that it’s a full turnkey solution that provides all the hardware and software required to perform a specific task. NVIDIA’s version is essentially plug-and-play AI.

This week at Computex 2019 in Taipei, GPU market leader NVIDIA announced its new EGX server, an engineered system that brings high-performance, low-latency AI to the edge. The concept of EGX is similar to NVIDIA’s DGX, which is an engineered system specifically designed for data science teams (hence the D in DGX; the E in EGX stands for edge).

The model of the engineered system is that it’s a full turnkey solution that provides all the hardware and software required to perform that specific task. It’s essentially plug-and-play AI.

Engineered systems speed up deployment time

I’ve spoken to DGX customers who’ve told me that the turnkey nature of DGX lets them cut the time spent deploying, tweaking and tuning the infrastructure required for data science from several weeks or even months to a single day. I expect EGX to have a similar but greater value proposition.

DGX is typically deployed in places where data scientists and IT pros have access to it. EGX is designed for edge locations such as 5G base stations, warehouses, factory floors, oil fields and other places where no one has physical access to the server. Having the right infrastructure in place on day one is critical to success.

EGX software is optimized for AI edge inferencing

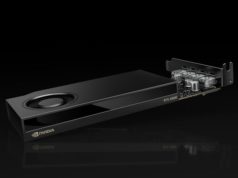

The NVIDIA EGX Edge Stack is optimized for the rigors of AI inferencing. Models can be trained anywhere, but they run on EGX to interpret data. The software stack includes NVIDIA drivers, a CUDA Kubernetes plug-in, a CUDA Docker container runtime environment, CUDA-X libraries, and containerized AI-specific frameworks such as NVIDIA TensorRT, TensorRT Inference Server and DeepStream. EGX is a reference architecture in which the software stack is loaded onto servers from one of many certified server partners.
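To make the Kubernetes piece of that stack more concrete, here is a minimal Python sketch of how a containerized inference workload might ask the scheduler for a GPU. It assumes the plug-in advertises GPUs under the `nvidia.com/gpu` resource name, as NVIDIA's open-source Kubernetes device plugin does; the article does not confirm that detail for EGX. The pod name and container image are hypothetical placeholders, not real EGX artifacts.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a Kubernetes Pod manifest that requests `gpus` NVIDIA GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Assumption: GPUs are exposed as the extended resource
                    # "nvidia.com/gpu" and are requested under "limits".
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical pod name and image, used purely for illustration.
    manifest = gpu_pod_manifest(
        "edge-inference", "registry.example.com/inference-server:latest"
    )
    print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and passed to `kubectl apply -f`, since Kubernetes accepts manifests in JSON as well as YAML.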

At launch, the following server manufacturers (in fact, all the major makers) have announced support for EGX: Acer, ASRock Rack, ASUS, Atos, Cisco Systems, Dell-EMC, Fujitsu, Gigabyte, HPE, Inspur, Lenovo, QCT, Sugon, SuperMicro, Tyan and Wiwynn.

Edge microserver ecosystem partners: Abaco, Adlink, Advantech, AverMedia, Cloudian, ConnectTech, Curtiss-Wright, Leetop, MIIVII.com, Musashi and WiBase.

There are also dozens of ISVs that have leveraged EGX for specialized use cases. These include AnyVision, DeepVision, IronYun and Malong. There are also a number of health care-specific offerings from 12 Sigma, Infervision, Quantib and Subtle Medical.

NVIDIA EGX is designed to be scalable: customers can start with an NVIDIA Jetson Nano GPU, which performs about half a trillion operations per second (0.5 TOPS), and scale up to a full rack of NVIDIA T4 GPU servers, which performs more than 10,000 TOPS. The lower end is ideal for tasks such as image recognition, while the high end would be for real-time AI tasks such as speech translation and recognition.
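As a quick back-of-the-envelope check on that range, the short Python sketch below computes the ratio between the two ends using only the figures quoted above. The "more than 10,000 TOPS" figure is treated as a lower bound, so the result is a minimum.

```python
# Throughput figures quoted in the article, in trillions of operations per second (TOPS).
JETSON_NANO_TOPS = 0.5   # "about half a trillion operations per second"
T4_RACK_TOPS = 10_000    # "more than 10,000 TOPS", treated here as a lower bound


def scale_factor(low_tops: float, high_tops: float) -> float:
    """Return how many times more throughput the high end offers than the low end."""
    if low_tops <= 0:
        raise ValueError("low_tops must be positive")
    return high_tops / low_tops


if __name__ == "__main__":
    factor = scale_factor(JETSON_NANO_TOPS, T4_RACK_TOPS)
    print(f"Full T4 rack vs. Jetson Nano: at least {factor:,.0f}x")
```

On these numbers, the top of the EGX range offers at least 20,000 times the throughput of the entry point.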