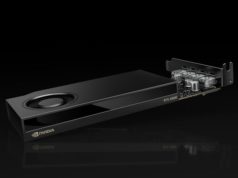

As part of today’s burst of ISC 2021 trade show announcements, NVIDIA this morning is announcing that they’re bringing the 80GB version of their A100 accelerator to the PCIe form factor. First announced in NVIDIA’s custom SXM form factor last fall, the 80GB version of the A100 was introduced not only to expand the total memory capacity of an A100 accelerator, doubling it from 40GB to 80GB, but also to deliver a rare mid-generation spec bump, cranking up the memory clockspeeds by a further 33%. Now, after a bit over 6 months, NVIDIA is releasing a PCIe version of the accelerator for customers who need discrete add-in cards.

The new 80GB version of the PCIe A100 joins the existing 40GB version, and NVIDIA will continue selling both versions of the card. On the whole, this is a fairly straightforward transfer of the 80GB SXM A100 over to PCIe, with NVIDIA dialing down the TDP of the card and the number of exposed NVLinks to match the capabilities of the form factor. The launch of the 80GB PCIe card is designed to give NVIDIA’s traditional PCIe form factor customers a second, higher-performing accelerator option, particularly for those users who need more than 40GB of GPU memory.

| NVIDIA Accelerator Specification Comparison | 80GB A100 (PCIe) | 80GB A100 (SXM4) | 40GB A100 (PCIe) | 40GB A100 (SXM4) |
|---|---|---|---|---|
| FP32 CUDA Cores | 6912 | 6912 | 6912 | 6912 |
| Boost Clock | 1.41GHz | 1.41GHz | 1.41GHz | 1.41GHz |
| Memory Clock | 3.0Gbps HBM2 | 3.2Gbps HBM2 | 2.43Gbps HBM2 | 2.43Gbps HBM2 |
| Memory Bus Width | 5120-bit | 5120-bit | 5120-bit | 5120-bit |
| Memory Bandwidth | 1.9TB/sec (1935GB/sec) | 2.0TB/sec (2039GB/sec) | 1.6TB/sec (1555GB/sec) | 1.6TB/sec (1555GB/sec) |
| VRAM | 80GB | 80GB | 40GB | 40GB |
| Single Precision | 19.5 TFLOPs | 19.5 TFLOPs | 19.5 TFLOPs | 19.5 TFLOPs |
| Double Precision | 9.7 TFLOPs (1/2 FP32 rate) | 9.7 TFLOPs (1/2 FP32 rate) | 9.7 TFLOPs (1/2 FP32 rate) | 9.7 TFLOPs (1/2 FP32 rate) |
| INT8 Tensor | 624 TOPs | 624 TOPs | 624 TOPs | 624 TOPs |
| FP16 Tensor | 312 TFLOPs | 312 TFLOPs | 312 TFLOPs | 312 TFLOPs |
| TF32 Tensor | 156 TFLOPs | 156 TFLOPs | 156 TFLOPs | 156 TFLOPs |
| Relative Performance (SXM Version) | 90%? | 100% | 90% | 100% |
| Interconnect | NVLink 3, 12 Links (600GB/sec) | NVLink 3, 12 Links (600GB/sec) | NVLink 3, 12 Links (600GB/sec) | NVLink 3, 12 Links (600GB/sec) |
| GPU | GA100 (826mm²) | GA100 (826mm²) | GA100 (826mm²) | GA100 (826mm²) |
| Transistor Count | 54.2B | 54.2B | 54.2B | 54.2B |
| TDP | 300W | 400W | 250W | 400W |
| Manufacturing Process | TSMC 7N | TSMC 7N | TSMC 7N | TSMC 7N |
| Interface | PCIe 4.0 | SXM4 | PCIe 4.0 | SXM4 |
| Architecture | Ampere | Ampere | Ampere | Ampere |
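The memory bandwidth figures in the table follow from the memory clock and bus width rows. As a rough sketch of that arithmetic (the function and dictionary names here are illustrative, not anything from NVIDIA), peak bandwidth is the per-pin data rate multiplied by the bus width, converted from bits to bytes:

```python
def hbm_bandwidth_gb_per_s(pin_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/sec: per-pin rate (Gbps) x bus width (bits) / 8."""
    return pin_rate_gbps * bus_width_bits / 8


# Bus width and per-pin memory clocks as listed in the table above.
BUS_WIDTH_BITS = 5120
MEMORY_CLOCKS_GBPS = {
    "80GB A100 (PCIe)": 3.0,
    "80GB A100 (SXM4)": 3.2,
    "40GB A100 (PCIe/SXM4)": 2.43,
}

for card, rate in MEMORY_CLOCKS_GBPS.items():
    bandwidth = hbm_bandwidth_gb_per_s(rate, BUS_WIDTH_BITS)
    print(f"{card}: {bandwidth:.1f} GB/sec")
```

With the listed clocks this gives 1920.0, 2048.0, and 1555.2 GB/sec respectively. The 40GB figure matches the table's 1555GB/sec, while the 80GB cards are listed at 1935GB/sec and 2039GB/sec, which suggests the memory clocks shown for those cards are rounded.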

At a high level, the 80GB upgrade to the PCIe A100 is virtually identical to what NVIDIA did for the SXM version. The 80GB card’s GPU is being clocked identically to the 40GB card’s, and the resulting performance throughput claims are unchanged.

Instead, this launch is all about the on-board memory, with NVIDIA equipping the card with newer HBM2E memory. HBM2E is the informal name given to the most recent update to the HBM2 memory standard, which back in February of this year defined a new maximum memory speed of 3.2Gbps/pin. Coupled with that frequency improvement, manufacturing improvements have also allowed memory manufacturers to double the capacity of the memory, going from 1GB/die to 2GB/die. The net result is that HBM2E offers both greater capacities and greater bandwidths, two things which NVIDIA is taking advantage of here.
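To make the capacity side of that concrete, here is a minimal sketch of how the per-die doubling carries through to total card memory. It assumes five active 8-Hi stacks, which is the configuration given for the 80GB card, and further assumes the 40GB card uses the same stack layout with 1GB dies; the helper name is hypothetical.

```python
def hbm_capacity_gb(active_stacks: int, dies_per_stack: int, gb_per_die: int) -> int:
    """Total on-board memory in GB: stacks x dies per stack x capacity per die."""
    return active_stacks * dies_per_stack * gb_per_die


ACTIVE_STACKS = 5   # active HBM stacks on the A100
DIES_PER_STACK = 8  # "8-Hi" means eight memory dies per stack

# Assumption: the 40GB card shares this layout and differs only in die capacity.
capacity_hbm2 = hbm_capacity_gb(ACTIVE_STACKS, DIES_PER_STACK, gb_per_die=1)
capacity_hbm2e = hbm_capacity_gb(ACTIVE_STACKS, DIES_PER_STACK, gb_per_die=2)

print(f"1GB dies: {capacity_hbm2}GB total")   # 40GB
print(f"2GB dies: {capacity_hbm2e}GB total")  # 80GB
```

Doubling the die capacity while holding the stack count and height constant is what takes the card from 40GB to 80GB without changing the 5120-bit memory bus.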

With 5 active stacks of 16GB, 8-Hi memory, the updated PCIe A100 gets a total of 80GB of memory. Running at 3.0Gbps/pin, that works out to roughly 1.9TB/sec of memory bandwidth for…