Humans in general are pretty good at parsing sentences: quickly and naturally identifying the individual parts of a sentence and the role each one plays, in order to grasp the overall meaning. But because there’s so much inherent ambiguity in written and spoken language, parsing sentences accurately has been a challenge for artificially intelligent computers.

So when Google announces — as it did yesterday — that its latest innovation for natural language understanding (NLU) can parse sentences with an accuracy of more than 94 percent (trained human linguists typically reach 96 percent to 97 percent accuracy), that’s a big deal in the ongoing quest for human-like computer intelligence.

And if all this techy/sciencey stuff seems a bit overwhelming, Google shows that advanced AI researchers can also have a sense of humor: they have named the English parser they trained “Parsey McParseface.” (The name is an homage to a British polar research ship that participants in a public poll overwhelmingly wanted to call “Boaty McBoatface”; instead, the UK’s Natural Environment Research Council, which ran the poll, will name the vessel “Sir David Attenborough.”)

‘Most Accurate Such Model in the World’

Parsey McParseface is built using SyntaxNet, a neural network framework that Google said it will open source. SyntaxNet is a “key first component” for natural language understanding, and making it open source means other developers will now be able to use the framework to “train” new computer models to better understand human language.

“Because Parsey McParseface is the most accurate such model in the world, we hope that it will be useful to developers and researchers interested in automatic extraction of information, translation, and other core applications of NLU,” senior staff research scientist Slav Petrov wrote yesterday on the Google Research Blog. Applied to well-formed text, Parsey McParseface is “approaching human performance,” he said.

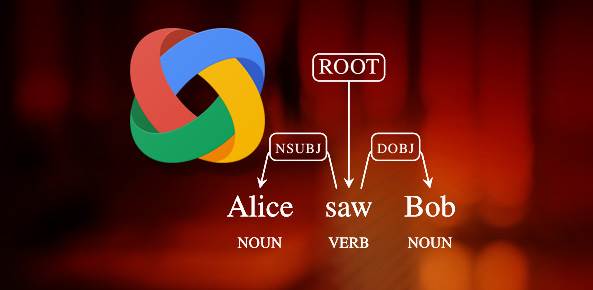

A syntactic parser like SyntaxNet is designed to take any human-language sentence, tag each word with its part of speech (noun, verb, and so on), determine the grammatical relationships between the words (for example, which word is the subject of a verb and which is its object) and, from there, decide on the most likely structure and meaning of the sentence. Petrov said that SyntaxNet uses neural networks to score the possible choices at each step of the parsing process, along with a technique called “beam search” that keeps several competing partial interpretations in play instead of committing to one too early, and then determines the most likely interpretation of the sentence.
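To make the idea of beam search concrete, here is a minimal toy sketch in Python. It is not SyntaxNet’s implementation: SyntaxNet scores parsing decisions with a trained neural network, whereas this example swaps in a tiny hand-written score table and only chooses part-of-speech tags. The function names, tags, and scores (`beam_search`, `toy_score`, `EMISSION`, `TRANSITION`) are hypothetical, chosen so that “saw” is locally more likely to look like a noun.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# A hypothesis is (cumulative score, labels chosen so far).
Hypothesis = Tuple[float, Tuple[str, ...]]


def beam_search(
    words: Sequence[str],
    candidates: Callable[[str], Sequence[str]],
    score: Callable[[Tuple[str, ...], str, str], float],
    beam_width: int = 2,
) -> List[Hypothesis]:
    """Return the top `beam_width` label sequences, best first."""
    beam: List[Hypothesis] = [(0.0, ())]
    for word in words:
        # Extend every surviving hypothesis with every possible label for this word.
        expanded: List[Hypothesis] = [
            (total + score(history, word, label), history + (label,))
            for total, history in beam
            for label in candidates(word)
        ]
        # Keep only the highest-scoring hypotheses; the rest are discarded.
        expanded.sort(key=lambda h: h[0], reverse=True)
        beam = expanded[:beam_width]
    return beam


# Hypothetical toy scores (higher is better): how well a word fits a tag...
EMISSION: Dict[Tuple[str, str], float] = {
    ("Alice", "NOUN"): 20, ("Alice", "VERB"): -50,
    ("saw", "NOUN"): 10, ("saw", "VERB"): 5,
    ("Bob", "NOUN"): 20, ("Bob", "VERB"): -50,
}
# ...and how well a tag follows the previous tag.
TRANSITION: Dict[Tuple[str, str], float] = {
    ("NOUN", "NOUN"): -2, ("NOUN", "VERB"): 0,
    ("VERB", "NOUN"): 10, ("VERB", "VERB"): -20,
}
TAGS = ("NOUN", "VERB")


def toy_score(history: Tuple[str, ...], word: str, label: str) -> float:
    # The first word has no previous tag, so it gets no transition score.
    transition = TRANSITION[(history[-1], label)] if history else 0
    return EMISSION[(word, label)] + transition


sentence = ["Alice", "saw", "Bob"]
greedy = beam_search(sentence, lambda w: TAGS, toy_score, beam_width=1)
beamed = beam_search(sentence, lambda w: TAGS, toy_score, beam_width=2)
print(greedy[0])  # (46.0, ('NOUN', 'NOUN', 'NOUN'))
print(beamed[0])  # (55.0, ('NOUN', 'VERB', 'NOUN'))
```

With a beam width of 1 (plain greedy search), the sketch commits to “saw” as a noun after the second word (28 versus 25) and can never recover. A width of 2 keeps the verb reading alive, and it wins once “Bob” is scored (55 versus 46), which is the kind of early mistake beam search is meant to avoid.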

Work ‘Still Cut Out for Us’

Parsey McParseface can not only “understand” simple sentences like “Alice saw Bob,” but also tease the correct meaning out of more complex and ambiguous constructions, Petrov said.

That’s something humans still do better than any AI. For example, given the sentence, “Alice drove down the street in her car,” most people will understand that Alice was in her car and driving down the street — not that Alice was driving down a street that was located in her car, which is an “absurd, but possible” interpretation, Petrov noted.

With sentences that are not necessarily “well-formed” — content taken randomly from the Web, for example — artificially intelligent parsers typically have much more trouble determining meanings. Parsey McParseface, however, has achieved an accuracy of just over 90 percent on that type of content, Petrov said.

“While the accuracy is not perfect, it’s certainly high enough to be useful in many applications,” he said. “Machine learning (and in particular, neural networks) have made significant progress in resolving these ambiguities. But our work is still cut out for us: we would like to develop methods that can learn world knowledge and enable equal understanding of natural language across all languages and contexts.”

Image Credit: Screenshots via Google Research Blog.
