The Wafer Scale Engine was developed by Cerebras Systems to meet the continuing increase in demand for AI-training hardware. In workloads where latency has a very real impact on training times and on a system’s capability, Cerebras wanted to design a processor that avoided the need for a separate communication lane for its cores to talk to one another, so that the system is limited, essentially, only by transistors’ switching times. Its 400,000 cores communicate seamlessly via interconnects, etched on 46,225 square millimeters of silicon (by comparison, NVIDIA’s largest GPU is 56.7 times smaller at “just” 815 square millimeters).

However, in a world where silicon wafer manufacturing still produces defects that can render whole chips inoperative, how did Cerebras manage to build such a large processor and keep defects from preventing it from delivering on the reported specs and performance? The answer is essentially an old one: redundancy, paired with some extra engineering magic worked out together with the chip’s manufacturer, TSMC. The chip is built on TSMC’s 16 nm node, a more mature process with proven yields that is cheaper than a cutting-edge 7 nm process and has lower areal density. A denser process would make it even more difficult to properly cool those 400,000 cores, as you can imagine.

Cross-reticle connectivity, yield, power delivery, and packaging improvements have all been researched and deployed by Cerebras to solve the scaling problems associated with such large chips. Moreover, the chip is built with redundant features, which should ensure that even if defects arise in various parts of the silicon, the areas designed as “overprovisioning” can cut in and pick up the slack, routing and processing data without skipping a beat. Cerebras says any given component of the chip (cores, SRAM, and so on) has 1% to 1.5% of additional overprovisioned capacity, which turns manufacturing defects into a negligible speed bump instead of a wasted wafer.
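To make the overprovisioning idea concrete, here is a minimal sketch of how spare units can absorb defects. It is an illustration only: the function `remap_row`, the row size, and the "skip the bad core and shift everything over" policy are assumptions made for this example, not a description of Cerebras’ actual repair mechanism.

```python
def remap_row(physical_cores, defective, logical_cores):
    """Map each logical core to a healthy physical core in one row.

    physical_cores: number of cores fabricated in the row (including spares)
    defective:      set of physical indices found to be faulty (hypothetical test result)
    logical_cores:  number of cores the software expects to see
    """
    # Keep only the physical cores that passed testing, in order.
    healthy = [i for i in range(physical_cores) if i not in defective]
    if len(healthy) < logical_cores:
        raise ValueError("more defects than spare cores in this row")
    # Logical core k is served by the k-th healthy physical core.
    return healthy[:logical_cores]


# A toy row: 100 logical cores plus 1 spare, roughly 1% overprovisioning.
mapping = remap_row(physical_cores=101, defective={37}, logical_cores=100)
print(mapping[36], mapping[37])  # logical 36 stays put, logical 37 shifts past the defect
```

With one spare per hundred cores, a single defect in the row costs nothing visible to software: every logical core from the defect onward is simply served by its neighbour.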

The inter-core communication solution is one of the most advanced ever seen: a fine-grained, all-hardware, on-chip, mesh-connected communication network dubbed Swarm that delivers an aggregate bandwidth of 100 petabits per second. This is paired with 18 GB of local, distributed, superfast SRAM as the one and only level of the memory hierarchy, delivering memory bandwidth in the realm of 9 petabytes per second.
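The aggregate figures are easier to picture per core. The back-of-the-envelope calculation below assumes decimal units, takes the SRAM capacity as 18 gigabytes, and assumes memory and bandwidth are spread evenly across all 400,000 cores. The even split is an assumption for illustration, not a published per-core specification.

```python
CORES = 400_000

SRAM_BYTES = 18 * 10**9            # 18 GB of on-chip SRAM
MEM_BW_BYTES_PER_S = 9 * 10**15    # 9 petabytes per second
FABRIC_BW_BITS_PER_S = 100 * 10**15  # 100 petabits per second

# Divide each aggregate figure evenly across the cores.
sram_per_core_kb = SRAM_BYTES / CORES / 10**3
mem_bw_per_core_gb = MEM_BW_BYTES_PER_S / CORES / 10**9
fabric_bw_per_core_gbit = FABRIC_BW_BITS_PER_S / CORES / 10**9

print(f"SRAM per core:             {sram_per_core_kb:.1f} KB")        # 45.0 KB
print(f"Memory bandwidth per core: {mem_bw_per_core_gb:.1f} GB/s")    # 22.5 GB/s
print(f"Fabric bandwidth per core: {fabric_bw_per_core_gbit:.1f} Gb/s")  # 250.0 Gb/s
```

Under these assumptions each core has only tens of kilobytes of memory, but that memory sits right next to the compute logic, which is where the enormous aggregate bandwidth comes from.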

The 400,000 cores are custom-designed for AI workload acceleration. Named SLAC, for Sparse Linear Algebra Cores, they are flexible, programmable, and optimized for the sparse linear algebra that underpins all neural network computation (think of them as FPGA-like, programmable arrays of cores). SLAC’s programmability ensures the cores can run all neural network algorithms in the constantly changing machine learning field. This is a chip that can adapt to different workloads and to different AI-related problem solving and training tasks, which is a requirement for deployments as expensive as the Wafer Scale Engine will surely be.
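Why sparsity matters can be shown with a small sketch. One common way to exploit sparse data is to skip multiplications whose operand is zero. The function `sparse_dot` below is a hypothetical illustration of that general technique in plain Python. The article does not say that SLAC cores work this way internally.

```python
def sparse_dot(activations, weights):
    """Dot product that skips zero activations and counts the work done."""
    total = 0.0
    multiplies = 0
    for a, w in zip(activations, weights):
        if a == 0.0:
            continue  # a zero contributes nothing, so no multiply is needed
        total += a * w
        multiplies += 1
    return total, multiplies


# Activations after a ReLU layer are often mostly zero (made-up values).
acts = [0.0, 1.5, 0.0, 0.0, 2.0, 0.0]
wts = [0.3, 0.2, 0.9, 0.4, 0.5, 0.7]

result, work = sparse_dot(acts, wts)
print(f"result={result:.2f}, multiplies={work} of {len(acts)}")
```

In this toy input only two of the six products are actually computed, yet the result matches the dense dot product. Hardware that avoids the wasted work gets the same answer with less time and energy.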

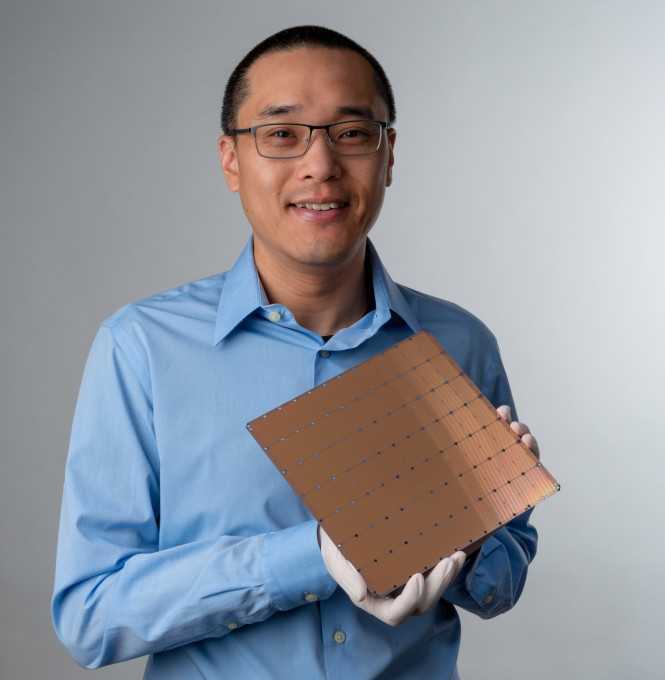

The entire chip and its accompanying deployment apparatus had to be developed in-house. As founder and CEO Andrew Feldman puts it, there were no packaging, printed circuit boards, connectors, cold plates, tools, or software that could be adapted for the manufacturing and deployment of the Wafer Scale Engine. This means that Cerebras Systems and its team of 173 engineers had to develop not only the chip, but almost everything else that’s needed to make sure it actually works.