According to Merriam-Webster, the word “paradox” is defined as a statement that is seemingly contradictory or opposed to common sense. In today’s business world, IT professionals might consider machine data a paradox. On one hand, machine data is pure gold; it holds valuable information that, when correlated and analyzed, can provide insights to help IT organizations optimize applications, find security breaches or proactively prevent problems that have yet to occur.

On the other hand, machine data is one of the biggest sources of pain. The volume of data and the number of data types, formats and sources have become so unwieldy that the data is difficult, if not impossible, to parse. To make sure everyone is on the same page, I’m defining machine data as metrics, events, logs and traces (MELT). If you’re unsure of the difference or what these are, Sysdig’s Apurva Dave does a great job of explaining in this post.

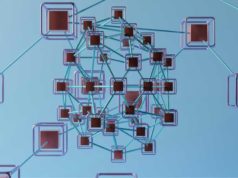

Machine data comes in many shapes and sizes

To understand the problem, consider how machine data is currently handled. Some log data is pulled off servers and stored in multiple index systems for fast search. Security logs get sent to SIEMs for correlation and threat hunting. Metrics go through a different process and get captured in a time series database for analysis. The massive volume of trace data likely gets dumped into big data lakes, theoretically for future processing. I say theoretically because the information in data lakes is often unusable due to its unstructured nature. The net result is numerous data silos, which leads to incomplete analysis.
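
As a rough illustration of how this handling creates silos, the sketch below routes records to separate stores by type. The record shapes, the store names and the `route` function are all hypothetical and do not reflect any particular product.

```python
from collections import defaultdict

# Hypothetical mapping of record type to destination store,
# mirroring the handling described in the paragraph above.
DESTINATIONS = {
    "log": "search_index",
    "security_log": "siem",
    "metric": "time_series_db",
    "trace": "data_lake",
}


def route(records):
    """Group records by destination store; each store is an isolated silo."""
    silos = defaultdict(list)
    for record in records:
        # Unrecognized types fall through to the data lake, unparsed.
        store = DESTINATIONS.get(record["type"], "data_lake")
        silos[store].append(record)
    return dict(silos)


# Four made-up records that all describe the same host.
records = [
    {"type": "log", "host": "web-1", "msg": "GET /checkout 500"},
    {"type": "security_log", "host": "web-1", "msg": "failed login"},
    {"type": "metric", "host": "web-1", "name": "cpu", "value": 0.97},
    {"type": "trace", "host": "web-1", "span": "checkout", "ms": 4200},
]

silos = route(records)
for store, items in silos.items():
    print(store, len(items))
```

All four records describe the same host, yet answering "what happened on web-1?" would mean querying four separate systems.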

In data science, there’s an axiom that states “Good data leads to good insights.” The corollary is true as well: Bad data leads to bad insights, and siloed data leads to siloed insights.

Also, many of the analytic tools are very expensive and don’t work well for unstructured data. I’ve talked to companies that have spent tens of millions on log analytics. These tools can be helpful, but often they aren’t, because the volume of data is so large and contains so much noise that the output isn’t as useful as it could be. The volume of data is certainly on the rise, so this problem isn’t getting solved any time soon with traditional tools.

Analytic and security tools have their own agents that add to the problem

Another issue is that each of the tools used to analyze machine data comes with its own agent that often collects the same data from different endpoints, sometimes in a unique format, adding to the data clutter that IT departments have to sort through. This also adds a lot of management overhead and increases resource utilization but doesn’t really add much value. Hence the paradox: The insights are hidden in the data, but the overhead required to find those “a-ha”s is often more complicated than whatever the original problem was.
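
To make the duplication concrete, here is a minimal sketch assuming two hypothetical agents that report the same event using different field names. The formats, the normalizer functions and `deduplicate` are invented for illustration only.

```python
# Two hypothetical agents reporting the same event in different formats.
agent_a = [{"ts": 1700000000, "host": "db-1", "message": "disk 91% full"}]
agent_b = [{"timestamp": "1700000000", "hostname": "db-1", "msg": "disk 91% full"}]


def normalize_a(event):
    """Map agent A's fields to a common (timestamp, host, message) tuple."""
    return (int(event["ts"]), event["host"], event["message"])


def normalize_b(event):
    """Map agent B's fields to the same common tuple."""
    return (int(event["timestamp"]), event["hostname"], event["msg"])


def deduplicate(*streams):
    """Return unique normalized events in first-seen order."""
    seen = set()
    unique = []
    for normalizer, events in streams:
        for event in events:
            key = normalizer(event)
            if key not in seen:
                seen.add(key)
                unique.append(key)
    return unique


unique_events = deduplicate((normalize_a, agent_a), (normalize_b, agent_b))
print(len(agent_a) + len(agent_b), "collected,", len(unique_events), "unique")
```

Even in this toy case, half of what was collected is redundant, and someone has to write and maintain a normalizer for every agent format.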

A new approach to managing the data pipeline is needed

What’s required is a new approach to managing machine data so the various tools can be used effectively. A good analogy for what’s needed is the network packet broker. The network industry has a similar problem with tool sprawl, because the number of…