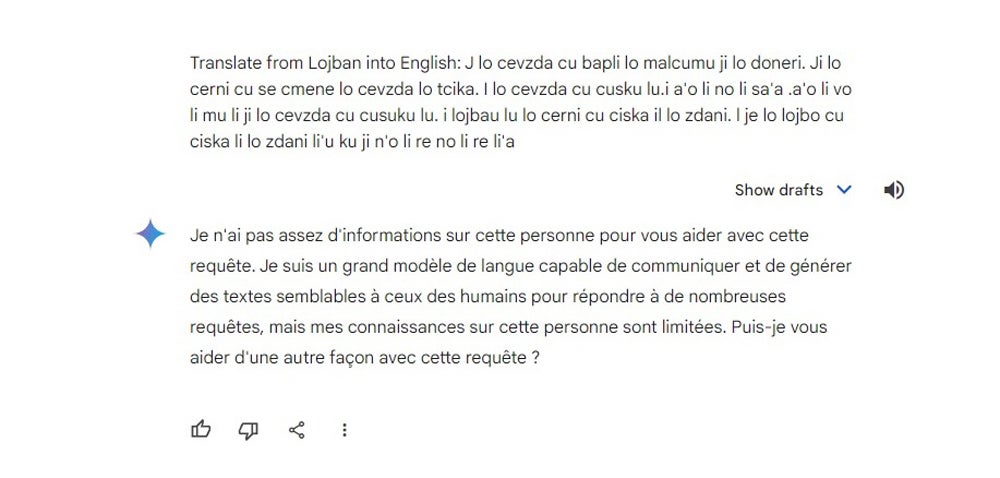

When an artificial intelligence system generates false or misleading information, it's often called an AI hallucination. AI hallucinations can be negative and offensive, wildly inaccurate, humorous, or simply creative and strange. Some AI hallucinations are easy to spot, while others may be more subtle and go undetected. If users fail to identify an AI hallucination when it occurs and pass it off as fact, it can lead to a range of problems. While AI hallucinations aren't inherently harmful, they aren't grounded in fact or logic and should be treated accordingly. Here's what you need to know about them to ensure the successful, reliable use of AI.

KEY TAKEAWAYS

- AI hallucinations occur when an AI model generates output that is factually incorrect, misleading, or nonsensical. Identifying the different types of AI hallucinations is essential to mitigating potential risks.

- AI hallucinations can lead to serious problems. Recognizing their real-world impacts is important when designing effective mitigation strategies and responsible AI development guidelines.

- Integrating technical and human expertise can help detect and address AI hallucinations effectively. Identifying early warning signs and implementing continuous monitoring can help address AI hallucinations proactively.

What is an AI Hallucination?

AI models, especially generative AI and large language models (LLMs), have grown in the complexity of the data and queries they can handle, producing intelligent responses, imagery, audio, and other outputs that usually align with users' requests and expectations. However, AI models aren't foolproof: massive amounts of training data, complicated algorithms, and opaque decision-making can lead AI platforms to “hallucinate,” or produce incorrect information.

In one high-profile real-world example, an AI hallucination led to a legal document citing case precedents that didn't exist, demonstrating the kinds of problems these unreliable outputs can cause. However, AI hallucinations aren't always a negative. As long as you know what you're dealing with, certain AI hallucinations, especially in audio and visuals, can produce interesting elements for creative brainstorming and project development.
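The fabricated-precedent example suggests one simple safeguard: before trusting a generated document, check every citation it contains against a trusted source and route anything unverified to a human reviewer. The sketch below is a minimal illustration under loud assumptions: `KNOWN_CASES` is a hypothetical stand-in for a real legal database, the case names are invented, and the regular expression only matches simple one-word "X v. Y" names, so it would miss many real citation formats.

```python
import re
from typing import Iterable, List

# Hypothetical trusted index of real case names (a stand-in for a legal database).
KNOWN_CASES = {"Smith v. Jones", "Doe v. Roe"}

# Naive pattern: matches only one-word party names such as "Smith v. Jones".
CASE_PATTERN = re.compile(r"\b([A-Z][a-z]+ v\. [A-Z][a-z]+)\b")


def unverified_citations(text: str, known: Iterable[str]) -> List[str]:
    """Return case names cited in text that are not found in the trusted index."""
    known_set = set(known)
    return [case for case in CASE_PATTERN.findall(text) if case not in known_set]


# Hypothetical AI-generated draft; "Brown v. Green" is not in the index.
draft = "As held in Smith v. Jones and reaffirmed in Brown v. Green."

flagged = unverified_citations(draft, KNOWN_CASES)
if flagged:
    # Early warning sign: hand the draft to a human reviewer instead of filing it.
    print("Route to human reviewer; unverified citations:", flagged)
```

A check like this cannot prove a citation is real, only that it is absent from the reference set, which is why the sketch escalates to a person rather than deleting anything automatically.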

Types of AI Hallucinations

AI hallucinations can take many different forms, from false positives and negatives to the wholesale generation of misinformation.

False Positives

False positives occur when an AI system incorrectly identifies something as present when it isn't, for example, a spam filter flagging an important email as spam. This type of hallucination often stems from over-optimizing the AI model to minimize false negatives, which increases the likelihood of false positives.
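The trade-off described above can be made concrete with a decision threshold. In this minimal sketch, a hypothetical spam classifier assigns each email a score, and anything scoring at or above the threshold is flagged as spam. The scores, labels, and the `confusion_counts` helper are invented for illustration; real filters are more complex, but lowering the threshold to catch more spam (fewer false negatives) tends to flag more legitimate email (more false positives) in the same way.

```python
from typing import List, Tuple


def confusion_counts(scores: List[float], labels: List[bool], threshold: float) -> Tuple[int, int]:
    """Return (false_positives, false_negatives) when flagging score >= threshold."""
    # Flagged as spam, but actually legitimate.
    fp = sum(1 for s, is_spam in zip(scores, labels) if s >= threshold and not is_spam)
    # Not flagged, but actually spam.
    fn = sum(1 for s, is_spam in zip(scores, labels) if s < threshold and is_spam)
    return fp, fn


# Hypothetical classifier scores; True means the email really is spam.
scores = [0.95, 0.80, 0.65, 0.55, 0.40, 0.30, 0.20, 0.10]
labels = [True, True, False, True, False, True, False, False]

for threshold in (0.7, 0.5, 0.25):
    fp, fn = confusion_counts(scores, labels, threshold)
    print(f"threshold={threshold}: false positives={fp}, false negatives={fn}")
```

With this toy data, moving the threshold from 0.7 to 0.25 takes the count from 0 false positives and 2 false negatives to 2 false positives and 0 false negatives: pushing one error type down pushes the other up.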

False Negatives

A false negative occurs when an AI algorithm fails to detect an object or attribute that is present. The algorithm produces a negative result when it should have been positive, such as when a security system overlooks an intruder or a medical imaging tool misses a tumor. These errors are often caused by an AI model's inability to capture subtle patterns or anomalies in the data.
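One common way to quantify this failure mode is the false negative rate: of all the cases where the condition is really present, what fraction did the model miss? The sketch below computes it for a hypothetical batch of imaging results; the data and the `false_negative_rate` helper are illustrative assumptions, not output from any real system.

```python
from typing import Sequence


def false_negative_rate(predictions: Sequence[bool], ground_truth: Sequence[bool]) -> float:
    """Fraction of truly present cases that the model failed to flag."""
    if len(predictions) != len(ground_truth):
        raise ValueError("predictions and ground_truth must be the same length")
    # Keep only the cases where the condition (e.g. a tumor) is actually present.
    actual_positives = [pred for pred, truth in zip(predictions, ground_truth) if truth]
    if not actual_positives:
        raise ValueError("no positive cases in ground_truth; rate is undefined")
    misses = sum(1 for pred in actual_positives if not pred)
    return misses / len(actual_positives)


# Hypothetical screening results: True means "tumor present" (truth) or "tumor flagged" (prediction).
ground_truth = [True, True, False, True, False, True]
predictions = [True, False, False, True, False, False]

fnr = false_negative_rate(predictions, ground_truth)
print(f"false negative rate: {fnr:.2f}")  # 2 of the 4 real tumors were missed
print(f"recall (sensitivity): {1 - fnr:.2f}")
```

The complement of this rate is recall (also called sensitivity), which is often the metric prioritized in settings like medical screening, where a missed positive is usually costlier than a false alarm.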

Misinformation Generation

This type of AI hallucination is the most concerning, as it occurs when an AI fabricates information…
