Every time ChatGPT takes three seconds to reply instead of 30, there’s probably infrastructure like vLLM working behind the scenes.

You’ve been using it without knowing it. And now, the team behind it just became an $800 million company overnight.

Here are the details

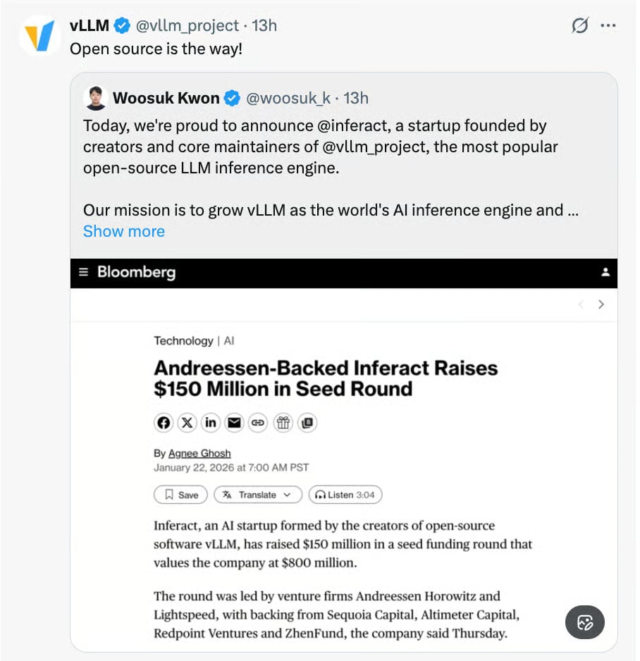

Today, Inferact launched with a massive $150 million seed round to commercialize the open-source inference engine that already powers AI at Amazon, major cloud providers, and thousands of developers worldwide. Andreessen Horowitz and Lightspeed led the round, with participation from Sequoia, Databricks, and others.

What actually is vLLM? Think of it as the difference between a traffic jam and an AI highway system. When you ask ChatGPT a question, your request goes through an “inference” process: the model generates your answer one token (roughly, a word or word fragment) at a time. vLLM makes that process dramatically faster and cheaper through two key innovations:

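PagedAttention: Manages the model’s working memory (its key-value, or KV, cache) the way your computer’s operating system manages RAM, in small fixed-size pages. According to the vLLM team, this cuts wasted memory from the 60-80% typical of earlier systems to under 4%.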

Continuous batching: Instead of processing one request at a time, vLLM works on many requests together and slots in a new one the moment any request finishes. It is like a restaurant that serves 10 tables at once and seats the next party as soon as any table frees up.
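To make the two ideas concrete, here is a toy Python simulation. It is not vLLM’s actual code or API; every name in it (`Request`, `BlockAllocator`, `serve`, `BLOCK_SIZE`) is hypothetical.

Each request gets KV-cache memory in small fixed-size blocks drawn from a shared pool, roughly the way PagedAttention pages memory. The scheduler admits a waiting request whenever a batch slot and a free block open up, which is the core of continuous batching.

The sketch makes a few simplifying assumptions:

- Each running request produces one token per step.
- Prompt tokens are ignored.
- When memory runs out, a real engine would preempt a request. This sketch instead raises an error if no request can make progress.

```python
from collections import deque
from dataclasses import dataclass, field

BLOCK_SIZE = 4  # tokens per KV-cache block (hypothetical; kept tiny for readability)


@dataclass
class Request:
    rid: str
    tokens_to_generate: int
    generated: int = 0
    # Maps logical block i of this request to a physical block id in the shared pool.
    block_table: list[int] = field(default_factory=list)


class BlockAllocator:
    """Hands out fixed-size physical blocks from one shared pool, like OS memory pages."""

    def __init__(self, num_blocks: int) -> None:
        self.free = deque(range(num_blocks))

    def allocate(self) -> int:
        return self.free.popleft()

    def release(self, blocks: list[int]) -> None:
        self.free.extend(blocks)


def serve(requests: list[Request], num_blocks: int, max_batch: int) -> list[tuple[int, str]]:
    """Simulate decoding; return (step, request id) in the order requests finish."""
    allocator = BlockAllocator(num_blocks)
    waiting = deque(requests)
    running: list[Request] = []
    finished: list[tuple[int, str]] = []
    step = 0
    while waiting or running:
        # Continuous batching: fill any open slot right away instead of
        # waiting for the whole current batch to finish.
        while waiting and len(running) < max_batch and allocator.free:
            req = waiting.popleft()
            req.block_table.append(allocator.allocate())
            running.append(req)
        step += 1
        progressed = False
        for req in list(running):
            # Paged KV cache: grab a new block only when the current one is full,
            # so each request wastes at most part of its last block.
            if req.generated > 0 and req.generated % BLOCK_SIZE == 0:
                if not allocator.free:
                    continue  # no free block this step; the request waits
                req.block_table.append(allocator.allocate())
            req.generated += 1  # stand-in for producing one token
            progressed = True
            if req.generated >= req.tokens_to_generate:
                allocator.release(req.block_table)  # blocks go straight back to the pool
                req.block_table = []
                running.remove(req)
                finished.append((step, req.rid))
        if not progressed:
            raise RuntimeError("KV-cache pool exhausted (a real engine would preempt a request)")
    return finished


reqs = [Request("a", 3), Request("b", 6), Request("c", 2)]
print(serve(reqs, num_blocks=4, max_batch=2))  # [(3, 'a'), (5, 'c'), (6, 'b')]
```

In that run, request `c` starts at step 4, right after `a` finishes at step 3. Under fixed batches, `c` would have waited for `b` to finish at step 6.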

Companies using vLLM report inference speeds 2-24x faster than standard implementations, with dramatically lower costs. The project, launched in 2023 out of UC Berkeley’s Sky Computing Lab, has attracted more than 2,000 code contributors.
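Why faster serving means lower cost comes down to simple arithmetic. This assumes two things:

- The speedup is in throughput (tokens served per second on each GPU).
- The GPU is paid for by the hour.

The sketch below uses made-up prices and rates, not figures reported by vLLM users. Cost per token scales inversely with throughput, so a 2x throughput gain halves it, and a 24x gain cuts it to about 4% of the baseline.

```python
def cost_per_million_tokens(gpu_dollars_per_hour: float, tokens_per_second: float) -> float:
    """Dollars spent per one million generated tokens on a single GPU."""
    tokens_per_hour = tokens_per_second * 3600
    return gpu_dollars_per_hour / tokens_per_hour * 1_000_000


# Hypothetical inputs: a GPU rented at $2.00/hour that serves 1,000 tokens/s at baseline.
GPU_PRICE = 2.00
BASELINE_TPS = 1_000

for speedup in (1, 2, 24):
    cost = cost_per_million_tokens(GPU_PRICE, BASELINE_TPS * speedup)
    print(f"{speedup:>2}x throughput: ${cost:.3f} per million tokens")
```

With these made-up inputs, the loop prints about $0.556, $0.278 and $0.023 per million tokens.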

Why this matters

AI is shifting from a training problem to a deployment problem.

Building a smart model is no longer the bottleneck (all the major models are good); running it affordably at scale is. As companies move from experimenting with ChatGPT to deploying AI across millions of users this year, inference optimization becomes the difference between profit and bankruptcy.

Expect every major AI company to obsess over inference economics in 2026. The winners won’t necessarily be the smartest models, but the ones that can serve predictions fast enough and cheaply enough to actually make money.

For you: If your company is evaluating AI tools, ask vendors about their inference infrastructure. Tools built on engines like vLLM will scale more cost-effectively than proprietary solutions that haven’t solved this problem. The open-source advantage here is real… and now, venture-backed.

Editor’s note: This content originally ran in the newsletter of our sister publication, The Neuron. To read more from The Neuron, sign up for its newsletter here.

The submit This $800M Startup Makes ChatGPT 24x Faster appeared first on eWEEK.