Back at Computex 2024, AMD unveiled their extremely anticipated Zen 5 CPU microarchitecture throughout AMD CEO Dr. Lisa Su’s opening keynote. AMD introduced not one however two new shopper platforms that can make the most of the newest Zen 5 cores. This contains AMD’s newest AI PC-focused chip household for the laptop computer market, the Ryzen AI 300 sequence. In comparability, the Ryzen 9000 sequence caters to the desktop market, which makes use of the preexisting AM5 platform.

Built across the new Zen 5 CPU microarchitecture with some elementary enhancements to each graphics and AI efficiency, the Ryzen AI 300 sequence, code-named Strix Point, is about to ship enhancements in a number of areas. The Ryzen AI 300 sequence seems set so as to add one other footnote within the march in direction of the AI PC with its cellular SoC that includes a brand new XDNA 2 NPU, from which AMD guarantees 50 TOPS of efficiency. AMD has additionally upgraded the built-in graphics with the RDNA 3.5, which is designed to switch the final era of RDNA Three cellular graphics, for higher efficiency in video games than we have seen earlier than.

Further to this, throughout AMD’s current Tech Day final week, AMD disclosed a number of the technical particulars concerning Zen 5, which additionally covers a variety of key components below the hood on each the Ryzen AI 300 and the Ryzen 9000 sequence. On paper, the Zen 5 structure seems fairly an enormous step up in comparison with Zen 4, with the important thing part driving Zen 5 ahead by means of greater directions per cycle than its predecessor, which is one thing AMD has managed to do constantly from Zen to Zen 2, Zen 3, Zen 4, and now Zen 5.

AMD Zen 5 Microarchitecture: 16% Better IPC Than Zen 4

Both the AMD Ryzen AI 300 sequence for cellular and the Ryzen 9000 sequence for desktops are powered by AMD’s newest Zen 5 structure, which brings a bunch of enhancements in efficiency and effectivity. Perhaps the most important enchancment inside their cellular lineup is the mixing of the XDNA 2 NPU, which is designed to utilize the Microsoft Copilot+ AI software program. These new cellular processors by way of the NPU can ship as much as 50 TOPS of AI efficiency, making them a major improve in AMD’s cellular chip lineup.

The key options below the hood of the Zen 5 microarchitecture embody a dual-pipe fetch, which is coupled with what AMD is asking superior department prediction. This is designed to scale back the latency and improve the accuracy and throughput. Enhanced instruction cache latency and bandwidth optimizations additional the movement of knowledge and the velocity of the info processing with out sacrificing accuracy.

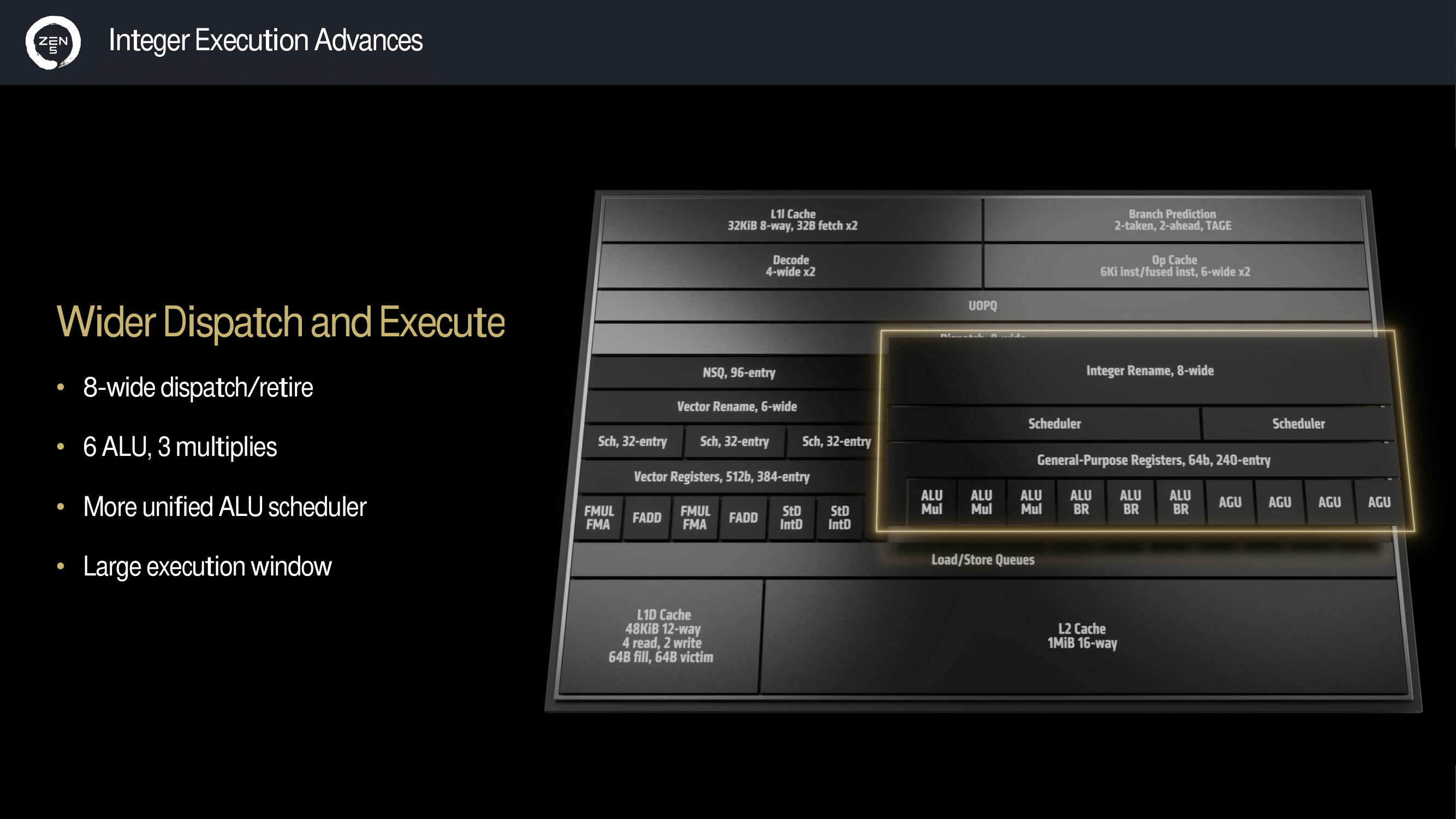

The Zen 5 integer execution capabilities have been upgraded over Zen 4, with Zen 5 that includes an 8-wide dispatch/retire system. Part of the overhaul below the hood for Zen 5 contains six Arithmetic Logic Units (ALUs) and three multipliers, that are managed by means of an ALU scheduler, and AMD is claiming Zen 5 makes use of a bigger execution window. These enhancements ought to theoretically be higher with extra complicated computational workloads.

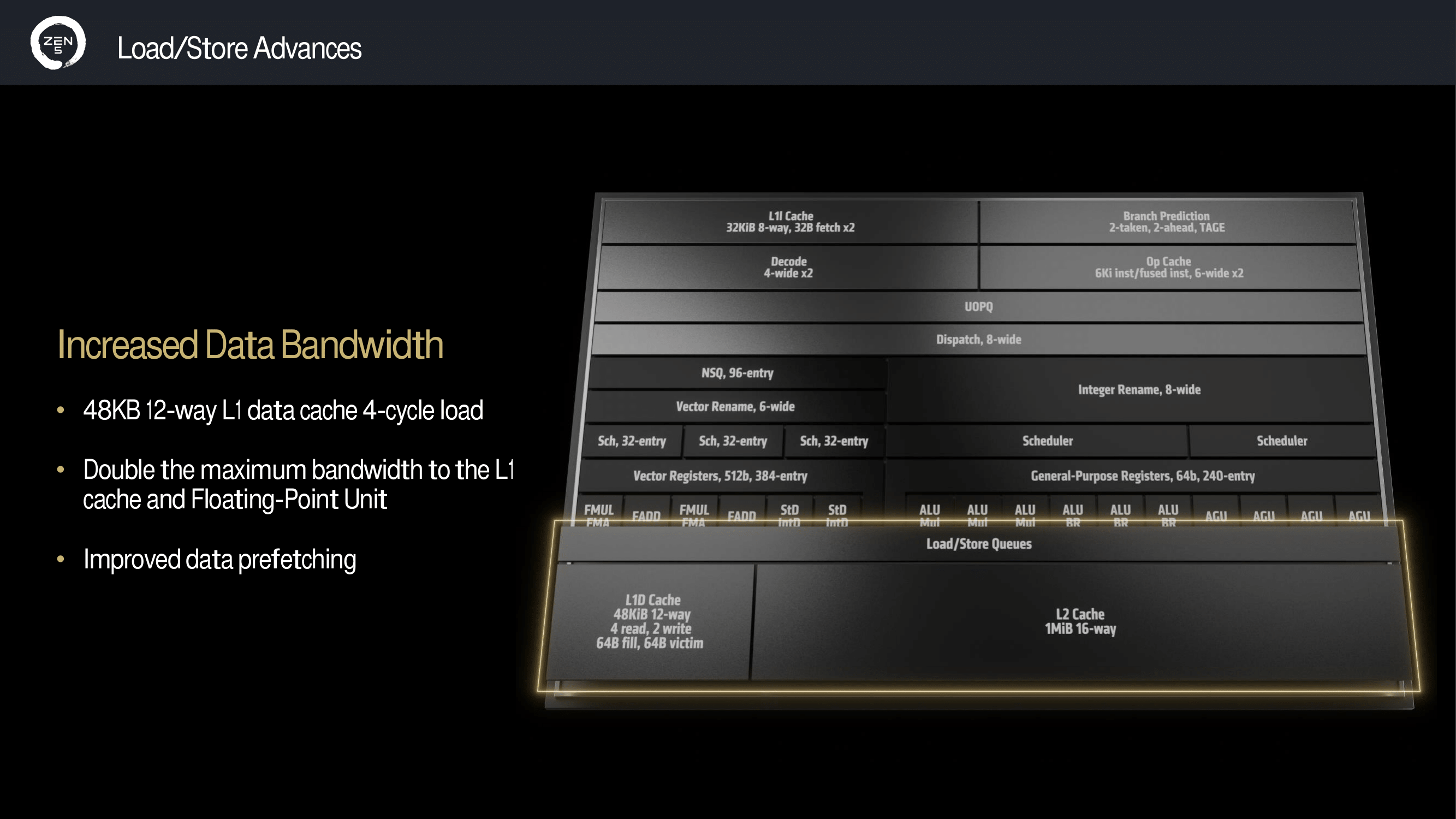

Other key enhancements that Zen 5 comes with embody extra knowledge bandwidth than Zen 4, with a 48 KB 12-way L1 knowledge cache that may cater to a 4-cycle load. AMD has doubled the utmost bandwidth out there to the L1 cache, and the Floating-Point Unit has been doubled over Zen 4. AMD additionally claims it has improved the info prefetcher, which ensures sooner and extra dependable knowledge entry and processing.

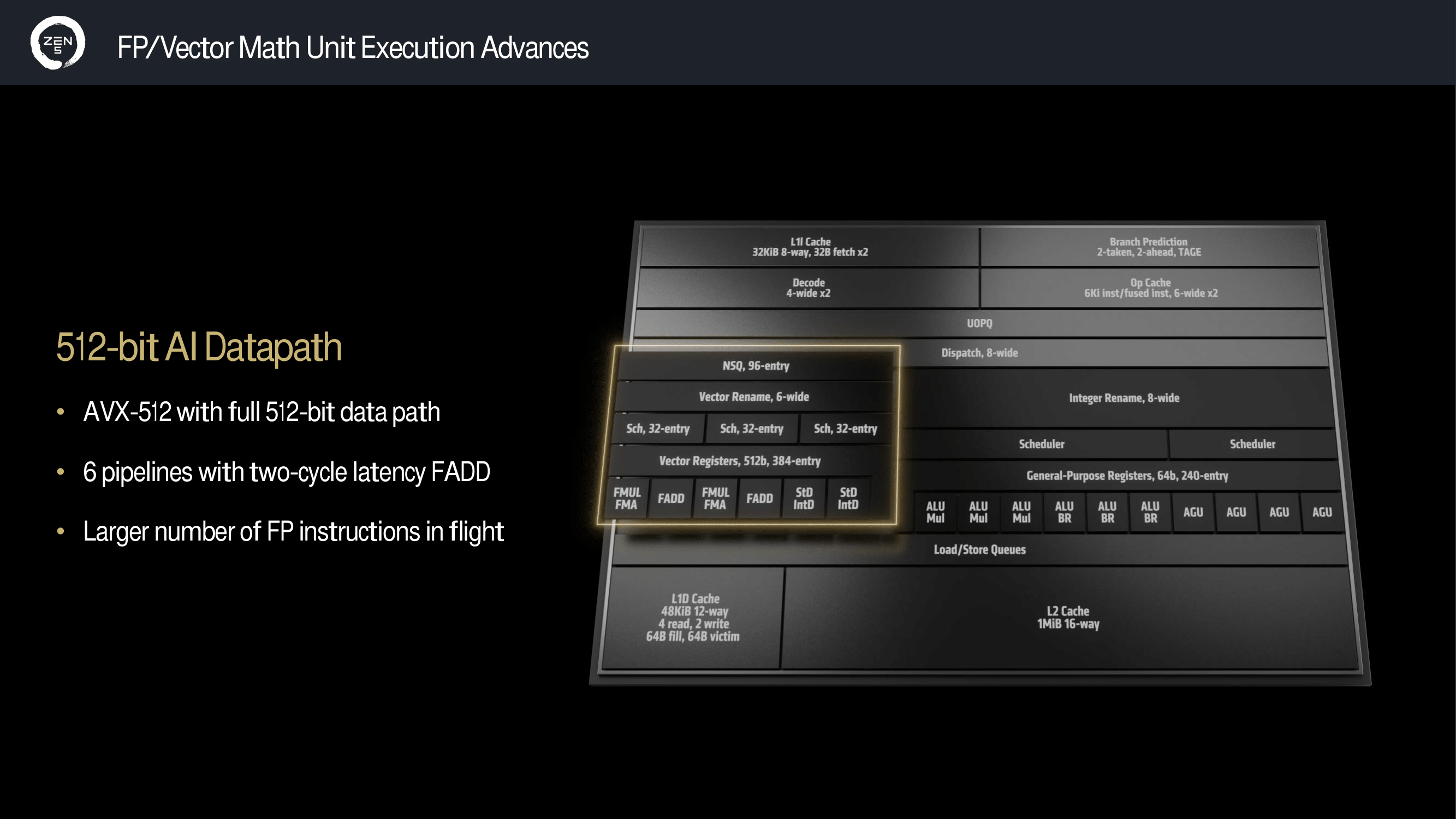

Zen 5 additionally introduces a full 512-bit AI datapath, which makes use of AVX-512 with the full 512-bit knowledge path and 6 pipelines with two-cycle latency FADD. Although Zen Four can help AVX-512 directions, it makes use of two 256-bit knowledge paths that work in tandem with one another, with the time period ‘double pumping’ being essentially the most extensively used time period for it. Zen 5 now has a full AVX-512 knowledge path, which is a welcomed…