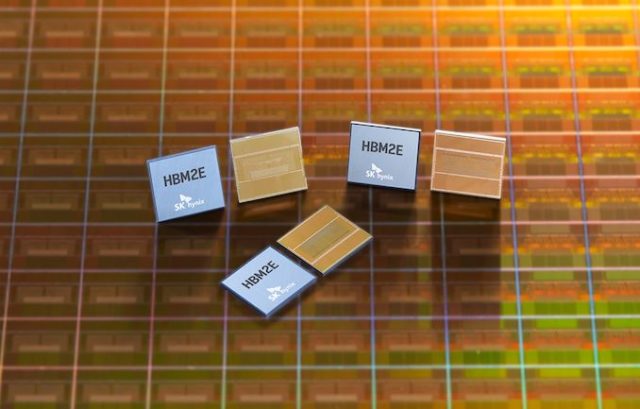

Just shy of a year ago, SK Hynix threw their hat into the ring, as it were, by becoming the second company to announce memory based on the HBM2E standard. Now the company has announced that their improved high-speed, high-density memory has gone into mass production, offering transfer rates of up to 3.6 Gbps/pin and capacities of up to 16GB per stack.

As a quick refresher, HBM2E is a small update to the HBM2 standard to improve its performance, serving as a mid-generational kicker of sorts to allow for higher clockspeeds, higher densities (up to 24GB with 12 layers), and the underlying changes that are required to make those happen. Samsung was the first memory vendor to ship HBM2E with their 16GB/stack Flashbolt memory, which runs at up to 3.2 Gbps in-spec (or 4.2 Gbps out-of-spec). This in turn has led to Samsung becoming the principal memory partner for NVIDIA’s recently launched A100 accelerator, which was launched using Samsung’s Flashbolt memory.

Today’s announcement by SK Hynix means that the rest of the HBM2E ecosystem is taking shape, and that chipmakers will soon have access to a second supplier for the speedy memory. As per SK Hynix’s initial announcement last year, their new HBM2E memory comes in 8-Hi, 16GB stacks, which is twice the capacity of their earlier HBM2 memory. Meanwhile, the memory is able to clock at up to 3.6 Gbps/pin, which is actually faster than the “just” 3.2 Gbps/pin that the official HBM2E spec tops out at. So like Samsung’s Flashbolt memory, it would seem that the 3.6 Gbps data rate is essentially an optional out-of-spec mode for chipmakers who have HBM2E memory controllers that can keep up with the memory.

At those top speeds, this gives a single 1024-pin stack a total of 460GB/sec of memory bandwidth, which rivals (or exceeds) most video cards today. And for more advanced devices which employ multiple stacks (e.g. server GPUs), this means a 6-stack configuration could reach as high as 2.76TB/sec of memory bandwidth, a massive amount by any measure.
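For readers who want to check the arithmetic, the bandwidth figures above follow from multiplying the per-pin data rate by the 1024-pin interface width and dividing by 8 bits per byte. The short Python sketch below works through it; the function names are purely illustrative, and it assumes decimal units (1 TB = 1000 GB) and peak theoretical rates rather than sustained throughput.

```python
BITS_PER_BYTE = 8
PINS_PER_STACK = 1024  # data pins per HBM2E stack, as cited in the article


def stack_bandwidth_gb_s(pin_rate_gbps: float, pins: int = PINS_PER_STACK) -> float:
    """Peak bandwidth of a single stack in GB/s (illustrative helper)."""
    return pin_rate_gbps * pins / BITS_PER_BYTE


def total_bandwidth_tb_s(pin_rate_gbps: float, stacks: int) -> float:
    """Peak aggregate bandwidth in TB/s, assuming 1 TB = 1000 GB."""
    return stack_bandwidth_gb_s(pin_rate_gbps) * stacks / 1000


if __name__ == "__main__":
    # SK Hynix's top speed of 3.6 Gbps/pin
    print(f"{stack_bandwidth_gb_s(3.6):.1f} GB/s per stack")      # 460.8 GB/s per stack
    print(f"{total_bandwidth_tb_s(3.6, 6):.2f} TB/s, 6 stacks")   # 2.76 TB/s, 6 stacks
    # The 3.2 Gbps/pin in-spec maximum, for comparison
    print(f"{stack_bandwidth_gb_s(3.2):.1f} GB/s per stack")      # 409.6 GB/s per stack
```

By the same formula, a stack running at the in-spec 3.2 Gbps/pin would peak at roughly 410GB/sec, so the faster mode is worth about 50GB/sec per stack.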

Finally, for the moment SK Hynix isn’t announcing any customers, but the company expects the new memory to be used on “next-generation AI (Artificial Intelligence) systems including Deep Learning Accelerator and High-Performance Computing.” An eventual second source for NVIDIA’s A100 would be among the most immediate use cases for the new memory, though NVIDIA is far from the only vendor to use HBM2. If anything, SK Hynix is typically very close to AMD, who is due to launch some new server GPUs over the next year for use in supercomputers and other HPC systems. So one way or another, the era of HBM2E is quickly ramping up, as more and more high-end processors are set to be introduced using the faster memory.