Deep learning algorithms are a core element of artificial intelligence (AI), as they are the processes by which a computer is able to think and learn as a human being does. A Neural Processing Unit (NPU) is a processor optimized for deep learning algorithm computation, designed to efficiently process thousands of these computations simultaneously.

Samsung Electronics last month announced its goal to strengthen its leadership in the global system semiconductor industry by 2030 by expanding development of its proprietary NPU technology. The company recently delivered an update on this goal at the Conference on Computer Vision and Pattern Recognition (CVPR), one of the top academic conferences in the field of computer vision.

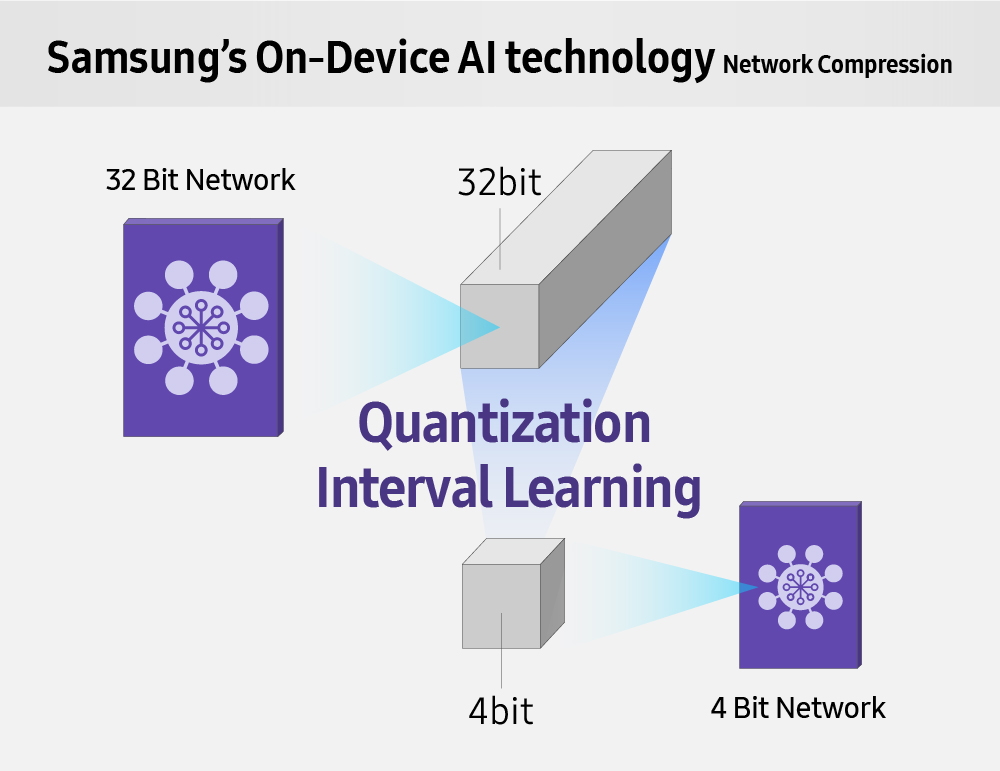

This update is the company’s development of its On-Device AI lightweight algorithm, introduced at CVPR in a paper titled “Learning to Quantize Deep Networks by Optimizing Quantization Intervals With Task Loss”. On-Device AI technologies compute and process data directly within the device itself. Over four times lighter and eight times faster than existing algorithms, Samsung’s latest algorithm solution is a dramatic improvement on previous solutions and has been evaluated as key to solving potential issues for low-power, high-speed computations.

Streamlining the Deep Learning Process

Samsung Advanced Institute of Technology (SAIT) has announced that it has successfully developed On-Device AI lightweight technology that performs computations eight times faster than the existing 32-bit deep learning data for servers. By adjusting the data into groups of under four bits while maintaining accurate data recognition, this method of deep learning algorithm processing is simultaneously much faster and much more energy efficient than existing solutions.

Samsung’s new On-Device AI processing technology determines the intervals of the significant data that influence overall deep learning performance through ‘learning’. This ‘Quantization Interval Learning (QIL)’ retains data accuracy by re-organizing the data to be presented in bits smaller than their existing size. SAIT ran experiments that successfully demonstrated how the quantization of an in-server deep learning algorithm in 32-bit intervals provided higher accuracy than other existing solutions when computed into levels of less than four bits.
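To make the idea of presenting data in fewer bits more concrete, here is a minimal Python sketch of plain uniform quantization inside a fixed interval. It is not the QIL algorithm itself: in QIL the interval is learned from the task loss, whereas here the bounds `lo` and `hi`, the function names and the sample values are all assumptions chosen by hand for illustration.

```python
def quantize(values, lo, hi, bits):
    """Map each float to an integer code for one of 2**bits evenly spaced levels in [lo, hi]."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    steps = 2 ** bits - 1            # number of steps between lo and hi
    step = (hi - lo) / steps
    codes = []
    for v in values:
        clipped = min(max(v, lo), hi)               # values outside the interval saturate
        codes.append(round((clipped - lo) / step))  # integer code in 0..steps
    return codes


def dequantize(codes, lo, hi, bits):
    """Turn integer codes back into approximate float values."""
    step = (hi - lo) / (2 ** bits - 1)
    return [lo + c * step for c in codes]


# Hypothetical 32-bit float values, stored as 3-bit codes (0..7).
sample = [-1.7, -0.4, 0.1, 0.3, 0.9, 2.5]
codes = quantize(sample, lo=-1.0, hi=1.0, bits=3)
approx = dequantize(codes, lo=-1.0, hi=1.0, bits=3)
print(codes)
print(approx)
```

The choice of interval decides which values keep fine resolution and which are clipped, which is why learning that interval, rather than fixing it by hand as above, matters for accuracy at very low bit widths.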

When the data of a deep learning computation is presented in bit groups of fewer than four bits, ‘and’ and ‘or’ computations become possible, on top of the simpler arithmetic calculations of addition and multiplication. This means that computations using the QIL process can achieve the same results as existing processes while using only 1/40 to 1/120 as many transistors.
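The following Python sketch illustrates, under stated assumptions, why low-bit data lends itself to logic operations. It computes a dot product of two vectors of unsigned 2-bit values using only bitwise AND, bit counting and shifts. This is a generic bit-plane technique, not a description of Samsung’s hardware; the function names and sample vectors are hypothetical.

```python
def bit_planes(values, bits):
    """Pack a list of unsigned ints into one Python int per bit position."""
    planes = []
    for b in range(bits):
        plane = 0
        for idx, v in enumerate(values):
            if not 0 <= v < 2 ** bits:
                raise ValueError("value does not fit in the given bit width")
            if (v >> b) & 1:
                plane |= 1 << idx    # element idx has bit b set
        planes.append(plane)
    return planes


def bitwise_dot(a, b, bits):
    """Dot product of two unsigned low-bit vectors using AND and bit counting."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    a_planes = bit_planes(a, bits)
    b_planes = bit_planes(b, bits)
    total = 0
    for i, pa in enumerate(a_planes):
        for j, pb in enumerate(b_planes):
            # AND finds positions where both bits are 1; the count is weighted by 2**(i + j).
            total += bin(pa & pb).count("1") << (i + j)
    return total


# Hypothetical 2-bit activations and weights (values 0..3).
activations = [3, 0, 2, 1, 3, 2]
weights = [1, 2, 3, 0, 2, 1]
result = bitwise_dot(activations, weights, bits=2)
reference = sum(x * y for x, y in zip(activations, weights))
print(result, reference)
```

The number of AND passes grows with the product of the two bit widths, so the approach is attractive mainly when both operands use very few bits.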

As this system therefore requires less hardware and less electricity, it can be mounted directly in-device at the place where the data for an image or fingerprint sensor is being obtained, ahead of transmitting the processed data on to the necessary end points.

The Future of AI Processing and Deep Learning

This technology will help develop Samsung’s system semiconductor capacity as well as strengthen one of the core technologies of the AI era: On-Device AI processing. Unlike AI services that use cloud servers, On-Device AI technologies compute data directly within the device itself.

On-Device AI technology can reduce the cost of cloud construction for AI operations since it operates on its own, and it provides quick and stable performance for use cases such as virtual reality and autonomous driving. Furthermore, On-Device AI technology can safely store personal biometric information used for device authentication, such as fingerprint, iris and face scans, on mobile devices.

“Ultimately, in the future we will live in a world where all devices and sensor-based technologies are powered by AI,” noted Chang-Kyu…