Integration of HBM-PIM with the Xilinx Alveo AI accelerator system will boost overall system performance by 2.5X while reducing energy consumption by more than 60%

PIM architecture will be broadly deployed beyond HBM, to include mainstream DRAM modules and mobile memory

Samsung Electronics, the world leader in advanced memory technology, today showcased its latest advancements in processing-in-memory (PIM) technology at Hot Chips 33, a leading semiconductor conference where the most notable microprocessor and IC innovations are unveiled each year. Samsung's announcements include the first successful integration of its PIM-enabled High Bandwidth Memory (HBM-PIM) into a commercialized accelerator system, as well as broadened PIM applications that now include DRAM modules and mobile memory, accelerating the move toward the convergence of memory and logic.

First Integration of HBM-PIM Into an AI Accelerator

In February, Samsung introduced the industry's first HBM-PIM (Aquabolt-XL), which incorporates AI processing capability into Samsung's HBM2 Aquabolt to enhance high-speed data processing in supercomputers and AI applications. The HBM-PIM has since been tested in the Xilinx Virtex UltraScale+ (Alveo) AI accelerator, where it delivered an almost 2.5X system performance gain as well as a more than 60% cut in energy consumption.

"HBM-PIM is the industry's first AI-tailored memory solution being tested in customer AI-accelerator systems, demonstrating tremendous commercial potential," said Nam Sung Kim, senior vice president of DRAM Product & Technology at Samsung Electronics. "Through standardization of the technology, applications will become numerous, expanding into HBM3 for next-generation supercomputers and AI applications, and even into mobile memory for on-device AI as well as for memory modules used in data centers."

"Xilinx has been collaborating with Samsung Electronics to enable high-performance solutions for data center, networking and real-time signal processing applications starting with the Virtex UltraScale+ HBM family, and recently introduced our new and exciting Versal HBM series products," said Arun Varadarajan Rajagopal, senior director, Product Planning at Xilinx, Inc. "We are delighted to continue this collaboration with Samsung as we help to evaluate HBM-PIM systems for their potential to achieve major performance and energy-efficiency gains in AI applications."

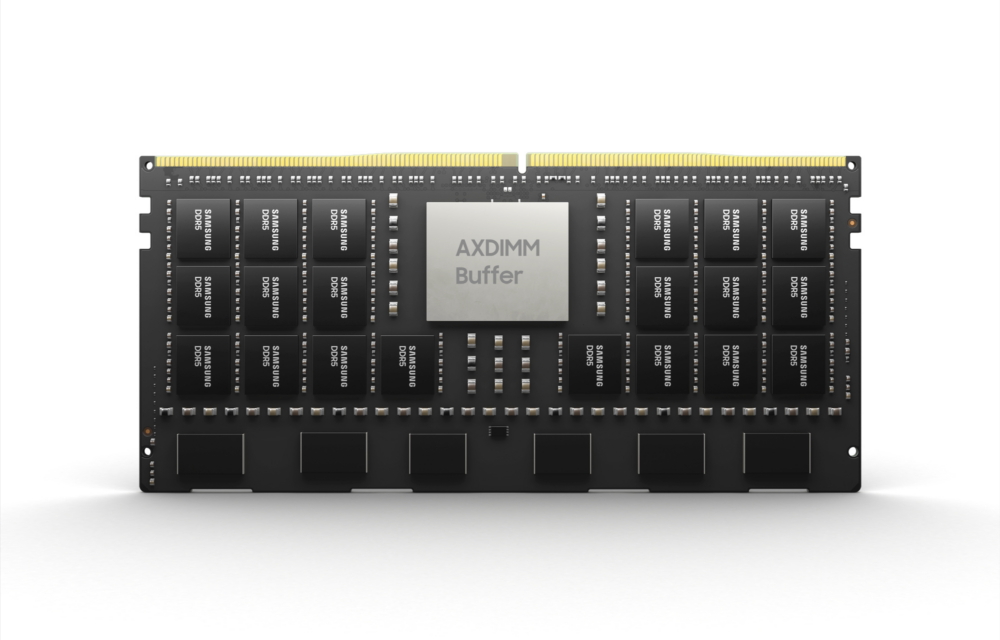

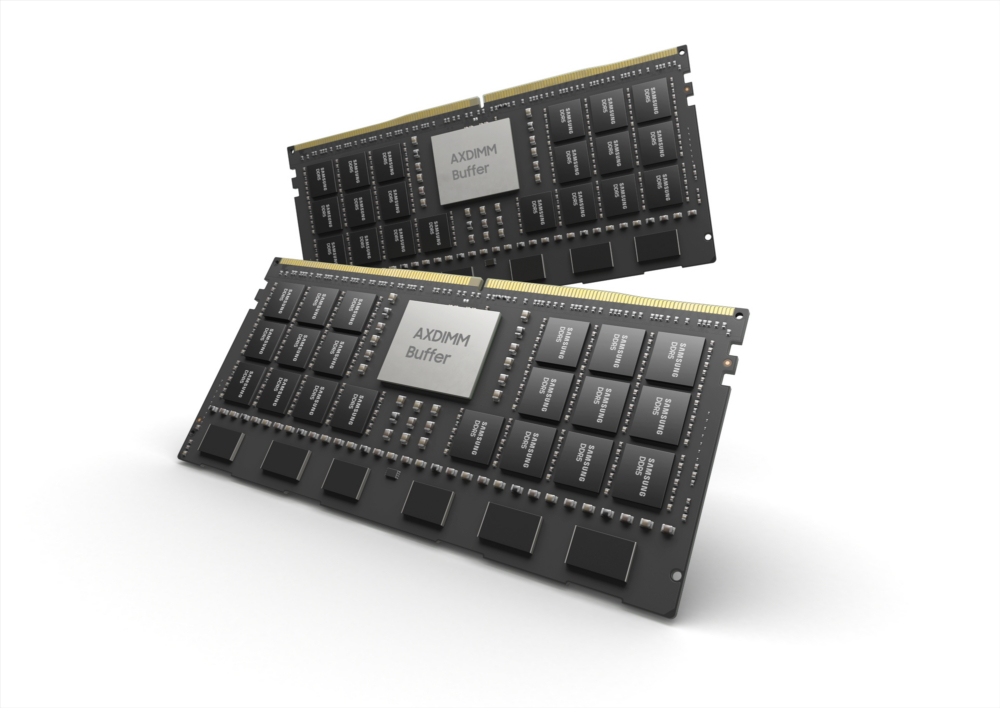

DRAM Modules Powered by PIM

The Acceleration DIMM (AXDIMM) brings processing to the DRAM module itself, minimizing large-scale data movement between the CPU and DRAM to boost the energy efficiency of AI accelerator systems. With an AI engine built inside the buffer chip, the AXDIMM can process multiple memory ranks (sets of DRAM chips) in parallel instead of accessing just one rank at a time, greatly enhancing system performance and efficiency. Because the module retains the traditional DIMM form factor, the AXDIMM allows drop-in replacement without requiring system changes. Currently being tested on customer servers, the AXDIMM can offer approximately twice the performance in AI-based recommendation applications and a 40% decrease in system-wide energy usage.

"SAP has been continuously collaborating with Samsung on their new and emerging memory technologies to deliver optimal performance on SAP HANA and help database acceleration," said Oliver Rebholz, head of HANA core research & innovation at SAP. "Based on performance projections and potential integration scenarios, we expect significant performance improvements for in-memory database management system (IMDBMS) and higher energy efficiency via disaggregated computing on AXDIMM. SAP is looking to continue its collaboration with Samsung in this area."

Mobile Memory That Brings AI From Data Center to Device

Samsung's LPDDR5-PIM mobile memory technology can…