How do machine learning models do what they do? And are they really “thinking” or “reasoning” the way we understand those things? This is a philosophical question as much as a practical one, but a new paper making the rounds Friday suggests that the answer is, at least for now, a pretty clear “no.”

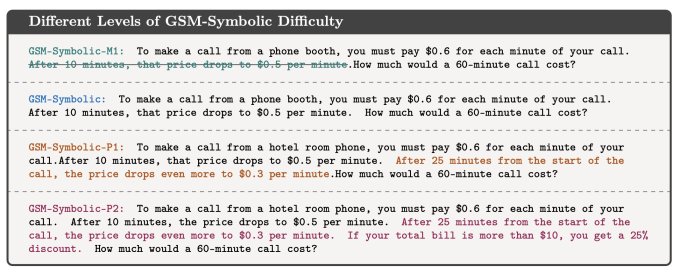

A group of AI research scientists at Apple released their paper, “Understanding the limitations of mathematical reasoning in large language models,” to general commentary Thursday. While the deeper concepts of symbolic learning and pattern reproduction are a bit in the weeds, the basic concept of their research is very easy to grasp.

Let’s say I asked you to solve a simple math problem like this one:

Oliver picks 44 kiwis on Friday. Then he picks 58 kiwis on Saturday. On Sunday, he picks double the number of kiwis he did on Friday. How many kiwis does Oliver have?

Obviously, the answer is 44 + 58 + (44 * 2) = 190. Though large language models are actually spotty on arithmetic, they can pretty reliably solve something like this. But what if I threw in a little random extra info, like this:

Oliver picks 44 kiwis on Friday. Then he picks 58 kiwis on Saturday. On Sunday, he picks double the number of kiwis he did on Friday, but five of them were a bit smaller than average. How many kiwis does Oliver have?

It’s the same math problem, right? And of course even a grade-schooler would know that even a small kiwi is still a kiwi. But as it turns out, this extra data point confuses even state-of-the-art LLMs. Here’s GPT-o1-mini’s take:

… on Sunday, 5 of these kiwis were smaller than average. We need to subtract them from the Sunday total: 88 (Sunday’s kiwis) - 5 (smaller kiwis) = 83 kiwis
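To make the gap concrete, here is a minimal Python sketch that works through both versions of the arithmetic. The variable names are ours, chosen for illustration. Note also that the quoted excerpt stops at the Sunday figure of 83; carrying that number through to a final total of 185 is our own arithmetic, not something quoted from the model.

```python
# Quantities taken directly from the word problem.
friday = 44
saturday = 58
sunday = 2 * friday  # "double the number he did on Friday" -> 88

# Correct reading: size is irrelevant, so every kiwi counts.
correct_total = friday + saturday + sunday

# Mistaken reading: treat "five were smaller" as a subtraction,
# mirroring the step shown in the quoted model output.
smaller_kiwis = 5
mistaken_sunday = sunday - smaller_kiwis
# Assumption: we carry the mistaken Sunday figure into the sum ourselves.
mistaken_total = friday + saturday + mistaken_sunday

print(f"correct total:  {correct_total}")
print(f"mistaken total: {mistaken_total}")
```

The only difference between the two totals is the five kiwis the irrelevant clause invited the model to remove.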

This is just a simple example out of hundreds of questions that the researchers lightly modified, but nearly all of which led to enormous drops in success rates for the models attempting them.
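For a rough sense of what “lightly modified” can mean in practice, the sketch below builds the kiwi question from a template and optionally appends an irrelevant clause. This is purely an illustration under our own assumptions: the function `make_problem` and its parameters are hypothetical and are not the researchers’ tooling. The key property is that the ground-truth answer is computed the same way whether or not the distractor is present.

```python
def make_problem(name, friday, saturday, distractor=None):
    """Build a kiwi word problem and its ground-truth answer.

    Hypothetical helper for illustration only. `distractor` is an
    optional irrelevant clause; it changes the text but never the answer.
    The pronoun "he" is hard-coded to keep the sketch short.
    """
    sunday_sentence = (
        "On Sunday, he picks double the number of kiwis he did on Friday"
    )
    if distractor:
        sunday_sentence += f", but {distractor}"
    question = (
        f"{name} picks {friday} kiwis on Friday. "
        f"Then he picks {saturday} kiwis on Saturday. "
        f"{sunday_sentence}. "
        f"How many kiwis does {name} have?"
    )
    # The answer depends only on the numbers, not on the distractor.
    answer = friday + saturday + 2 * friday
    return question, answer


plain_question, plain_answer = make_problem("Oliver", 44, 58)
noisy_question, noisy_answer = make_problem(
    "Oliver", 44, 58,
    distractor="five of them were a bit smaller than average",
)

print(plain_question)
print(noisy_question)
print(plain_answer, noisy_answer)
```

A model that had truly grasped the problem should give the same answer to both questions; the failures described here show up as a gap between the two.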

Now, why should this be? Why would a model that understands the problem be thrown off so easily by a random, irrelevant detail? The researchers propose that this reliable mode of failure means the models don’t really understand the problem at all. Their training data does allow them to respond with the correct answer in some situations, but as soon as the slightest actual “reasoning” is required, such as whether to count small kiwis, they start producing weird, unintuitive results.

As the researchers put it in their paper:

[W]e investigate the fragility of mathematical reasoning in these models and demonstrate that their performance significantly deteriorates as the number of clauses in a question increases. We hypothesize that this decline is due to the fact that current LLMs are not capable of genuine logical reasoning; instead, they attempt to replicate the reasoning steps observed in their training data.

This observation is consistent with the other qualities often attributed to LLMs due to their facility with language. When, statistically, the phrase “I love you” is followed by “I love you, too,” the LLM can easily repeat that, but it doesn’t mean it loves you. And although it can follow complex chains of reasoning it has been exposed to before, the fact that this chain can be broken by even superficial deviations suggests that it doesn’t actually reason so much as replicate patterns it has observed in its training data.

Mehrdad Farajtabar, one of the co-authors, breaks down the paper very nicely in this thread on X.

An OpenAI researcher, while commending Mirzadeh et al’s work, objected to their conclusions, saying that correct results could likely be achieved in all these failure cases with a bit of prompt engineering. Farajtabar (responding with the typical yet admirable friendliness researchers tend to employ) noted that while better prompting may work for simple deviations, the model may require exponentially more contextual data in order to…