Today Qualcomm is revealing more details on the "Cloud AI 100" inference chip and platform it announced last year. The company says the new inference platform has already entered production, with first silicon successfully back from the fab and first customer sampling under way.

The Cloud AI 100 is Qualcomm's first foray into the datacentre AI inference accelerator business. It represents the company's investments into machine learning, leveraging the expertise it has built up in the consumer mobile SoC world and bringing it to the enterprise market. Qualcomm first revealed the Cloud AI 100 early last year, although admittedly that was more of a paper launch than a disclosure of what the hardware actually brought to the table.

Today, with actual silicon in the lab, Qualcomm is divulging more details about the architecture of the inferencing design, as well as its performance and power targets.

Starting off at a high level, Qualcomm is presenting us with the various performance targets that the Cloud AI 100 chip is meant to achieve in its different form-factor deployments.

Qualcomm is targeting three different form factors for the commercialisation of the solution: a full-blown PCIe accelerator card that is meant to achieve up to an astounding 400 TOPS of inference performance at a 75W TDP, and two cards in the DM.2 and DM.2e form factors with TDPs of 25W and 15W respectively. The DM.2 form factor is akin to two M.2 connectors placed next to each other and is gaining popularity in the enterprise market, while the DM.2e design is a smaller form factor with a lower-power thermal envelope.
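As a rough illustration of what the headline figure implies, the sketch below divides the quoted peak INT8 throughput by the board TDP. It assumes the full 400 TOPS is reachable within the 75W envelope, which makes the result an on-paper upper bound rather than a measured efficiency. Qualcomm did not quote peak TOPS for the DM.2 and DM.2e cards here, so those entries are left empty instead of guessed; the names `FORM_FACTORS` and `peak_tops_per_watt` are illustrative only.

```python
from typing import Optional

# Form factors and TDPs as stated by Qualcomm. Peak TOPS was only quoted
# for the PCIe card, so the other entries are deliberately left as None.
FORM_FACTORS = {
    "PCIe": {"tdp_w": 75.0, "peak_tops": 400.0},
    "DM.2": {"tdp_w": 25.0, "peak_tops": None},
    "DM.2e": {"tdp_w": 15.0, "peak_tops": None},
}


def peak_tops_per_watt(peak_tops: Optional[float], tdp_w: float) -> Optional[float]:
    """Peak INT8 TOPS divided by TDP: an upper bound, not a measured figure."""
    if peak_tops is None:
        return None
    return peak_tops / tdp_w


for name, spec in FORM_FACTORS.items():
    eff = peak_tops_per_watt(spec["peak_tops"], spec["tdp_w"])
    label = f"{eff:.2f} TOPS/W" if eff is not None else "peak TOPS not disclosed"
    print(f"{name:6s} {spec['tdp_w']:>4.0f} W  {label}")
```

For the PCIe card this works out to roughly 5.3 TOPS/W of peak INT8 throughput.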

Qualcomm explains that from an architecture perspective, the design builds on lessons learned from the neural processing units the company has deployed in its mobile Snapdragon SoCs, but it is nonetheless a distinct architecture that was designed from the ground up and optimised for enterprise workloads.

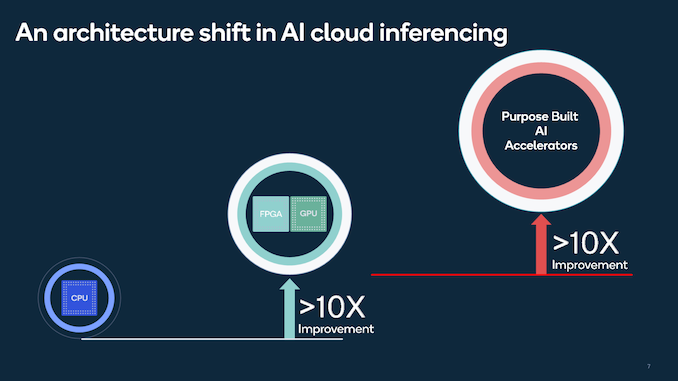

The big advantage of a dedicated AI design over existing general-purpose computing hardware such as CPUs, or even FPGAs and GPUs, is that purpose-built hardware can achieve both higher performance and much higher power efficiency than is otherwise within reach of "traditional" platforms.

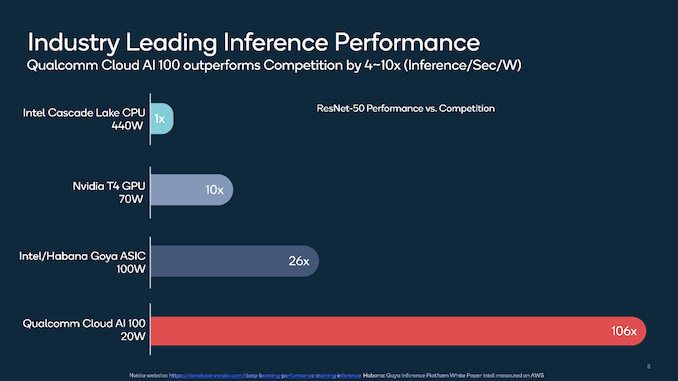

In terms of performance figures, Qualcomm presented ResNet-50 inferences-per-second-per-watt figures against the most commonly deployed industry solutions today, including Intel's Goya inference accelerator and Nvidia's inference-targeted T4 accelerator, which is based on a cut-down TU104 GPU die.

The Cloud AI 100 is claimed to achieve significant leaps in performance/W over its competition, although we have to note that this chart mixes different form factors, power targets and absolute performance targets, so it is not an apples-to-apples comparison.

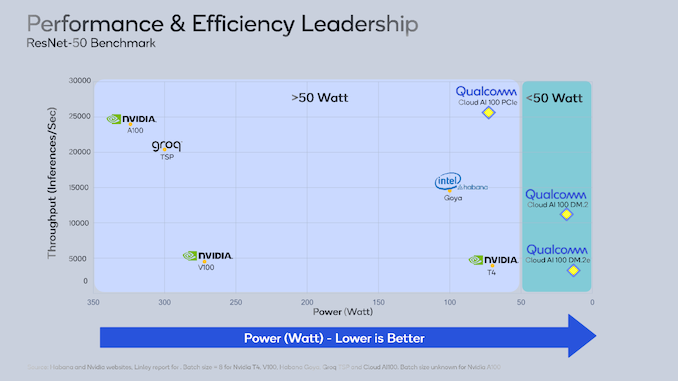

Qualcomm presents the data in another performance/power chart which offers a comparatively fairer comparison. The most interesting claim here is that, within the 75W PCIe form factor, the company says it can beat even Nvidia's 250W A100 accelerator based on the latest Ampere architecture. Similarly, it claims double the performance of the Goya accelerator at 25% less power.
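Translating these two claims into relative performance per watt takes one division each. The sketch below assumes the comparison is made at the cards' rated power figures (75W versus 250W for the A100, and "25% less power" read as a power ratio of 0.75 for Goya) rather than measured draw, and treats "beat" as a performance ratio of at least 1.0, so the A100 result is only a lower bound. The helper name `relative_perf_per_watt` is illustrative.

```python
def relative_perf_per_watt(perf_ratio: float, power_ratio: float) -> float:
    """Perf/W advantage of chip A over chip B.

    perf_ratio  = perf(A) / perf(B)
    power_ratio = power(A) / power(B)
    """
    return perf_ratio / power_ratio


# Goya claim: 2x the performance at 25% less power (power ratio 0.75).
goya_advantage = relative_perf_per_watt(2.0, 0.75)

# A100 claim: at least equal performance (ratio >= 1.0) at 75W versus 250W,
# so this is a floor on the perf/W advantage, not an exact figure.
a100_advantage_floor = relative_perf_per_watt(1.0, 75.0 / 250.0)

print(f"vs Goya: {goya_advantage:.2f}x perf/W")
print(f"vs A100: at least {a100_advantage_floor:.2f}x perf/W")
```

Both ratios only hold for the workload Qualcomm chose to chart, which is why the hardware context that follows matters.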

These performance claims are quite incredible, and they can be partly explained by the fact that the workload being tested here shows Qualcomm's architecture in the best possible light. A little more context can be derived from the hardware specification disclosures:

The chip consists of 16 "AI Cores", or AICs, which collectively reach up to 400 TOPS of INT8 inference MAC throughput. The chip's memory subsystem is backed by four 64-bit LPDDR4X memory controllers running at 2100MHz…
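The disclosed numbers allow some back-of-the-envelope arithmetic: per-core throughput, the theoretical peak DRAM bandwidth, and how many INT8 operations the chip must perform per byte fetched from DRAM to stay compute-bound. This is a sketch under stated assumptions: that "2100MHz" is the memory I/O clock with two transfers per clock (double data rate, giving 4200 MT/s), that bandwidth is expressed in decimal gigabytes, and that the result is a theoretical peak rather than sustained bandwidth. The constant names are illustrative.

```python
NUM_AICS = 16                # "AI Cores" per chip, as stated
PEAK_INT8_TOPS = 400.0       # chip-level peak, as stated
NUM_CONTROLLERS = 4          # LPDDR4X controllers, as stated
CONTROLLER_WIDTH_BITS = 64   # per controller, as stated
MEM_CLOCK_MHZ = 2100         # as stated
TRANSFERS_PER_CLOCK = 2      # assumption: double data rate

# Peak throughput per AI Core, assuming work is split evenly.
tops_per_aic = PEAK_INT8_TOPS / NUM_AICS

# Aggregate bus width in bytes and theoretical peak bandwidth in GB/s.
bus_width_bytes = NUM_CONTROLLERS * CONTROLLER_WIDTH_BITS // 8
transfers_per_second = MEM_CLOCK_MHZ * 1e6 * TRANSFERS_PER_CLOCK
peak_bandwidth_gbs = transfers_per_second * bus_width_bytes / 1e9

# INT8 ops (counted as in the quoted TOPS figure) needed per DRAM byte
# for the chip to remain compute-bound at peak.
ops_per_byte = (PEAK_INT8_TOPS * 1e12) / (peak_bandwidth_gbs * 1e9)

print(f"Per-AIC peak: {tops_per_aic:.1f} TOPS")
print(f"Aggregate bus width: {bus_width_bytes * 8} bits")
print(f"Peak DRAM bandwidth: {peak_bandwidth_gbs:.1f} GB/s")
print(f"Ops per DRAM byte at peak: {ops_per_byte:.0f}")
```

Under these assumptions each AIC contributes about 25 TOPS, and the chip needs on the order of a few thousand operations per DRAM byte to hit peak, so workloads whose data largely stays on-chip are the ones most likely to approach the quoted figures.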