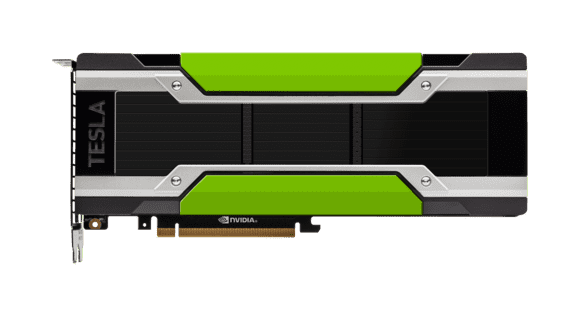

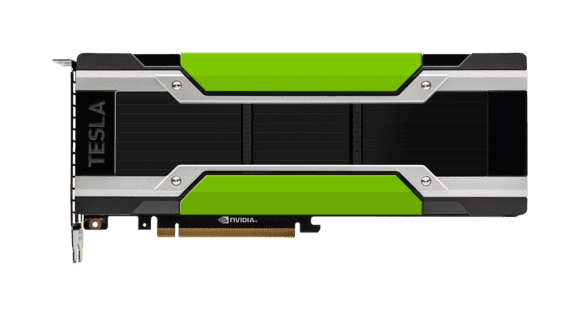

Nvidia’s new Tesla P4 and P40 GPUs are targeted at deep learning

Nvidia’s Tesla P40 GPU is for deep learning.

Credit: Nvidia

Autonomous cars need a new kind of horsepower to identify objects, avoid obstacles and change lanes. There’s a good chance that will come from graphics processors in data centers or even the trunks of cars.

With this scenario in mind, Nvidia has built two new GPUs—the Tesla P4 and P40—based on the Pascal architecture and designed for servers or computers that will help drive autonomous cars. In recent years, Tesla GPUs have been targeted at supercomputing, but they are now being tweaked for deep-learning systems that aid in correlation and classification of data.

“Deep learning” typically refers to a class of algorithmic techniques based on highly connected neural networks—systems of nodes with weighted interconnections among them.
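To make "nodes with weighted interconnections" concrete, here is a minimal sketch of a two-layer network in plain Python. The weights, biases and the sigmoid activation are arbitrary choices for illustration, not taken from any Nvidia software or real model.

```python
import math

def neuron(inputs, weights, bias):
    """One node: a weighted sum of its inputs, squashed by a sigmoid."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))

def layer(inputs, weight_rows, biases):
    """A layer is several nodes that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical weights and inputs, chosen only for illustration.
hidden = layer([0.5, -1.0], [[1.0, 2.0], [-1.0, 0.5]], [0.0, 0.1])
output = neuron(hidden, [1.5, -2.0], 0.2)
print(output)
```

Training a network means adjusting those weights; inference means running inputs through fixed weights, as this sketch does.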

It’s all part of a general trend: as more data is transmitted to the cloud via all sorts of systems and devices, it passes through deep-learning systems for answers, context and insights.

For example, Facebook and Google have built deep-learning systems around GPUs for image recognition and natural language processing. Meanwhile, Nvidia says Baidu’s Deep Speech 2 speech recognition platform is built around its Tesla GPUs.

The new Teslas have the horsepower to be regular GPUs. The P40 has 3,840 CUDA cores, offers 12 teraflops of single-precision performance, has 24GB of GDDR5 memory and draws 250 watts of power. The P4 has 2,560 cores, delivers 5.5 teraflops of single-precision performance, has 8GB of GDDR5 memory, and draws up to 75 watts of power.
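One way to compare the two cards is peak single-precision throughput per watt, using only the figures quoted above. This is rough arithmetic: it treats the stated power draw as if it were sustained at peak throughput, which is an assumption and not a measured result.

```python
# Figures as quoted in the article: peak single-precision teraflops and watts.
specs = {
    "P40": {"tflops": 12.0, "watts": 250},
    "P4": {"tflops": 5.5, "watts": 75},
}

def gflops_per_watt(tflops, watts):
    """Convert teraflops to gigaflops, then divide by power draw."""
    return tflops * 1000 / watts

efficiency = {
    name: gflops_per_watt(s["tflops"], s["watts"]) for name, s in specs.items()
}
for name, value in efficiency.items():
    print(f"{name}: {value:.1f} GFLOPS per watt")
```

By this measure the P40 comes out at 48 gigaflops per watt and the P4 at roughly 73, which fits the smaller card's positioning for power-constrained systems.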

Nvidia has also added deep-learning features to the GPUs. Fast GPUs usually boast double-precision performance for more accurate calculations, but the new Teslas also handle low-precision calculations. Each core processes a chunk of information; these blocks of data can be strung together to interpret information and infer the answers to questions about, for example, what objects are in an image or what words people are speaking.

Deep-learning systems rely on such low-precision calculations for inferencing mostly because double-precision calculations, which would deliver more accurate results but require more processing power, would slow down GPUs.
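The trade-off between precision and speed can be illustrated with a small sketch of 8-bit integer arithmetic, the kind of reduced-precision math inference often uses. The scaling scheme, function names and sample values below are hypothetical and are not Nvidia's implementation; the point is only that the approximate answer lands close to the exact one.

```python
def quantize(values, scale):
    """Map floats to 8-bit integers in [-127, 127] using a shared scale."""
    return [max(-127, min(127, round(v / scale))) for v in values]

def int8_dot(a, b, scale_a, scale_b):
    """Dot product done on integers, rescaled to a float at the end."""
    qa, qb = quantize(a, scale_a), quantize(b, scale_b)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer multiply-accumulate
    return acc * scale_a * scale_b

# Hypothetical activations and weights, for illustration only.
activations = [0.12, -0.53, 0.98, 0.40]
weights = [0.75, 0.10, -0.33, 0.61]
scale_a = max(abs(v) for v in activations) / 127
scale_w = max(abs(v) for v in weights) / 127

exact = sum(x * y for x, y in zip(activations, weights))
approx = int8_dot(activations, weights, scale_a, scale_w)
print(exact, approx)
```

Each value is stored in one byte instead of four or eight, so hardware can move and multiply more of them per cycle, at the cost of a small rounding error in the result.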

Nvidia earlier this year released the Tesla P100, which is faster than the upcoming P4 and P40. The P100 is aimed at high-end servers and used to fine-tune deep-learning neural networks.

The new Tesla P4 and P40 GPUs support low-precision integer and floating-point processing for deep learning and can also be used for inferencing and approximation at a local level. The idea is that certain types of systems and cars can’t always be connected to the cloud and will have to do processing locally.

Intel is also adding low-precision processing for approximations to its upcoming Knights Mill chip, which is likewise designed for deep learning.

The Tesla P4 and P40 succeed the Tesla M4 and M40, which were released last year for graphics processing and virtualization. The new GPUs will be able to do those things as well.

The Tesla P40 will ship in October, while the P4 will ship in November. The GPUs will be available in servers from Dell, Hewlett Packard Enterprise, Lenovo, Quanta, Wistron, Inventec and Inspur. The server vendors will decide the price of the GPUs.