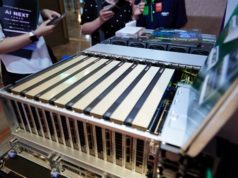

On July 20, NVIDIA launched TensorRT 8, a software development kit (SDK) designed to help companies build smarter, more interactive language apps from cloud to edge. The latest version of the SDK is available for free to members of NVIDIA’s developer program. Plug-ins, parsers, and samples are also available to developers from the TensorRT GitHub repository.

TensorRT 8 features the latest innovations in deep learning inference, the process of applying knowledge from a trained neural network model to understand how the data affects the response. TensorRT 8 cuts inference time in half for language queries using two key features:

- Sparsity is a new performance technique in the NVIDIA Ampere architecture graphics processing units (GPUs), which increases efficiency for developers by reducing computational operations. Not all parts of a deep learning model are equally important, and some can be turned down to zero. Computations therefore don’t need to be performed on those particular “weights,” or parameters, within a neural network. Using sparsity within GPUs, NVIDIA is able to turn down nearly half of the weights on certain models for improved performance, throughput, and latency.

- Quantization allows developers to use trained models to run inference in eight-bit computations (referred to as INT8), which significantly reduces compute and storage for inference on Tensor Cores. INT8 has grown in popularity for optimizing machine learning frameworks like TensorFlow and NVIDIA’s TensorRT because it reduces memory and computing requirements. By applying this technique, NVIDIA is able to retain accuracy while offering exceedingly high performance in TensorRT 8.
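The two techniques in the list above can be illustrated with a minimal sketch in plain Python. This is not TensorRT code and makes several assumptions: the function names `prune_2_of_4`, `quantize_int8`, and `dequantize` are hypothetical, the pruning rule assumes the 2:4 structured pattern (two nonzero weights kept in every group of four) that Ampere GPUs accelerate, and the quantizer assumes a simple symmetric per-tensor scale rather than whatever calibration a real deployment would use.

```python
# Illustrative sketch only: plain Python, not the TensorRT API.

def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in each group of four weights."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude weights in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # Assumed scale choice: the largest magnitude maps to 127.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the INT8 codes."""
    return [q * scale for q in quantized]


weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.2, 0.03, -0.6]
sparse = prune_2_of_4(weights)        # half of the entries become zero
codes, scale = quantize_int8(sparse)  # eight-bit integer codes plus one scale
restored = dequantize(codes, scale)   # close to `sparse`, within scale / 2
```

In this toy example, half of the weights are zeroed and each remaining weight is stored as a single eight-bit code, which is the kind of reduction in compute and storage the article describes. The accuracy a real model retains depends on how it is pruned, fine-tuned, and calibrated, which this sketch does not attempt to show.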

TensorRT is widely deployed across many industries

Over the past five years, developers in industries spanning healthcare, automotive, financial services, and retail have downloaded TensorRT nearly 2.5 million times.

For example, GE Healthcare is using TensorRT to power its cardiovascular ultrasound systems. The digital diagnostics solutions provider implemented automated cardiac view detection on its Vivid E95 scanner, accelerated with TensorRT. With an improved view detection algorithm, cardiologists can make more accurate diagnoses and identify diseases in their early stages. Other companies using TensorRT include Verizon, Ford, the US Postal Service, American Express, and other large brands.

NVIDIA also introduced in TensorRT 8 a flexible set of compiler optimizations that provide twice the performance of TensorRT 7, regardless of the transformer model a company is using. TensorRT 8 is able to run BERT-Large, a widely used transformer-based model, in 1.2 milliseconds, which means companies can double or triple their model size for greater accuracy.

Numerous inference services use language models like BERT-Large behind the scenes. However, language-based apps typically don’t understand nuance or emotion, which creates a subpar experience across the board. With TensorRT 8, companies can now deploy an entire workflow within a millisecond. These advancements could enable a new generation of conversational AI apps that offer a smarter, low-latency experience to users.

“This is a huge improvement beyond what we have ever delivered in the past,” said Sharma. “We look forward to seeing how developers are going to use TensorRT 8.”

Real-Time Apps with AI

Real-time applications that use artificial intelligence (AI), like chatbots, are on the rise. But as AI gets smarter and better at delivering new kinds of services, it gets more complicated and harder to compute. This creates some challenges for those building AI-based services.

Today’s developers should…