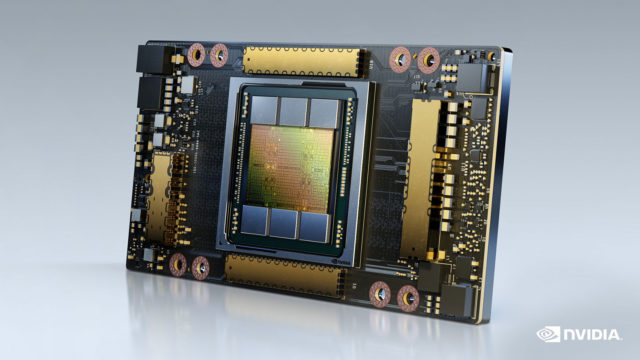

“Achieving state-of-the-art results in HPC and AI research requires building the biggest models, but these demand more memory capacity and bandwidth than ever before,” said Bryan Catanzaro, vice president of applied deep learning research at NVIDIA. “The A100 80 GB GPU provides double the memory of its predecessor, which was introduced just six months ago, and breaks the 2 TB per second barrier, enabling researchers to tackle the world’s most important scientific and big data challenges.”

The NVIDIA A100 80 GB GPU is available in NVIDIA DGX A100 and NVIDIA DGX Station A100 systems, also announced today and expected to ship this quarter.

Leading systems providers Atos, Dell Technologies, Fujitsu, GIGABYTE, Hewlett Packard Enterprise, Inspur, Lenovo, Quanta and Supermicro are expected to begin offering systems built using HGX A100 integrated baseboards in four- or eight-GPU configurations featuring A100 80 GB in the first half of 2021.

Fueling Data-Hungry Workloads

Building on the diverse capabilities of the A100 40 GB, the 80 GB version is ideal for a wide range of applications with enormous data memory requirements.

For AI training, recommender system models like DLRM have massive tables representing billions of users and billions of products. A100 80 GB delivers up to a 3x speedup, so businesses can quickly retrain these models to deliver highly accurate recommendations.

The A100 80 GB also enables training of the largest models, such as GPT-2, a natural language processing model with superhuman generative text capability, with more parameters fitting within a single HGX-powered server. This eliminates the need for data or model parallel architectures that can be time consuming to implement and slow to run across multiple nodes.

With its multi-instance GPU (MIG) technology, A100 can be partitioned into up to seven GPU instances, each with 10 GB of memory. This provides secure hardware isolation and maximizes GPU utilization for a variety of smaller workloads. For AI inferencing of automatic speech recognition models like RNN-T, a single A100 80 GB MIG instance can service much larger batch sizes, delivering 1.25x higher inference throughput in production.

On a big data analytics benchmark for retail in the terabyte-size range, the A100 80 GB boosts performance up to 2x, making it an ideal platform for delivering rapid insights on the largest of datasets. Businesses can make key decisions in real time as data is updated dynamically.

For scientific applications, such as weather forecasting and quantum chemistry, the A100 80 GB can deliver massive acceleration. Quantum Espresso, a materials simulation, achieved throughput gains of nearly 2x with a single node of A100 80 GB.

“Speedy and ample memory bandwidth and capacity are vital to realizing high performance in supercomputing applications,” said Satoshi Matsuoka, director at RIKEN Center for Computational Science. “The NVIDIA A100 with 80 GB of HBM2E GPU memory, providing the world’s fastest 2 TB per second of bandwidth, will help deliver a big boost in application performance.”

Key Features of A100 80 GB

The A100 80 GB includes the many groundbreaking features of the NVIDIA Ampere architecture:

- Third-Generation Tensor Cores: Provide up to 20x AI throughput of the previous Volta generation with a new format TF32, as well as 2.5x FP64 for HPC, 20x…