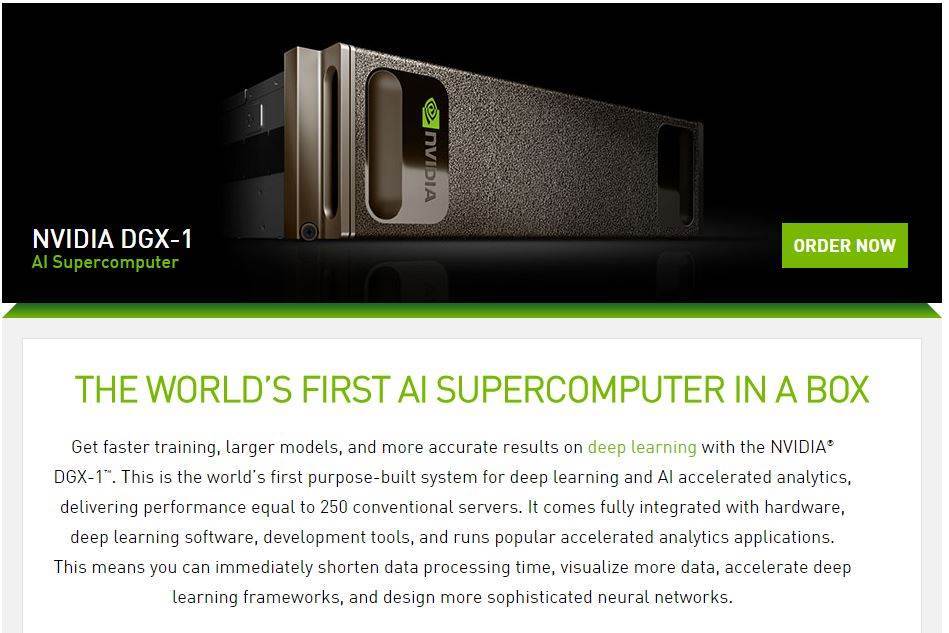

Microsoft and NVIDIA today announced a collaboration to accelerate AI in the enterprise. The companies are introducing an optimized platform that runs the new Microsoft Cognitive Toolkit (formerly CNTK) on NVIDIA GPUs, including the NVIDIA DGX-1 supercomputer, and on Azure N-Series virtual machines, which are currently in preview.

With the first purpose-built enterprise AI framework optimized to run on NVIDIA Tesla GPUs, either in Microsoft Azure or on premises, enterprises now have an AI platform that spans from their data centers to Microsoft’s cloud.

“Every industry has awoken to the potential of AI,” said Jen-Hsun Huang, founder and chief executive officer, NVIDIA. “We’ve worked with Microsoft to create a lightning-fast AI platform that is available from on-premises with our DGX-1™ supercomputer to the Microsoft Azure cloud. With Microsoft’s global reach, every company around the world can now tap the power of AI to transform their business.”

“We’re working hard to empower every organization with AI, so that they can make smarter products and solve some of the world’s most pressing problems,” said Harry Shum, executive vice president of the Artificial Intelligence and Research Group at Microsoft. “By working closely with NVIDIA and harnessing the power of GPU-accelerated systems, we’ve made Cognitive Toolkit and Microsoft Azure the fastest, most versatile AI platform. AI is now within reach of any business.”

NVIDIA and Microsoft worked closely to accelerate the Cognitive Toolkit on GPU-based systems and in the Microsoft Azure cloud, offering startups and major enterprises:

- Greater versatility: The Cognitive Toolkit lets customers use one framework to train models on premises with the NVIDIA DGX-1 or with NVIDIA GPU-based systems, and then run those models in the cloud on Azure. This scalable, hybrid approach lets enterprises rapidly prototype and deploy intelligent features.

- Faster performance: The GPU-accelerated Cognitive Toolkit performs deep learning training and inference much faster on NVIDIA GPUs, whether in Azure N-Series servers or on premises, than it does on CPUs.(1) For example, NVIDIA DGX-1 with Pascal and NVLink interconnect technology runs the Cognitive Toolkit 170x faster than CPU servers.

- Wider availability: Azure N-Series virtual machines powered by NVIDIA GPUs are currently in preview for Azure customers and will be generally available soon. Azure GPUs can accelerate both training and model evaluation. Thousands of customers have already joined the preview, and businesses of all sizes are running workloads on Tesla GPUs in Azure N-Series VMs.

NVIDIA and Microsoft plan to continue their collaboration, optimizing the Cognitive Toolkit for NVIDIA GPUs in Azure and for a hybrid cloud AI platform that connects Azure to NVIDIA DGX-1 systems on premises.