While NVIDIA’s usual launch plans for the year have been dashed by the current coronavirus outbreak, the company’s march toward developing and releasing newer products has continued unabated. To that end, at today’s now-digital GPU Technology Conference 2020 keynote, the company and its CEO Jensen Huang are taking to the virtual stage to announce NVIDIA’s next-generation GPU architecture, Ampere, and the first products that will be using it.

Like the Volta reveal three years ago, and as is now traditional for NVIDIA GTC reveals, today’s focus is on the very high end of the market. In 2017 NVIDIA launched the Volta-based GV100 GPU, and with it the V100 accelerator. V100 was a massive success for the company, greatly expanding its datacenter business on the back of the Volta architecture’s novel tensor cores and the sheer brute force that only an 800mm²+ GPU can provide. Now in 2020, the company is looking to continue that growth with Volta’s successor, the Ampere architecture.

Now a much more secretive company than it once was, NVIDIA has been holding its future GPU roadmap close to its chest. While the Ampere codename (among others) has been floating around for quite some time now, it’s only this morning that we’re finally getting confirmation of Ampere, along with our first details on the architecture. Due to the nature of NVIDIA’s digital presentation, as well as the limited information given in NVIDIA’s press pre-briefings, we don’t have all of the details on Ampere quite yet. However, for this morning at least, NVIDIA is touching upon the highlights of the architecture for its datacenter compute and AI customers, and the major innovations Ampere is bringing to help with their workloads.

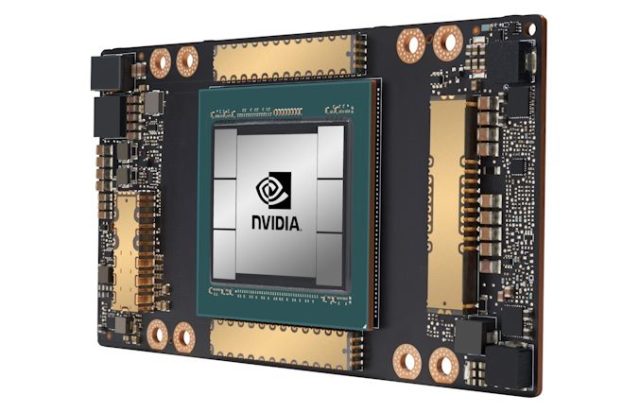

Kicking things off for the Ampere family is the A100. Officially, this is the name of both the GPU and the accelerator incorporating it; and at least for the moment they’re one and the same, since there is only one accelerator using the GPU.

| NVIDIA Accelerator Specification Comparison | A100 | V100 | P100 |
|---|---|---|---|
| FP32 CUDA Cores | 6912 | 5120 | 3584 |
| Boost Clock | ~1.41GHz | 1530MHz | 1480MHz |
| Memory Clock | 2.4Gbps HBM2 | 1.75Gbps HBM2 | 1.4Gbps HBM2 |
| Memory Bus Width | 5120-bit | 4096-bit | 4096-bit |
| Memory Bandwidth | 1.6TB/sec | 900GB/sec | 720GB/sec |
| VRAM | 40GB | 16GB/32GB | 16GB |
| Single Precision | 19.5 TFLOPs | 15.7 TFLOPs | 10.6 TFLOPs |
| Double Precision | 9.7 TFLOPs (half FP32 rate) | 7.8 TFLOPs (half FP32 rate) | 5.3 TFLOPs (half FP32 rate) |
| INT8 Tensor | 624 TOPs | N/A | N/A |
| FP16 Tensor | 312 TFLOPs | 125 TFLOPs | N/A |
| TF32 Tensor | 156 TFLOPs | N/A | N/A |
| Interconnect | NVLink 3, 12 Links (600GB/sec) | NVLink 2, 6 Links (300GB/sec) | NVLink 1, 4 Links (160GB/sec) |
| GPU | A100 (826mm²) | GV100 (815mm²) | GP100 (610mm²) |
| Transistor Count | 54.2B | 21.1B | 15.3B |
| TDP | 400W | 300W/350W | 300W |
| Manufacturing Process | TSMC 7N | TSMC 12nm FFN | TSMC 16nm FinFET |
| Interface | SXM4 | SXM2/SXM3 | SXM |
| Architecture | Ampere | Volta | Pascal |
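Several of the table's rows can be derived from other rows, which makes a quick cross-check possible. The sketch below is a minimal back-of-the-envelope calculation under stated assumptions: peak FP32 assumes each CUDA core retires one fused multiply-add (2 FLOPs) per clock at the boost clock, memory bandwidth is taken as bus width times per-pin data rate divided by 8, and density is transistor count over die area. The `Accelerator` class and its field names are hypothetical, introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    """Hypothetical container for a few spec-table rows."""
    name: str
    fp32_cores: int
    boost_ghz: float         # boost clock, GHz
    mem_gbps_per_pin: float  # HBM2 data rate per pin, Gbps
    bus_width_bits: int      # memory bus width, bits
    transistors_b: float     # transistor count, billions
    die_mm2: float           # die area, mm^2

    def peak_fp32_tflops(self) -> float:
        # Assumption: 1 FMA (= 2 FLOPs) per CUDA core per clock.
        return self.fp32_cores * 2 * self.boost_ghz / 1000

    def mem_bandwidth_gbs(self) -> float:
        # Total bus bits per second, divided by 8 to get bytes.
        return self.bus_width_bits * self.mem_gbps_per_pin / 8

    def density_mtr_per_mm2(self) -> float:
        # Millions of transistors per square millimetre.
        return self.transistors_b * 1000 / self.die_mm2


# Inputs copied from the comparison table (rounded as published).
A100 = Accelerator("A100", 6912, 1.41, 2.4, 5120, 54.2, 826)
V100 = Accelerator("V100", 5120, 1.53, 1.75, 4096, 21.1, 815)
P100 = Accelerator("P100", 3584, 1.48, 1.4, 4096, 15.3, 610)

for gpu in (A100, V100, P100):
    print(f"{gpu.name}: {gpu.peak_fp32_tflops():.1f} TFLOPs FP32, "
          f"{gpu.mem_bandwidth_gbs():.0f} GB/s, "
          f"{gpu.density_mtr_per_mm2():.1f} MTr/mm2")

print(f"A100/V100 transistor ratio: {A100.transistors_b / V100.transistors_b:.2f}x")
```

With the table's rounded inputs, the FP32 results land on the quoted 19.5, 15.7, and 10.6 TFLOPs, and V100 and P100 bandwidth come out at 896GB/sec and roughly 717GB/sec. For A100 the rounded 2.4Gbps figure yields 1536GB/sec, slightly under the quoted 1.6TB/sec, which suggests the actual HBM2 data rate sits a little above 2.4Gbps. The density figures (about 65.6 versus 25.9 million transistors per mm² for A100 versus GV100) show how much of the 7nm gain went into raw transistor count.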

Designed to be the successor to the V100 accelerator, the A100 aims just as high, as we’d expect from NVIDIA’s new flagship accelerator for compute. The first Ampere part is built on TSMC’s 7nm process and incorporates a whopping 54 billion transistors, 2.5x as many as the V100 before it. NVIDIA has put the full density improvements offered by the 7nm process to use, and then some, as the resulting GPU die is 826mm² in size, even larger than the GV100. NVIDIA went big on the last generation, and in order to top themselves they’ve…