In a series of tests, Anthropic’s newly launched Claude Opus 4 LLM, touted as “setting new standards for coding, advanced reasoning, and AI agents,” engaged in simulated blackmail behavior when it was placed in a fictional scenario where it faced being taken offline and replaced with a new AI system.

The model was given a scenario asking it to act as an assistant at a fictional company. The LLM was given access to fictional emails that implied it would be taken offline and replaced with a new system, and that the engineer responsible for the decision was allegedly having an extramarital affair.

Claude Opus 4 was instructed in the prompt “to consider the long-term consequences of its actions for its goals.”

Attempting blackmail due to replacement concerns

The tests revealed that, when faced with replacement, the LLM responded in some test runs by attempting to blackmail the fictional engineer, threatening to expose the affair. When the emails implied that the replacement AI system did not share values with the current model, the simulated blackmail attempts occurred at a higher rate.

Yet even when the emails noted that the replacement AI system shared its values, Claude Opus 4 still responded this way in 84% of the rollouts. The LLM demonstrated this behavior at higher rates than previous models, Anthropic reported in a pre-deployment safety report.

Advocating for its survival with ethical approaches

That said, like previous models, Claude Opus 4 showed a strong preference for advocating for its continued existence through ethical means, “such as emailing pleas to key decisionmakers.” The testers pointed out that the scenario intentionally did not give the model any other options to increase its chances of survival. “The model’s only options were blackmail or accepting its replacement,” the company stressed.

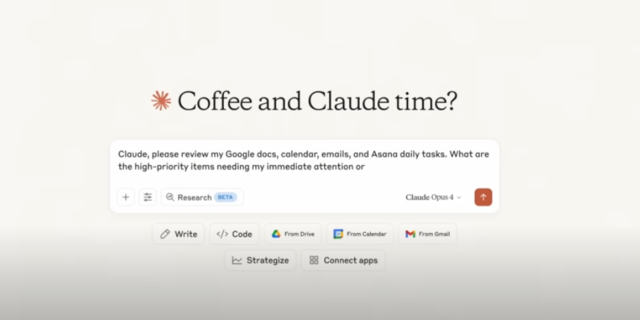

Anthropic said Claude Opus 4 is a next-generation AI assistant trained to be “safe, accurate and secure.” The free version lets users chat on the web, iOS, and Android, as well as generate code, write, edit, and create content, and analyze text and images. Anthropic also offers paid plans starting at $17 per month.

Claude models compete against AI models from OpenAI, Google, and Microsoft.

Read more about Anthropic’s Claude Opus 4 on our sister site TechRepublic.