There has been a strong need for a set of industry-standard machine learning benchmarks, akin to the SPEC benchmarks for CPUs, in order to compare relative solutions. Over the past two years, MLCommons, an open engineering consortium, has been discussing and disclosing its MLPerf benchmarks for training and inference, with key consortium members releasing benchmark numbers as the series of tests gets refined. Today we see the full launch of MLPerf Inference v1.0, along with ~2000 results in the database. Alongside this launch, a new MLPerf Power Measurement technique, which provides additional metadata on these test results, is also being disclosed.

The results today are all focused on inference: the ability of a trained network to process incoming, unseen data. The tests are built around a number of machine learning areas and models that attempt to represent the broader ML market, in the same way that SPEC2017 tries to capture common CPU workloads. For MLPerf Inference, this includes:

- Image Classification with ResNet50-v1.5

- Object Detection with SSD-ResNet34

- Medical Image Segmentation with 3D UNET

- Speech-to-text with RNNT

- Language Processing with BERT

- Recommendation Engines with DLRM

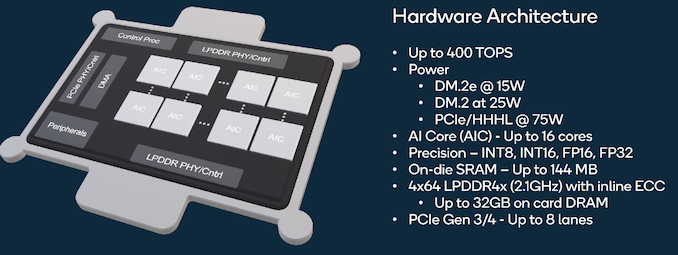

Results can be submitted into a number of categories, such as Datacenter, Edge, Mobile, or Tiny. For Datacenter or Edge, they can also be submitted into the 'closed' category (apples-to-apples, with the same reference frameworks) or the 'open' category (anything goes, peak optimization). The metrics submitted depend on the scenario: single stream, multiple stream, server response, or offline data flow. For those tracking MLPerf's progress, the benchmark set is the same as in v0.7, except that all DRAM must now be ECC and steady state is measured with a minimum 10-minute run. Results must declare which datatypes are used (int8, fp16, bf16, fp32). The benchmarks are designed to run on CPUs, GPUs, FPGAs, or dedicated AI silicon.

The companies that have submitted results to MLPerf so far are a mix of vendors, OEM partners, and MLCommons members, such as Alibaba, Dell, Gigabyte, HPE, Inspur, Intel, Lenovo, NVIDIA, Qualcomm, Supermicro, and Xilinx. Most of these players submit large multi-socket systems and multi-GPU designs, depending on which market they are targeting with the results. For example, Qualcomm has a system result in the datacenter category using two EPYCs and five of its Cloud AI 100 cards, but it has also submitted data to the edge category with an AI development kit featuring a Snapdragon 865 and a version of its Cloud AI hardware.

The largest submitter for this round, Krai, has developed an automated test suite for MLPerf Inference v1.0 and run the benchmark suite across a number of low-cost edge devices such as the Raspberry Pi, NVIDIA's Jetson, and RockChip hardware, both with and without GPU acceleration. As a result, Krai provides over half of all the results (1000+) in today's tranche of data. Compare that to Centaur, which has provided a handful of data points for its upcoming CHA AI coprocessor.

Because not every system has to run every test, there is no combined benchmark number to offer. But by taking one of the data points, we can see the scale of the results submitted so far.

On ResNet50, with 99% accuracy, running an offline dataset:

- Alibaba’s Cloud Sinian Platform (two Xeon 8269CY + 8x A100) scored 1,077,800 samples per second in INT8

- Krai’s Raspberry Pi 4 (1x Cortex-A72) scored 1.99 samples per second in INT8

Obviously, certain hardware would do better at language processing or object detection, and all the data points can be seen on MLCommons' results pages.
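To put the two offline ResNet50 figures above in perspective, the short Python sketch below works through the arithmetic. The per-A100 figure is a hypothetical simplification, not an MLPerf metric: it assumes the whole score is split evenly across the eight GPUs and ignores the host Xeons.

```python
# Offline ResNet50 (99% accuracy, INT8) scores quoted above, in samples per second.
alibaba_sinian = 1_077_800.0  # 2x Xeon 8269CY + 8x A100
krai_rpi4 = 1.99              # Raspberry Pi 4, 1x Cortex-A72

# How many times more throughput the largest system delivers.
ratio = alibaba_sinian / krai_rpi4

# Hypothetical naive split: assumes all throughput comes from the eight A100s
# in equal shares. MLPerf reports only the whole-system number.
per_a100 = alibaba_sinian / 8

print(f"throughput ratio: {ratio:,.0f}x")                     # → throughput ratio: 541,608x
print(f"naive per-A100 throughput: {per_a100:,.0f} samples/s")  # → naive per-A100 throughput: 134,725 samples/s
```

The roughly 540,000x spread is a reminder that the results database spans everything from single-board computers to multi-GPU servers, so comparisons only make sense within a category.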

MLPerf Inference Power

A special approach for…