Microsoft has filed for a continuation of a 2010 multi-device combined screen patent titled “Multi-Device Pairing and Combined Display.”

The new application, dated 26 September 2016, claims:

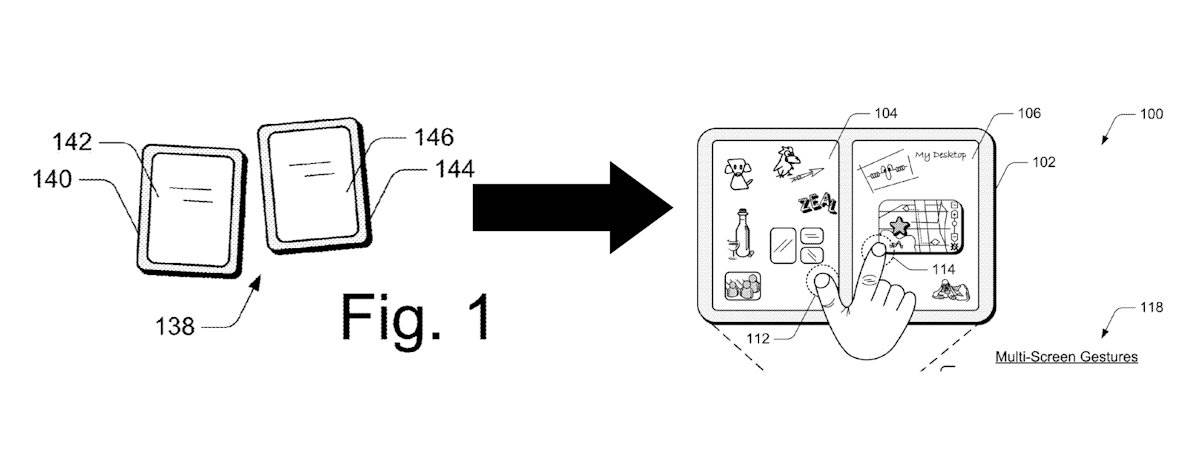

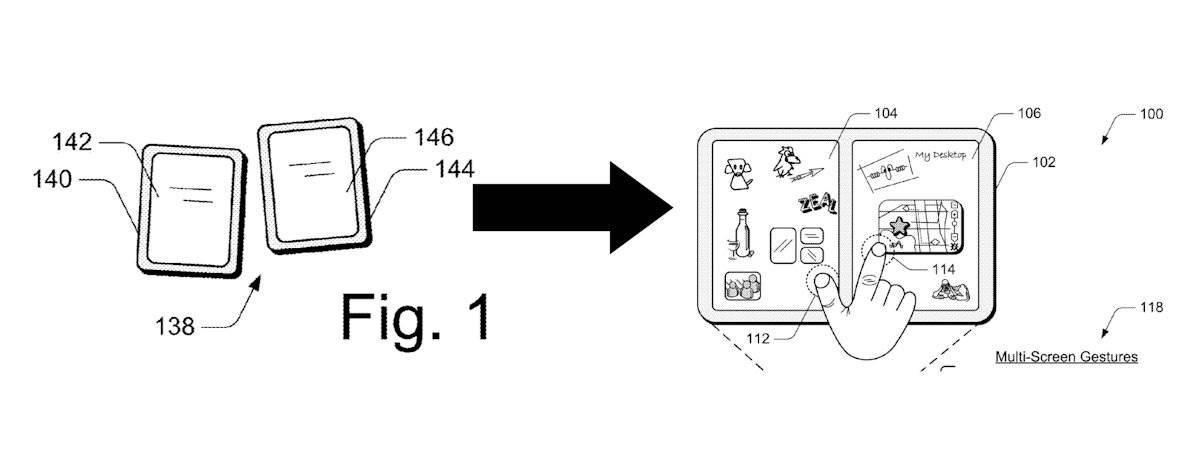

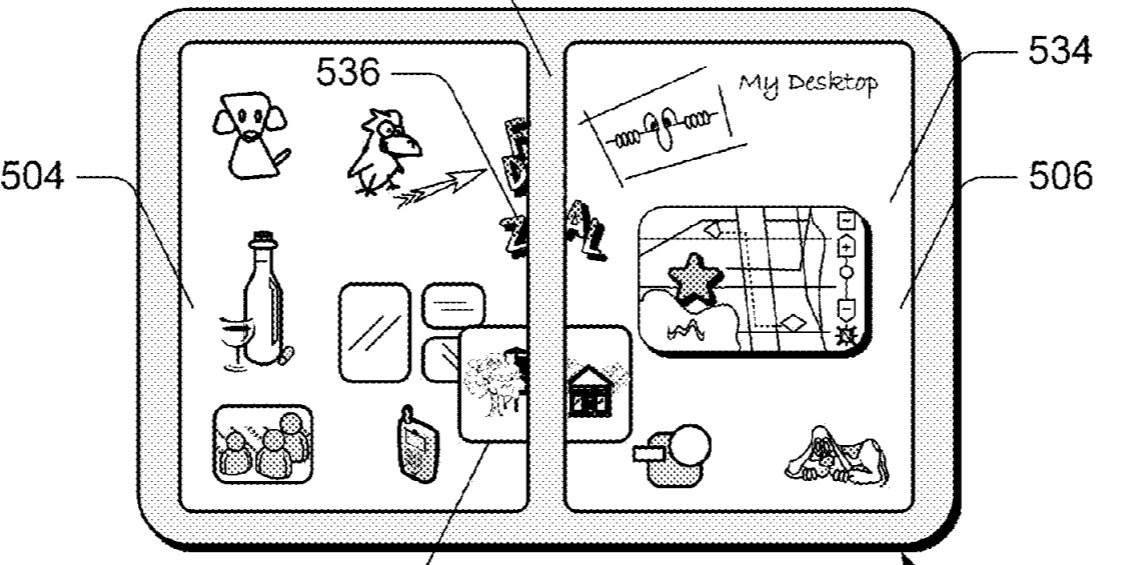

In embodiments of multi-device pairing and combined display, mobile devices have device touchscreens, and housing bezels of the mobile devices can be positioned proximate each other to form a combined display from the respective device touchscreens. An input recognition system can recognize an input to initiate pairing the mobile devices, enabling a cross-screen display of an interface on the respective device touchscreens, such as with different parts of the interface displayed on different ones of the device touchscreens. The input to initiate pairing the mobile devices can be recognized as the proximity of the mobile devices, as a gesture input, as a sensor input, and/or as a voice-activated input to initiate the pairing of the mobile devices for the cross-screen display of the interface.
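The core idea in the abstract is that one interface is split across two touchscreens placed bezel to bezel. As a rough illustration, and not Microsoft's method, the sketch below assumes the devices sit side by side from left to right with equal heights, and treats the bezel gap as a strip of the combined canvas that no device draws. `Screen`, `split_interface` and `locate` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Screen:
    name: str
    width: int   # pixels
    height: int  # pixels


def split_interface(screens, bezel_gap=0):
    """Return (name, x_start, x_end) slices of the combined canvas.

    Assumes screens are arranged left to right; bezel_gap pixels between
    adjacent screens are hidden (not drawn on any device).
    """
    slices = []
    x = 0
    for screen in screens:
        slices.append((screen.name, x, x + screen.width))
        x += screen.width + bezel_gap
    return slices


def locate(global_x, screens, bezel_gap=0):
    """Map an x coordinate on the combined canvas to (screen name, local x).

    Returns None if the coordinate falls in a bezel gap or off the canvas.
    """
    for name, start, end in split_interface(screens, bezel_gap):
        if start <= global_x < end:
            return name, global_x - start
    return None
```

For two 800-pixel-wide phones with a 20-pixel bezel gap, `locate(900, screens, 20)` lands 80 pixels into the second screen, while `locate(810, screens, 20)` falls under the bezels and returns `None`.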

Microsoft imagines users will be able to activate the feature by tapping on each device simultaneously, or on each device in turn in a specific pattern. When paired, the screens would support the same multi-touch gestures as a single screen of the same size. Microsoft also describes specific page-flipping and sliding gestures for a journal app, which suggests it has a specific platform in mind, possibly something like the doomed Courier device.
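Both tap-to-pair variants could be checked with simple timing rules. This is a minimal sketch assuming both devices report tap timestamps in milliseconds on a shared, already synchronised clock; the 150 ms and 500 ms thresholds and the function names are illustrative guesses, not values from the patent.

```python
def is_simultaneous_tap(tap_a_ms, tap_b_ms, window_ms=150):
    """True if taps on two devices are close enough to count as simultaneous."""
    return abs(tap_a_ms - tap_b_ms) <= window_ms


def matches_pattern(events, pattern, max_gap_ms=500):
    """Check whether the most recent taps follow a device pattern in turn.

    events: list of (device_id, timestamp_ms) tuples, in any order.
    pattern: expected device order, e.g. ["A", "B", "A", "B"].
    Each consecutive pair of taps must be at most max_gap_ms apart.
    """
    if len(events) < len(pattern):
        return False
    # Keep only the last len(pattern) taps in time order.
    recent = sorted(events, key=lambda e: e[1])[-len(pattern):]
    if [device for device, _ in recent] != list(pattern):
        return False
    return all(t2 - t1 <= max_gap_ms
               for (_, t1), (_, t2) in zip(recent, recent[1:]))
```

A real implementation would also need to agree on which device is "left" and "right" after pairing; the sketch only decides whether the input qualifies.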

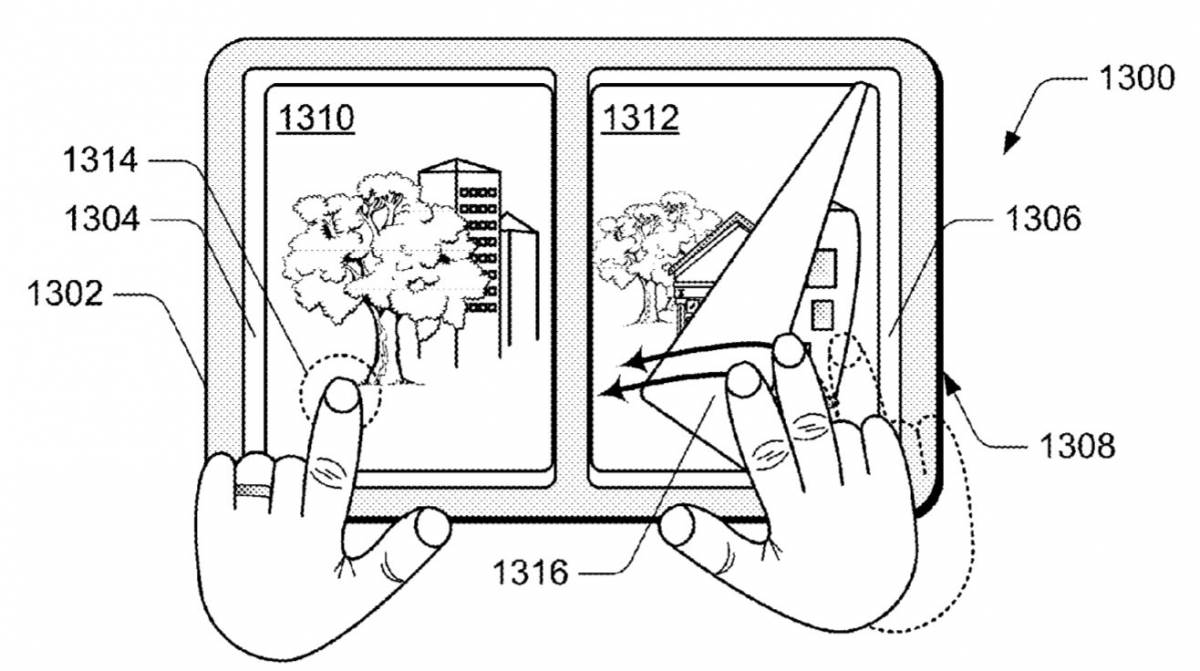

A multi-screen hold and tap gesture can be used to move and/or copy a displayed object from one displayed location to another, such as to move or copy an object onto a journal page, or incorporate the object into a notebook. A multi-screen hold and drag gesture can be used to maintain a display of a first part of a displayed object on one screen and drag a second part of the displayed object that is displayed on another screen to pocket the second part of the displayed object for a split-screen view. Alternatively, a hold and drag gesture can be used to maintain a display of a first part of the displayed object on one screen and drag a pocketed second part of the displayed object to expand the display on another screen.

A multi-screen hold and page-flip gesture can be used to select a journal page that is displayed on one screen and flip journal pages to display two additional or new journal pages, much like flipping pages in a book. The journal pages are flipped in the direction of the selected journal page to display the two new journal pages, much like flipping pages forward or backward in a book. Alternatively, a hold and page-flip gesture can be used to maintain the display of a journal page that is displayed on one screen and flip journal pages to display a different journal page on another screen. Non-consecutive journal pages can then be displayed side by side, which, for a book, would involve tearing a page out of the book to place it in a non-consecutive page order to view it side by side with another page.
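The two page-flip behaviours can be modelled as operations on a pair of page indices, one per screen. This is a minimal sketch with a hypothetical `Journal` class, assuming the left screen starts on page 0, that selecting the right page flips forward and the left page flips backward, and that flips past either end of the journal are simply ignored.

```python
class Journal:
    """Two-screen journal: the left screen shows page `left`, the right shows `right`."""

    def __init__(self, page_count):
        if page_count < 2:
            raise ValueError("need at least two pages")
        self.page_count = page_count
        self.left, self.right = 0, 1

    def hold_and_page_flip(self, selected):
        """Flip a whole spread toward the selected page.

        Selecting the right page flips forward two pages; selecting the left
        page flips backward two pages. Out-of-range flips are ignored.
        """
        step = 2 if selected == "right" else -2
        new_left = self.left + step
        if new_left >= 0 and new_left + 1 < self.page_count:
            self.left, self.right = new_left, new_left + 1
        return self.left, self.right

    def hold_left_and_flip_right(self, pages):
        """Keep the left page held and flip only the right screen by `pages`,
        so non-consecutive pages can sit side by side."""
        new_right = self.right + pages
        if 0 <= new_right < self.page_count and new_right != self.left:
            self.right = new_right
        return self.left, self.right
```

Starting from a ten-page journal, `hold_and_page_flip("right")` shows pages 2 and 3, and from the first spread `hold_left_and_flip_right(4)` pairs page 0 with page 5 without "tearing" anything out.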

A multi-screen bookmark hold gesture can be used to bookmark a journal page at a location of a hold input to the journal page on a screen, and additional journal pages can be flipped for viewing while the bookmark is maintained for the journal page. A bookmark hold gesture mimics the action of a reader holding a thumb or finger between pages to save a place in a book while flipping through other pages of the book. Additionally, a bookmark is a selectable link back to the journal page, and a selection input of the bookmark flips back to display the journal page on the screen. A multi-screen object-hold and page-change gesture can be used to move and/or copy a displayed object from one display location to another, such as to incorporate a displayed object for display on a journal page. Additionally, a relative display position can be maintained when a displayed object is moved or copied from one display location to another.
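The bookmark hold gesture amounts to remembering one page index while the reader flips elsewhere. This is a minimal single-page sketch; `BookmarkedJournal` and its methods are hypothetical, and the patent does not say how a bookmark would actually be stored.

```python
class BookmarkedJournal:
    """Single-screen view of a journal with one hold-created bookmark."""

    def __init__(self, page_count):
        self.page_count = page_count
        self.current = 0
        self.bookmark = None  # page index saved by a hold input, if any

    def hold(self):
        """A hold input on the displayed page bookmarks it (the thumb between pages)."""
        self.bookmark = self.current

    def flip(self, pages):
        """Flip forward (positive) or backward (negative), clamped to the journal.

        The bookmark is left untouched while flipping.
        """
        self.current = max(0, min(self.page_count - 1, self.current + pages))
        return self.current

    def select_bookmark(self):
        """Selecting the bookmark flips back to the bookmarked page, if one exists."""
        if self.bookmark is not None:
            self.current = self.bookmark
        return self.current
```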

A multi-screen synchronous slide gesture can be used to move a displayed object from one screen for display on another screen, replace displayed objects on the device screens with different displayed objects, move displayed objects to reveal a workspace on the device screens, and/or cycle through one or more workspaces (e.g., applications, interfaces, etc.) that are displayed on the system or device screens. A synchronous slide gesture may also be used to navigate to additional views, or reassign a current view to a different screen. Additionally, different applications or workspaces can be kept on a stack and cycled through, forward and back, with synchronous slide gestures.
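Cycling through workspaces kept "on a stack", forward and back, maps naturally onto a rotating double-ended queue. This sketch assumes the first two entries are the workspaces shown on the left and right screens, and uses Python's `collections.deque.rotate`; `WorkspaceStack` is a hypothetical name, not part of any Microsoft API.

```python
from collections import deque


class WorkspaceStack:
    """Workspaces cycled across two screens by synchronous slide gestures."""

    def __init__(self, workspaces):
        if len(workspaces) < 2:
            raise ValueError("need at least two workspaces")
        self._stack = deque(workspaces)

    def visible(self):
        """The first two workspaces are shown on the left and right screens."""
        return self._stack[0], self._stack[1]

    def synchronous_slide(self, direction):
        """Slide both screens together.

        direction=+1 advances one workspace (the right screen's workspace
        moves to the left screen); direction=-1 cycles back.
        """
        # rotate(-1) moves the front element to the back of the deque.
        self._stack.rotate(-direction)
        return self.visible()
```

Using a deque keeps forward and backward cycling symmetric, and the stack wraps around so the last workspace slides back onto the first.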

Microsoft notes that the illustrated environment includes an example of a computing device that may be configured in a variety of ways, such as any type of multi-screen computer or device. For example, the computing device may be configured as a computer (e.g., a laptop computer, notebook computer, tablet PC, tabletop computer, and so on), a mobile station, an entertainment appliance, or a gaming device.

Interestingly, Microsoft says the idea is platform-independent and could be implemented across a number of different platforms, using the cloud to synchronise, raising the spectre of using an iPhone and an Android device together to give your PowerPoint presentation.

With Microsoft these days seemingly working to make its most interesting ideas a reality, we may eventually see this patent become part of a game-changing application from the company. The full patent can be seen -.
