Meta has released a new collection of AI models, Llama 4, in its Llama family. It did so on a Saturday, no less.

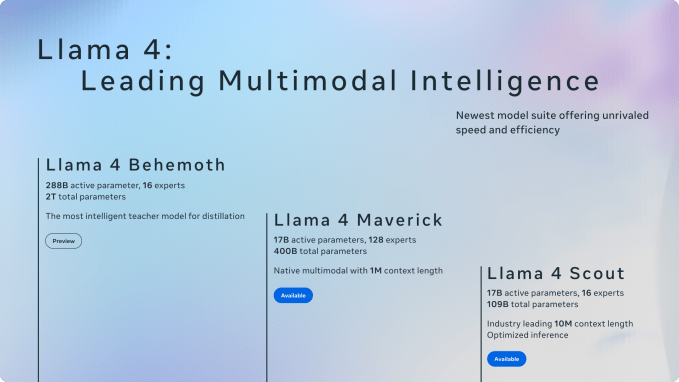

There are three new models in total: Llama 4 Scout, Llama 4 Maverick, and Llama 4 Behemoth. All were trained on “large amounts of unlabeled text, image, and video data” to give them “broad visual understanding,” Meta says.

The success of open models from Chinese AI lab DeepSeek, which perform on par with or better than Meta’s previous flagship Llama models, reportedly kicked Llama development into overdrive. Meta is said to have scrambled war rooms to decipher how DeepSeek lowered the cost of running and deploying models like R1 and V3.

Scout and Maverick are openly available on Llama.com and from Meta’s partners, including the AI dev platform Hugging Face, while Behemoth is still in training. Meta says that Meta AI, its AI-powered assistant across apps including WhatsApp, Messenger, and Instagram, has been updated to use Llama 4 in 40 countries. Multimodal features are limited to the U.S. in English for now.

Some developers may take issue with the Llama 4 license.

Users and companies “domiciled” or with a “principal place of business” in the EU are prohibited from using or distributing the models, likely the result of governance requirements imposed by the region’s AI and data privacy laws. (In the past, Meta has decried these laws as overly burdensome.) In addition, as with previous Llama releases, companies with more than 700 million monthly active users must request a special license from Meta, which Meta can grant or deny at its sole discretion.

“These Llama 4 models mark the beginning of a new era for the Llama ecosystem,” Meta wrote in a blog post. “This is just the beginning for the Llama 4 collection.”

Meta says that Llama 4 is its first cohort of models to use a mixture of experts (MoE) architecture, which is more computationally efficient for training and answering queries. MoE architectures essentially break down data processing tasks into subtasks and then delegate them to smaller, specialized “expert” models.
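To make the routing idea concrete, here is a minimal, toy sketch of a mixture-of-experts layer in Python. It is not Meta’s implementation: the router weights, the four “experts” (simple scaling functions), and the `top_k` setting are all hypothetical, chosen only to show how a router scores experts, activates the best few, and blends their outputs.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(
    token: Vector,
    router_weights: List[Vector],
    experts: List[Expert],
    top_k: int = 1,
) -> Tuple[Vector, List[int]]:
    """Route one token to its top_k experts and mix their outputs.

    Toy illustration only: real MoE layers use learned weight matrices,
    batch many tokens at once, and add load-balancing tricks.
    """
    # 1. The router gives every expert a relevance score for this token.
    scores = [dot(token, w) for w in router_weights]
    # 2. Keep only the top_k highest-scoring experts; the rest stay idle.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # 3. Turn the chosen experts' scores into mixing weights that sum to 1.
    gates = softmax([scores[i] for i in chosen])
    # 4. Run only the chosen experts and blend their outputs.
    output = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        expert_out = experts[i](token)
        output = [o + gate * v for o, v in zip(output, expert_out)]
    return output, chosen


# Hypothetical setup: 4 "experts" that each scale their input differently.
experts: List[Expert] = [lambda v, s=s: [s * x for x in v] for s in (2.0, 3.0, 4.0, 5.0)]
router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]

out, used = moe_layer([1.0, 0.0], router_weights, experts, top_k=1)
print(used, out)  # → [0] [2.0, 0.0]  (only expert 0 runs)
```

Because only the chosen experts run for each token, the compute spent per token tracks the active experts rather than the model’s full parameter count, which is the efficiency gain MoE designs aim for.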

Maverick, for example, has 400 billion total parameters, but only 17 billion active parameters across 128 “experts.” (Parameters roughly correspond to a model’s problem-solving skills.) Scout has 17 billion active parameters, 16 experts, and 109 billion total parameters.
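The gap between total and active parameters is easy to quantify. The short Python sketch below uses only the figures Meta gives for Scout and Maverick; the helper name `active_share` is purely illustrative.

```python
# Parameter counts in billions, as reported by Meta.
MODELS = {
    "Llama 4 Scout": {"active_b": 17, "total_b": 109, "experts": 16},
    "Llama 4 Maverick": {"active_b": 17, "total_b": 400, "experts": 128},
}


def active_share(active_b: float, total_b: float) -> float:
    """Fraction of the model's parameters used to process a single token."""
    return active_b / total_b


for name, spec in MODELS.items():
    share = active_share(spec["active_b"], spec["total_b"])
    idle_b = spec["total_b"] - spec["active_b"]
    print(f"{name}: {share:.1%} of parameters active per token, "
          f"{idle_b} billion idle, {spec['experts']} experts")
```

By this arithmetic, Scout uses about 15.6% of its weights for each token and Maverick about 4.25%. Since both activate 17 billion parameters, Maverick can be nearly four times larger than Scout while costing roughly similar compute per token.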

According to Meta’s internal testing, Maverick, which the company says is best for “general assistant and chat” use cases like creative writing, exceeds models such as OpenAI’s GPT-4o and Google’s Gemini 2.0 on certain coding, reasoning, multilingual, long-context, and image benchmarks. However, Maverick doesn’t quite measure up to more capable recent models like Google’s Gemini 2.5 Pro, Anthropic’s Claude 3.7 Sonnet, and OpenAI’s GPT-4.5.

Scout’s strengths lie in tasks like document summarization and reasoning over large codebases. Uniquely, it has a very large context window: 10 million tokens. (“Tokens” represent bits of raw text; for example, the word “fantastic” split into “fan,” “tas,” and “tic.”) In plain English, Scout can take in images and up to millions of words, allowing it to process and work with extremely lengthy documents.
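The “fan,” “tas,” “tic” example can be reproduced with a toy tokenizer. This Python sketch is an illustration under loud assumptions, not the tokenizer Llama 4 uses: it greedily matches the longest piece found in a tiny, made-up vocabulary (`TOY_VOCAB`), whereas production tokenizers learn vocabularies of many thousands of pieces from data. The hypothetical `fits_in_context` helper simply compares a token count against Scout’s stated 10-million-token window.

```python
from typing import List, Set

# Hypothetical miniature vocabulary; real tokenizers learn much larger ones.
TOY_VOCAB: Set[str] = {"fan", "tas", "tic", "a", "c", "f", "i", "n", "s", "t", " "}
UNKNOWN = "<unk>"
SCOUT_CONTEXT_TOKENS = 10_000_000  # context window Meta cites for Scout


def tokenize(text: str, vocab: Set[str]) -> List[str]:
    """Greedy longest-match tokenization against a fixed vocabulary."""
    longest = max(len(piece) for piece in vocab)
    tokens: List[str] = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking until a vocab hit.
        for j in range(min(len(text), i + longest), i, -1):
            if text[i:j] in vocab:
                tokens.append(text[i:j])
                i = j
                break
        else:
            # No piece matched this character: emit a placeholder token.
            tokens.append(UNKNOWN)
            i += 1
    return tokens


def fits_in_context(tokens: List[str], window: int = SCOUT_CONTEXT_TOKENS) -> bool:
    """True if the token sequence fits within the model's context window."""
    return len(tokens) <= window


print(tokenize("fantastic", TOY_VOCAB))  # → ['fan', 'tas', 'tic']
```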

Scout can run on a single Nvidia H100 GPU, while Maverick requires an Nvidia H100 DGX system or equivalent, according to Meta’s calculations.
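A back-of-the-envelope calculation helps explain the hardware split. Even though only 17 billion parameters are active per token, all of a model’s weights generally have to sit in GPU memory, so the total parameter count drives memory needs. The Python sketch below counts weights only (ignoring activations and the context cache) and assumes 80 GB per H100 and eight H100s in a DGX system; the numeric precisions shown are illustrative, since the article doesn’t say which ones Meta assumed.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}

# Assumptions: 80 GB H100 variant; a DGX H100 system holds 8 of them.
H100_MEMORY_GB = 80
DGX_H100_MEMORY_GB = 8 * H100_MEMORY_GB


def weight_memory_gb(total_params_b: float, precision: str) -> float:
    """Approximate weight storage: billions of params x bytes/param = GB."""
    return total_params_b * BYTES_PER_PARAM[precision]


def fits(total_params_b: float, precision: str, budget_gb: float) -> bool:
    """Weights-only check; real deployments also need room for activations and caches."""
    return weight_memory_gb(total_params_b, precision) <= budget_gb


for name, total_b, budget, label in [
    ("Scout", 109, H100_MEMORY_GB, "one H100"),
    ("Maverick", 400, DGX_H100_MEMORY_GB, "one DGX H100"),
]:
    for precision in BYTES_PER_PARAM:
        need = weight_memory_gb(total_b, precision)
        verdict = "fits" if fits(total_b, precision, budget) else "does not fit"
        print(f"{name} @ {precision}: ~{need:g} GB of weights, {verdict} on {label}")
```

Under these assumptions, Scout’s 109 billion parameters need roughly 218 GB at 16-bit precision but about 55 GB at 4-bit, which is how a model of that size could squeeze onto a single 80 GB GPU. Maverick’s 400 billion parameters exceed one GPU at any of these precisions but fit in a 640 GB DGX system at 8-bit.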

Meta’s unreleased Behemoth will need even beefier hardware. According to the company, Behemoth has 288 billion active parameters, 16 experts, and nearly two trillion total parameters. Meta’s internal benchmarking has Behemoth outperforming GPT-4.5, Claude 3.7 Sonnet, and Gemini 2.0 Pro (but not 2.5 Pro) on several evaluations measuring STEM skills like math problem solving.

Of note, none of the Llama 4 models is a proper “reasoning” model along the lines of OpenAI’s o1 and o3-mini. Reasoning models fact-check their answers and…