In classic science-fiction films, AI was often portrayed as towering computer systems or massive servers. Today, it is an everyday technology, instantly accessible on the devices people hold in their hands. Samsung Electronics is expanding the use of on-device AI across products such as smartphones and home appliances, enabling AI to run locally without external servers or the cloud for faster, more secure experiences.

Unlike server-based systems, on-device environments operate under strict memory and computing constraints. As a result, reducing AI model size and maximizing runtime efficiency are essential. To meet this challenge, Samsung Research AI Center is leading work across core technologies, from model compression and runtime software optimization to new architecture development.

Samsung Newsroom sat down with Dr. MyungJoo Ham, Master at AI Center, Samsung Research, to discuss the future of on-device AI and the optimization technologies that make it possible.

The First Step Toward On-Device AI

At the heart of generative AI, which interprets user language and produces natural responses, are large language models (LLMs). The first step in enabling on-device AI is compressing and optimizing these massive models so that they run smoothly on devices such as smartphones.

“Running a highly advanced model that performs billions of computations directly on a smartphone or laptop would quickly drain the battery, increase heat and slow response times — noticeably degrading the user experience,” stated Dr. Ham. “Model compression technology emerged to address these issues.”

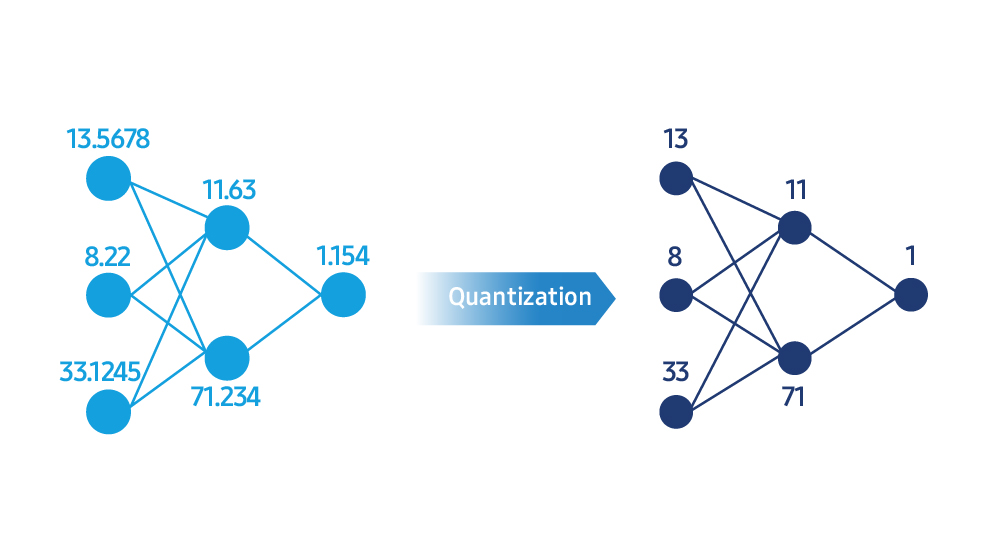

LLMs perform calculations using extremely complex numerical representations. Model compression simplifies these values into more efficient integer formats through a process called quantization. “It’s like compressing a high-resolution photo so the file size shrinks but the visual quality remains nearly the same,” he explained. “For instance, converting 32-bit floating-point calculations to 8-bit or even 4-bit integers significantly reduces memory use and computational load, speeding up response times.”
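To make the idea concrete, here is a minimal sketch of symmetric integer quantization in plain Python. It is an illustration only and not Samsung Research's method: the function names, the sample weights, and the choice of a single shared scale per list are all assumptions made for this example.

```python
def quantize_symmetric(values, num_bits=8):
    """Map floats to signed integers of the given width using one shared scale.

    Hypothetical helper for illustration; real toolchains typically use
    per-channel or per-group scales and calibrated ranges.
    """
    qmax = 2 ** (num_bits - 1) - 1            # 127 for 8-bit, 7 for 4-bit
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the representable range.
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers and the scale."""
    return [q * scale for q in quantized]


def max_error(original, restored):
    """Largest absolute difference between original and restored values."""
    return max(abs(a - b) for a, b in zip(original, restored))


# Made-up example weights (stored as 32-bit floats in a typical model).
weights = [0.82, -1.27, 0.05, 0.40, -0.63]

q8, s8 = quantize_symmetric(weights, num_bits=8)
q4, s4 = quantize_symmetric(weights, num_bits=4)

err8 = max_error(weights, dequantize(q8, s8))
err4 = max_error(weights, dequantize(q4, s4))

# Storage per weight drops from 32 bits to 8 or 4 bits,
# at the cost of a rounding error of at most half a step.
print("8-bit:", q8, "max error:", err8)
print("4-bit:", q4, "max error:", err4)
```

Going from 32 bits to 8 bits per weight cuts storage by a factor of four, and 4 bits by a factor of eight. The coarser 4-bit grid produces a larger rounding error, which is the accuracy trade-off that quantization has to manage.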

A drop in numerical precision during quantization can reduce a model’s overall accuracy. To balance speed and model quality, Samsung Research is developing algorithms and tools that closely measure and calibrate performance after compression.

“The goal of model compression isn’t just to make the model smaller — it’s to keep it fast and accurate,” Dr. Ham stated. “Using optimization algorithms, we analyze the model’s loss function during compression and retrain it until its outputs stay close to the original, smoothing out areas with large errors. Because each model weight has a different level of importance, we preserve critical weights with higher precision while compressing less important ones more aggressively. This approach maximizes efficiency without compromising accuracy.”
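The idea of keeping critical weights at higher precision can be sketched as a simple mixed-precision scheme. The interview does not say how importance is measured or how retraining is performed, so this example makes its own assumptions: importance scores are supplied by the caller (weight magnitude is used here as a stand-in), the most important 25% of weights are kept at 8 bits, the rest are stored at 4 bits, and no retraining step is shown. All names are hypothetical.

```python
def fake_quantize(values, num_bits):
    """Quantize to signed integers with one shared scale, then dequantize.

    Returns the float values the model would actually see after compression.
    """
    qmax = 2 ** (num_bits - 1) - 1
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [round(v / scale) * scale for v in values]


def mixed_precision(weights, importance, keep_fraction=0.25,
                    high_bits=8, low_bits=4):
    """Quantize the most important weights at high_bits and the rest at low_bits."""
    n_keep = max(1, int(len(weights) * keep_fraction))
    ranked = sorted(range(len(weights)),
                    key=lambda i: importance[i], reverse=True)
    critical = set(ranked[:n_keep])

    hi_idx = [i for i in range(len(weights)) if i in critical]
    lo_idx = [i for i in range(len(weights)) if i not in critical]

    hi_vals = fake_quantize([weights[i] for i in hi_idx], high_bits)
    lo_vals = fake_quantize([weights[i] for i in lo_idx], low_bits)

    restored = [0.0] * len(weights)
    for i, v in zip(hi_idx, hi_vals):
        restored[i] = v
    for i, v in zip(lo_idx, lo_vals):
        restored[i] = v
    return restored, critical


# Made-up weights; magnitude is used as an assumed importance score.
weights = [0.82, -1.27, 0.05, 0.40, -0.63, 0.11, -0.09, 0.95]
importance = [abs(w) for w in weights]

restored, critical = mixed_precision(weights, importance)
errors = [abs(w - r) for w, r in zip(weights, restored)]

for i, (w, r) in enumerate(zip(weights, restored)):
    bits = 8 if i in critical else 4
    print(f"weight {i}: {w:+.2f} -> {r:+.4f} ({bits}-bit)")
```

In a production pipeline the importance score would more plausibly come from the loss function, for example from how much the output error grows when a weight is perturbed, and the compressed model would then be fine-tuned as Dr. Ham describes.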

Beyond developing model compression technology at the prototype stage, Samsung Research adapts and commercializes it for real-world products such as smartphones and home appliances. “Because every device model has its own memory architecture and computing profile, a general approach can’t deliver cloud-level AI performance,” he stated. “Through product-driven research, we’re designing our own compression algorithms to enhance AI experiences users can feel directly in their hands.”

The Hidden Engine That Drives AI Performance

Even with a well-compressed model, the user experience ultimately depends on how it runs on the device. Samsung Research is developing an AI runtime engine that optimizes how a device’s memory and computing resources are used during execution.

“The AI runtime is essentially the model’s engine control unit,” Dr….

