Following an exclusive report from SemiAccurate, confirmed by Intel to ServeTheHome, the news on the wire is that Intel is set to cancel general availability of its Cooper Lake line of 14nm Xeon Scalable processors. The company will only make the hardware available to priority scale-out customers who have already designed quad-socket and eight-socket platforms around it. This is a sizeable blow to Intel's enterprise plans, putting the weight of Intel's future x86 enterprise CPU business solely on the shoulders of its 10nm Ice Lake Xeon, which has already seen significant multi-quarter delays from its initial release schedule.

Intel’s Roadmaps Gone Awry

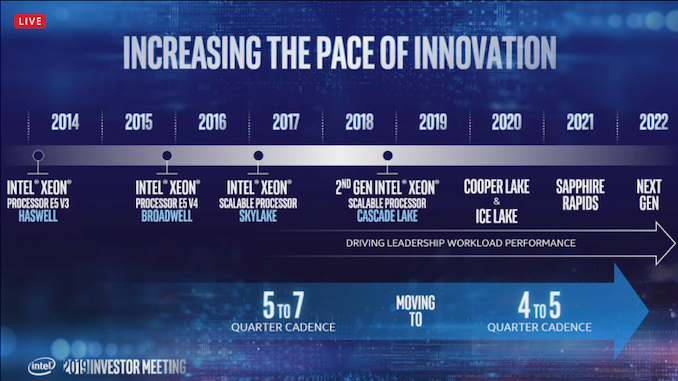

In August 2018, Intel held a Data-Centric Innovation Summit, where the company laid out its plans for the Xeon CPU roadmap. Fresh off the hires of Raja Koduri and Jim Keller in the preceding months, the company was keen to present Intel as focused on the 'data-centric' part of the business rather than just being 'PC-centric'. This meant going after more than just the CPU market: IoT, networking, FPGA, AI, and general 'workload-optimized' solutions were also in scope. Even with all of the messaging, it was clear that Intel's high market share in the traditional x86 server business was a key part of its current revenue stream, and the company spent a lot of time talking about the CPU roadmap.

At the time, the event marked the first anniversary of Skylake Xeon, which launched in mid-2017 (the consumer parts arrived in Q3 2015). The roadmap, as laid out at this event, was to launch Cascade Lake 2nd Generation Xeon Scalable by the end of Q4 2018 on 14nm, Cooper Lake 3rd Gen Xeon Scalable in 2019 on 14nm, and Ice Lake 4th Gen Xeon Scalable in 2020 on 10nm.

Cascade Lake eventually hit the shelves in April 2019, and took a while to filter down to the rest of the market.

Cooper Lake, on the other hand, was added to the roadmap rather late in the 14nm product cycle. Given Intel's well-known 10nm delays, the company decided to add another range of products between Cascade Lake and Ice Lake, with the key new feature being support for bfloat16 instructions inside the AVX-512 vector units.

Why is BF16 Important?

The bfloat16 standard is a targeted way of representing numbers that gives the range of a full 32-bit float in the storage size of a 16-bit number, keeping fine resolution close to zero but being looser with accuracy near the limits of the format. bfloat16 has many uses inside machine learning algorithms: compared with a standard 16-bit float, it keeps values inside the algorithm accurate across a much wider range, while compared with a 32-bit float it fits double the data into any given dataset (or doubles the speed of those calculation sections).
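To put numbers on the range-versus-accuracy trade-off, the sketch below computes the largest finite value and the machine epsilon (the gap between 1.0 and the next representable value) for an IEEE-style binary format from its exponent and fraction widths. The helper name `format_limits` is hypothetical, and the calculation assumes the usual IEEE 754 layout in which the all-ones exponent is reserved for infinity and NaN.

```python
def format_limits(exp_bits: int, frac_bits: int) -> tuple[float, float]:
    """Return (largest finite value, machine epsilon) for an IEEE-style binary format.

    Assumes IEEE 754 conventions: a biased exponent whose all-ones pattern is
    reserved for infinity/NaN, and an implicit leading 1 on normal numbers.
    """
    bias = 2 ** (exp_bits - 1) - 1
    # The largest usable unbiased exponent equals the bias.
    largest = (2 - 2.0 ** -frac_bits) * 2.0 ** bias
    # Spacing between 1.0 and the next representable number.
    epsilon = 2.0 ** -frac_bits
    return largest, epsilon


for name, e, f in [("float32", 8, 23), ("float16", 5, 10), ("bfloat16", 8, 7)]:
    largest, eps = format_limits(e, f)
    print(f"{name:9s} max={largest:.4g} epsilon={eps:.3g}")
```

Because bfloat16 keeps float32's eight exponent bits, its maximum lands at roughly 3.39e38 against float32's 3.40e38, while IEEE half precision tops out at 65504; the price is an epsilon of 2^-7 instead of 2^-23.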

A standard float has its bits split into the sign, the exponent, and the fraction. For normal numbers, the value is given as:

$$\text{value} = (-1)^{\text{sign}} \times (1 + \text{fraction}) \times 2^{\text{exponent}}$$
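As a minimal sketch of the formula above, the function below pulls the three fields out of a 32-bit float with Python's `struct` module and rebuilds the value. In the stored encoding the exponent field is biased (by 127 for float32), so the code subtracts the bias to get the exponent used in the formula; the function name `decode_float32` is hypothetical, and subnormals, infinities and NaN are deliberately not handled.

```python
import struct


def decode_float32(x: float) -> tuple[int, int, int, float]:
    """Split a float32 into sign, biased exponent and fraction fields, then rebuild it.

    Only valid for normal numbers (exponent field not 0 or 255).
    """
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31
    exponent = (bits >> 23) & 0xFF   # 8-bit biased exponent field
    fraction = bits & 0x7FFFFF       # 23-bit fraction field
    bias = 127
    # Apply (-1)^sign * (1 + fraction) * 2^(exponent - bias).
    value = (-1) ** sign * (1 + fraction / 2**23) * 2.0 ** (exponent - bias)
    return sign, exponent, fraction, value


print(decode_float32(-6.5))  # (1, 129, 5242880, -6.5)
```

Here -6.5 is stored as -1.625 × 2^2: a sign bit of 1, a biased exponent of 129 (2 + 127), and a fraction of 0.625 × 2^23.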

For a standard IEEE 754 half-precision (16-bit) number, there is one bit for the sign, five bits for the exponent, and ten bits for the fraction. The idea is that this gives a good mix of precision for fractional numbers while still offering numbers large enough to work with.
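The limits of that five-bit exponent are easy to hit. Python's `struct` module supports IEEE 754 half precision through its `e` format code, so a round trip through it shows both the rounding and the overflow behaviour; the helper name `to_float16` is hypothetical.

```python
import struct


def to_float16(x: float) -> float:
    """Round a Python float to IEEE 754 half precision and back (struct 'e' format)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


print(to_float16(65504.0))  # largest finite half-precision value survives intact
print(to_float16(3.14159))  # 3.140625: only about three decimal digits remain

try:
    to_float16(70000.0)  # beyond the half-precision range
except OverflowError as err:
    print("float16 overflow:", err)
```

A value of 70,000 is trivial for a 32-bit float, but it cannot be packed as a half-precision number at all, which is exactly the range problem bfloat16 is designed to avoid.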

What bfloat16 does is use one bit for the sign, eight bits for the exponent, and seven bits for the fraction. This data type is meant to give 32-bit style ranges, but with reduced accuracy in the fraction. Because machine learning is tolerant of this loss of precision, workloads that would have used a 32-bit float can now use a 16-bit bfloat16 instead.
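Since bfloat16 shares float32's sign and exponent layout, a float32 can be converted by keeping the top 16 bits of its bit pattern. The sketch below does this in software with round-to-nearest-even on the discarded low bits, a common emulation approach; it is not a description of how Cooper Lake's hardware performs the conversion. The function names are hypothetical, NaN inputs are not handled, and values outside the float32 range raise `OverflowError` from `struct`.

```python
import struct


def float_to_bf16_bits(x: float) -> int:
    """Convert to float32, then keep the top 16 bits, rounding to nearest-even.

    NaN is not handled specially in this sketch.
    """
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    # Add 0x7FFF plus the lowest kept bit so exact ties round to an even result.
    rounding = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding) >> 16) & 0xFFFF


def bf16_bits_to_float(b: int) -> float:
    """Widen a bfloat16 bit pattern back to float32 by appending 16 zero bits."""
    return struct.unpack(">f", struct.pack(">I", b << 16))[0]


print(bf16_bits_to_float(float_to_bf16_bits(3.14159)))  # 3.140625
print(bf16_bits_to_float(float_to_bf16_bits(1e20)))     # close to 1e20, unlike float16
```

Widening back to float32 is just a 16-bit shift, which is part of what makes the format cheap to mix with existing 32-bit code paths.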

These can be represented as:

Data Type Representations

| Type | Bits | Exponent | Fraction | Precision | Range | Speed |
| --- | --- | --- | --- | --- | --- | --- |
| float32 | 32 | 8 | 23 | High | High | Slow |