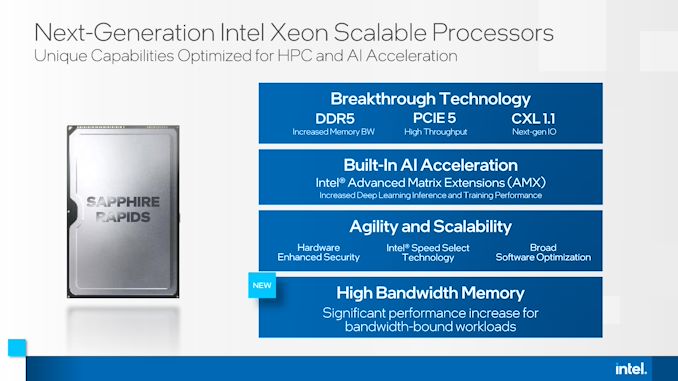

As part of today's International Supercomputing 2021 (ISC) announcements, Intel is showcasing that it will be launching a version of its upcoming Sapphire Rapids (SPR) Xeon Scalable processor with high-bandwidth memory (HBM). This version, SPR-HBM, will come later in 2022, after the main launch of Sapphire Rapids, and Intel has stated that it will be part of its general availability offering to all, rather than a vendor-specific implementation.

Hitting a Memory Bandwidth Limit

As core counts have increased in the server processor space, the designers of these processors have to ensure that there is enough data for the cores to enable peak performance. This means developing large, fast caches per core so that enough data is close by at high speed, building high-bandwidth interconnects inside the processor to shuttle data around, and providing enough main memory bandwidth from data stores located off the processor.

Our Ice Lake Xeon Review system with 32 DDR4-3200 Slots

Here at AnandTech, we have been asking processor vendors about this last point, about main memory, for a while. There is only so much bandwidth that can be achieved by continually adding DDR4 (and soon to be DDR5) memory channels. Current eight-channel DDR4-3200 memory designs, for example, have a theoretical maximum of 204.8 gigabytes per second, which pales in comparison to GPUs, which quote 1000 gigabytes per second or more. GPUs are able to achieve higher bandwidths because they use GDDR, soldered onto the board, which allows for tighter tolerances at the expense of a modular design. Very few main processors for servers have ever had main memory integrated at such a level.
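For readers who want to check the arithmetic behind that 204.8 gigabytes per second figure, here is a minimal sketch. It assumes the standard 64-bit (8-byte) data bus per DDR4 channel and decimal gigabytes; the function name `peak_bandwidth_gb_s` is our own illustration rather than anything from Intel.

```python
def peak_bandwidth_gb_s(channels, mega_transfers_per_s, bus_width_bits=64):
    """Theoretical peak memory bandwidth in GB/s (decimal gigabytes).

    Assumption: every channel moves bus_width_bits per transfer, which is
    64 bits for a standard DDR4 channel (ECC bits not counted).
    """
    bytes_per_transfer = bus_width_bits / 8
    # MT/s * bytes per transfer gives MB/s; divide by 1000 for GB/s.
    return channels * mega_transfers_per_s * bytes_per_transfer / 1000


# Eight channels of DDR4-3200, as in the example above.
ddr4_eight_channel = peak_bandwidth_gb_s(channels=8, mega_transfers_per_s=3200)

# The 1000 GB/s figure quoted for GPUs, for comparison.
gpu_quoted = 1000.0

print(f"8-channel DDR4-3200: {ddr4_eight_channel:.1f} GB/s")
print(f"GPU advantage: {gpu_quoted / ddr4_eight_channel:.1f}x")
```

Each DDR4-3200 channel works out to 25.6 gigabytes per second, so doubling the channel count only doubles the bandwidth, which is why adding channels alone struggles to close a gap of nearly five times.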

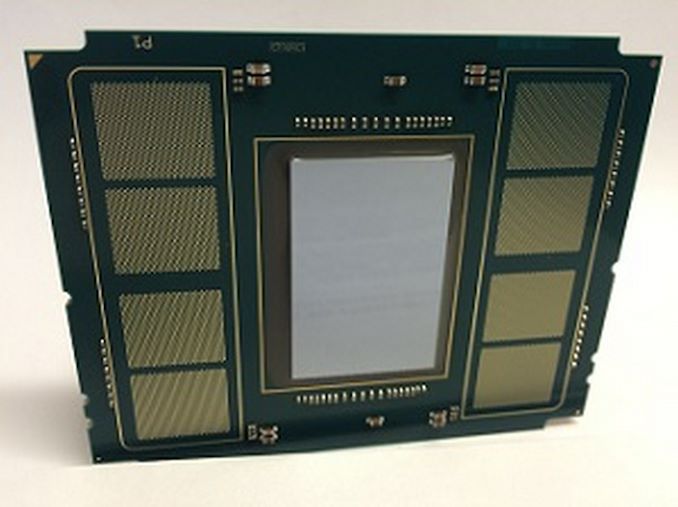

Intel Xeon Phi ‘KNL’ with 8 MCDRAM Pads in 2015

One of the processors that used to be built with integrated memory was Intel's Xeon Phi, a product discontinued a couple of years ago. The basis of the Xeon Phi design was lots of vector compute, controlled by up to 72 basic cores, but paired with 8-16 GB of on-board ‘MCDRAM’, connected via 4-8 on-board chiplets in the package. This allowed for 400 gigabytes per second of cache or addressable memory, paired with 384 GB of main memory at 102 gigabytes per second. However, since Xeon Phi was discontinued, no main server processor (at least for x86) announced to the public has had this sort of configuration.

New Sapphire Rapids with High-Bandwidth Memory

Until next year, that is. Intel's new Sapphire Rapids Xeon Scalable with High-Bandwidth Memory (SPR-HBM) will be coming to market. Rather than hide it away for use with one particular hyperscaler, Intel has stated to AnandTech that it is committed to making HBM-enabled Sapphire Rapids available to all enterprise customers and server vendors as well. These versions will come out after the main Sapphire Rapids launch, and allow for some interesting configurations. We understand that this means SPR-HBM will be available in a socketed configuration.

Intel states that SPR-HBM can be used with standard DDR5, offering an additional tier in memory caching. We understand that the HBM can be addressed directly or left as an automatic cache, which would be very similar to how Intel's Xeon Phi processors could access their high-bandwidth memory.

Alternatively, SPR-HBM can work without any DDR5 at all. This reduces the physical footprint of the processor, allowing for a denser design in compute-dense servers that do not rely much on memory capacity (these customers were already asking for quad-channel design optimizations anyway).

The amount of memory was not disclosed, nor the bandwidth or the technology. At the very least, we expect the equivalent of up to 8-Hi stacks of HBM2e, up to 16 GB each, with 1-4 stacks onboard leading to 64 GB of HBM. At a theoretical top speed of…