We consider the subsequent period would be the clever period. An period the place we are going to expertise 100 billion clever related gadgets. Exascale efficiency and structure will make this intelligence obtainable to all, enriching our lives in additional methods than we are able to think about right this moment. This is a future that conjures up and motivates me and my fellow Intel architects every single day.

We are producing knowledge at a sooner charge than our present capacity to research, perceive, transmit, safe and reconstruct it in actual time. Analyzing a ton of knowledge requires a ton of compute. More essential, for this knowledge to assist us with insights, it wants entry to compute in actual time, which implies low-latency, near the consumer. At Intel, we’re on a journey to unravel this exponentially arduous drawback.

Since the tip of the Dennard scaling period, extracting the exponential worth from transistor know-how impressed us to have a look at new approaches throughout the entire stack. This led us to what we name our Six Pillars of Technology Innovation, which we launched at our Architecture Day in December 2018. We consider that delivering advances throughout these pillars is important to proceed the exponential essence of Moore’s Law.

This week, at Architecture Day 2020, we showcased how we’re taking this ahead with a broad vary of thrilling new breakthroughs. We have made nice progress with our numerous mixture of scalar, vector, matrix and spatial architectures – designed with state-of-the-art course of know-how, fed by disruptive reminiscence hierarchies, built-in into programs with superior packaging, deployed at hyperscale with lightspeed interconnect hyperlinks, unified by a single software program abstraction, and developed with benchmark defining safety features.

We offered extra particulars about our disaggregated design methodology and our superior packaging roadmap. We demonstrated our mastering of positive bump pitches in EMIB and Foveros applied sciences by way of a number of product iterations in graphics and FPGAs, and on the shopper with Lakefield.

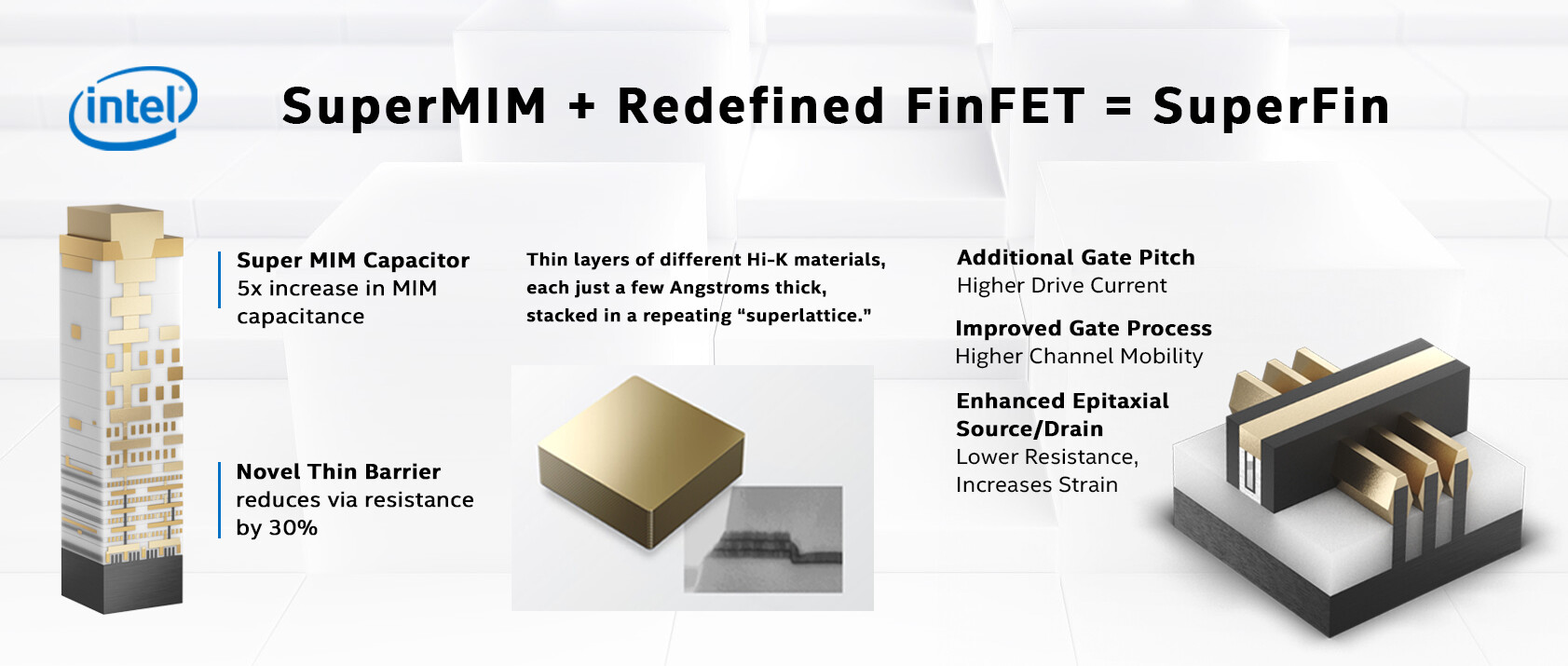

We additionally shared one of the crucial thrilling developments in our transistor roadmap by introducing our new 10 nm SuperFin know-how, a redefinition of the FinFET with new SuperMIM capacitors that allows the biggest single, intranode enhancement in Intel’s historical past, delivering efficiency enhancements akin to a full-node transition and enabling a management product roadmap.

When we combine our next-generation Willow Cove CPU structure with our 10 nm SuperFin know-how, the result’s the unbelievable new Tiger Lake platform. We unpacked particulars of the upcoming Tiger Lake system-on-chip structure, which supplies a generational leap in CPU efficiency, management graphics, management synthetic intelligence (AI), extra reminiscence bandwidth, further safety features, higher show, higher video and extra. I do know everybody is keen for all the particulars on Tiger Lake and we sit up for sharing extra within the coming weeks.

In addition to Tiger Lake, we offered a deep dive into our subsequent era Intel Agilex FPGA, which supplies breakthrough efficiency per watt. In truth, we showcased two generations of disaggregated merchandise utilizing EMIB and shared the primary outcomes of our 224 Gbps transceivers.

We additionally highlighted how Intel’s Xe GPU structure is the muse that helps us construct GPUs which might be scalable from teraflops to petaflops. Xe-LP powers management graphics in Tiger Lake and is our best microarchitecture for PC and cell computing platforms. Xe-LP additionally powers our first discrete GPU in additional than 20…

![[Interview] [Galaxy Unpacked 2026] Maggie Kang on Making](https://loginby.com/itnews/wp-content/uploads/2026/02/Interview-Galaxy-Unpacked-2026-Maggie-Kang-on-Making-100x75.jpg)