07:29PM EDT – Last session of Hot Chips is all about ML inference. Starting with Baidu, and its Kunlun AI processor

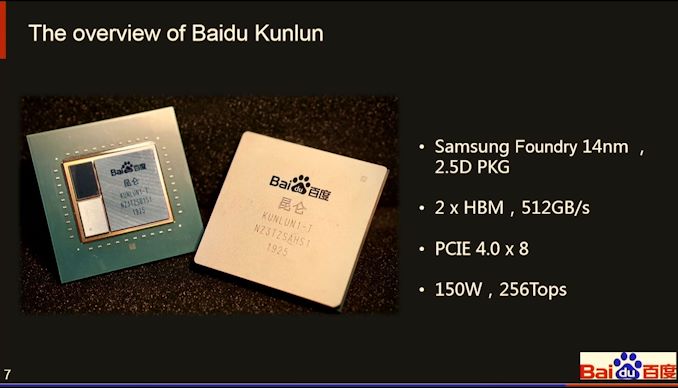

07:30PM EDT – We’ve heard of Baidu’s Kunlun a number of months in the past resulting from a press launch from the corporate and Samsung stating that the silicon was making use of Interposer-Cube 2.5D packaging, in addition to HBM2, and packing 260 TOPs into 150 W.

07:32PM EDT – Baidu and Samsung construct the chip collectively

07:33PM EDT – Need a processor to cowl a diversified AI workflow

07:33PM EDT – NLP = Neural Language Processing

07:33PM EDT – All these techniques are precedence inside Baidu

07:34PM EDT – Traditional AI computing is carried out in Cloud, Datacenter, HPC, Smart Industry, Smart City

07:35PM EDT – High-end AI chips price loads to create

07:36PM EDT – Try to discover market quantity as a lot as doable

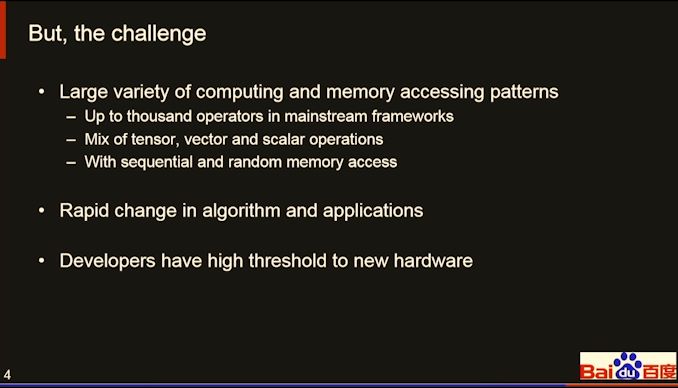

07:36PM EDT – The problem is the kind of compute

07:36PM EDT – Design and implementation

07:38PM EDT – Kunlun (Kun-loon)

07:38PM EDT – Need versatile, programmable, excessive efficiency

07:38PM EDT – Moved from FPGA to ASIC

07:39PM EDT – 256 TOPs in 2019

07:42PM EDT – (the presenter is a bit gradual fyi)

07:43PM EDT – Now some element

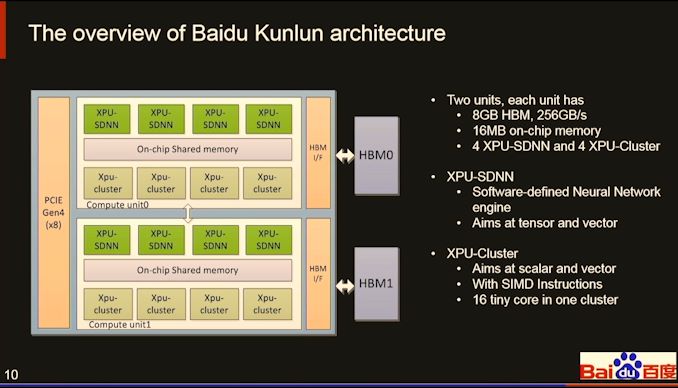

07:43PM EDT – Samsung Foundry 14nm

07:43PM EDT – Interposer package deal, 2 HBM, 512 GB/s

07:43PM EDT – PCIe 4.zero x8

07:43PM EDT – 150W / 256 TOPs

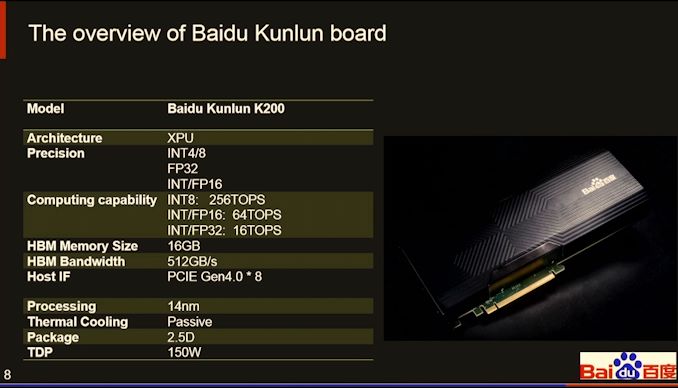

07:43PM EDT – PCIe card

07:44PM EDT – 256TOPs for INT8

07:44PM EDT – 16 GB HBM

07:44PM EDT – Passive cooling

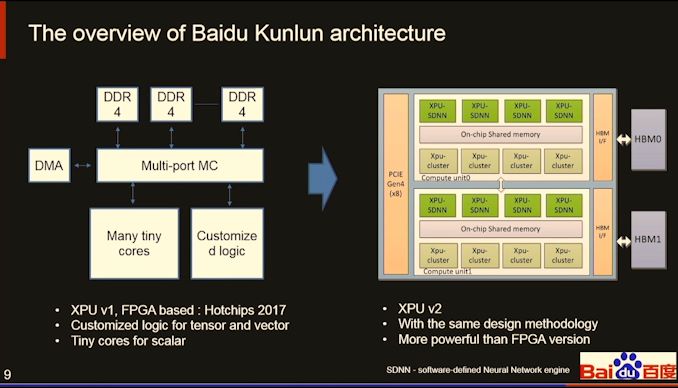

07:45PM EDT – Same format as XPUv1 proven in HotChips 2017

07:45PM EDT – XPU cluster

07:45PM EDT – Software outlined neural community engine

07:45PM EDT – XPU-SDNN

07:46PM EDT – XPU-SDNN does tensor and vector

07:46PM EDT – XPU-Cluster does scalar and vector

07:46PM EDT – Each cluster has 16 tiny cores

07:46PM EDT – every unit has 16 MB on-chip reminiscence

07:47PM EDT – (what are the tiny cores?)

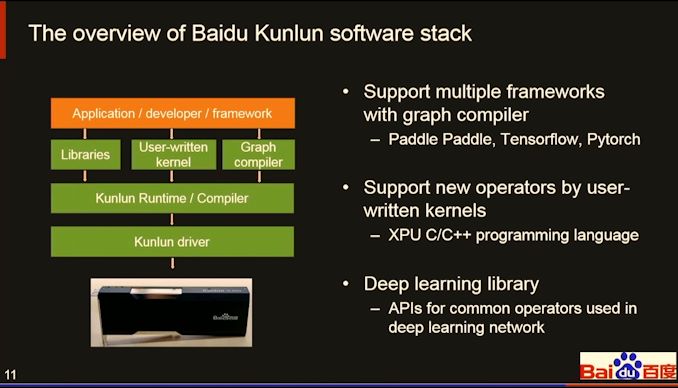

07:47PM EDT – Graph compiler

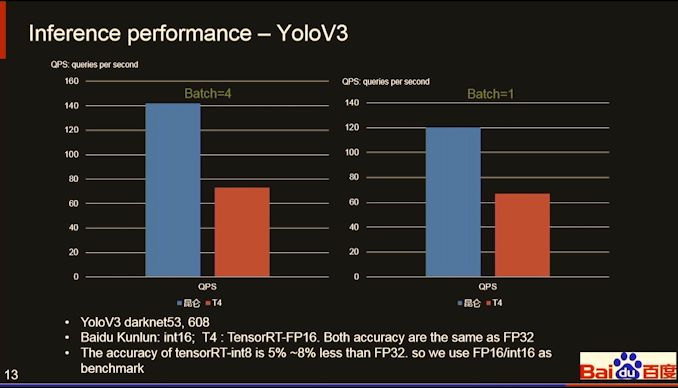

07:47PM EDT – helps PaddlePaddle, Tensorflow, pytorch

07:48PM EDT – XPU C/C++ for customized kernels

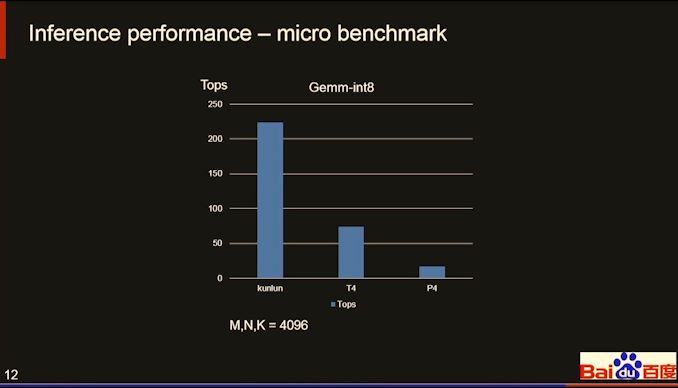

07:48PM EDT – 256 TOPs for 4096x4096x4096 GEMM INT8 inference

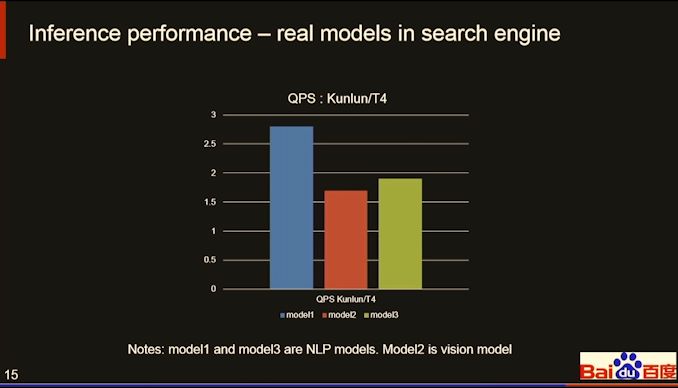

07:51PM EDT – These benchmarks are very odd

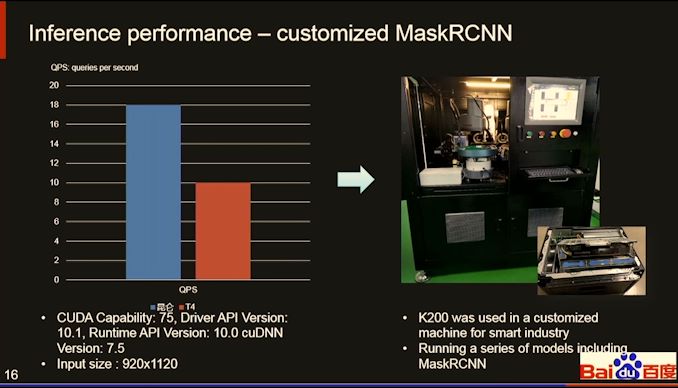

07:51PM EDT – massive edge = industrial

07:51PM EDT – Mask inspection

07:52PM EDT – Mask RCNN

07:52PM EDT – Available in Baidu Cloud

07:53PM EDT – Q&A time

07:54PM EDT – Q: {hardware} picture/video decode? A: No

07:55PM EDT – Q: INT4 throughput as INT8? A: INT4 identical as INT8, however INT4 and leverage extra of the capabilities

07:56PM EDT – Q: Size and BW of on-chip shared reminiscence? A: BW is 512 GB/s for every port every cluster (I do not assume that solutions the questions)

07:56PM EDT – Q: Static scheduling of sources? A: Yes

07:57PM EDT – Q:Power? A: Real Power 70-90W, virtually identical as T4, however TDP 150W

07:57PM EDT – That’s a wrap. Next discuss is Alibaba NPU