07:58PM EDT – Former Huawei GPU architect

07:59PM EDT – Development in early 2018

08:00PM EDT – Lots of business in inferencing

08:00PM EDT – Achieve a high-throughput, low-latency, high energy efficiency design

08:00PM EDT – Lots of Alibaba workloads are convolution-related

08:00PM EDT – Optimization for GEMM as well

08:00PM EDT – Flexible to support future activation functions

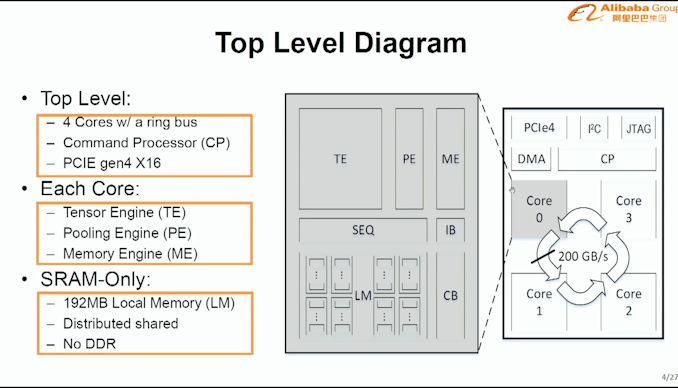

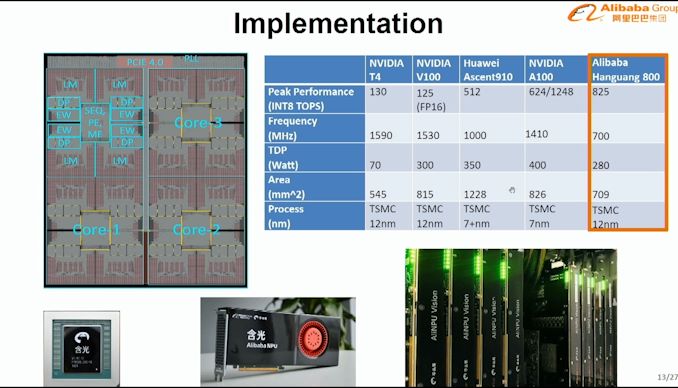

08:01PM EDT – Four cores with ring bus

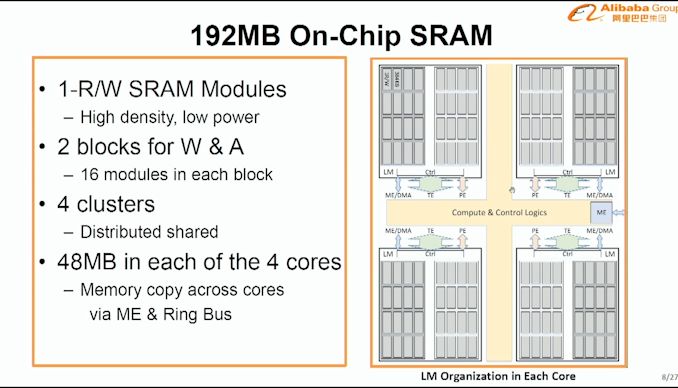

08:01PM EDT – 192 MB local memory, distributed shared, no DDR

08:01PM EDT – Command processor above all 4 cores

08:01PM EDT – PCIe 4.0 x16

08:02PM EDT – Each core has three engines: Tensor, Pooling, Memory

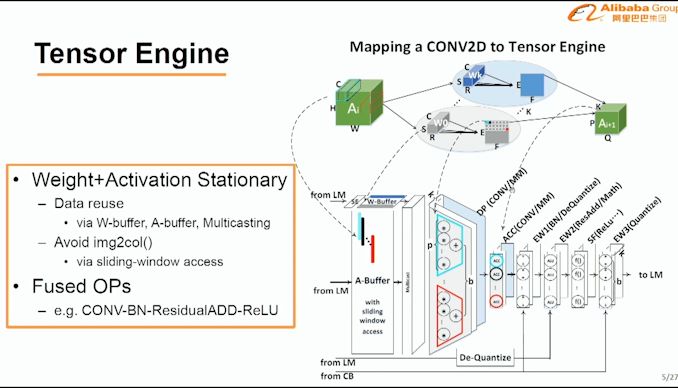

08:02PM EDT – This is the tensor engine throughput

08:02PM EDT – Data reuse and fused ops

08:02PM EDT – Reduce data movement

08:03PM EDT – Use sliding window to reduce access

08:04PM EDT – Convert data to FP and push down the pipe

08:04PM EDT – on EW2 stage

08:05PM EDT – FP19 support

08:05PM EDT – Memory engine can modify the arrangement of data

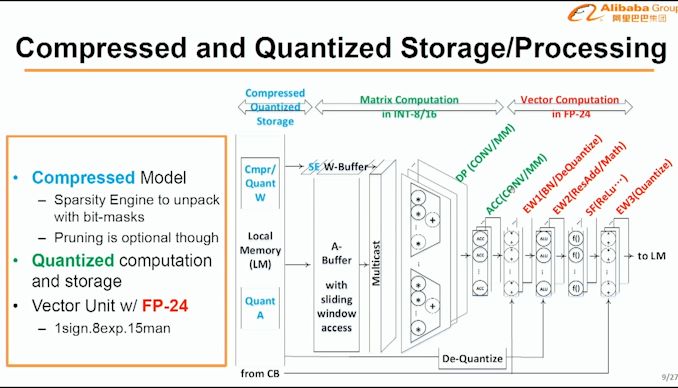

08:06PM EDT – Support for compressed models for sparse data

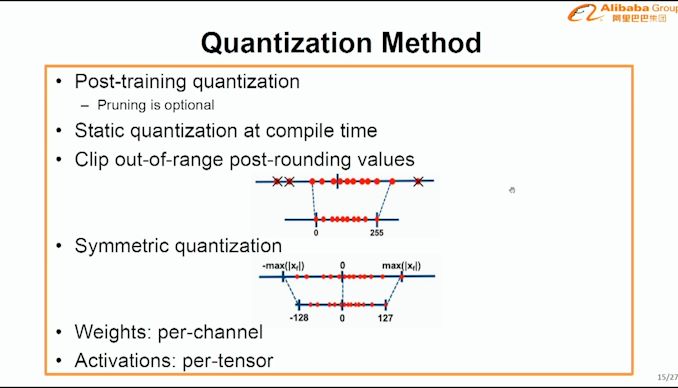

08:06PM EDT – Pruning is optional
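
The talk did not describe the compressed weight format, so the following is only a minimal sketch of one common approach to storing pruned weights: a presence bitmask plus the packed nonzero values. The function names `compress_weights` and `decompress_weights` are hypothetical and this is not Hanguang 800's actual format.

```python
def compress_weights(weights):
    """Split a weight list into a presence mask and the packed nonzero values.

    Illustrative only: a generic bitmask scheme, not the chip's real format.
    """
    mask = [w != 0 for w in weights]
    values = [w for w in weights if w != 0]
    return mask, values


def decompress_weights(mask, values):
    """Rebuild the dense weight list from the mask and packed values."""
    packed = iter(values)
    return [next(packed) if present else 0 for present in mask]


# A pruned row: most entries are zero, so only three values are stored.
dense = [0, 3, 0, 0, -2, 0, 0, 7]
mask, values = compress_weights(dense)
restored = decompress_weights(mask, values)
print(len(values), "of", len(dense), "weights stored")
```

The round trip is lossless, and the storage saving grows with the fraction of weights pruned to zero.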

08:06PM EDT – Quantized to INT16/INT8

08:06PM EDT – FP24 vector unit

08:07PM EDT – Way buffer

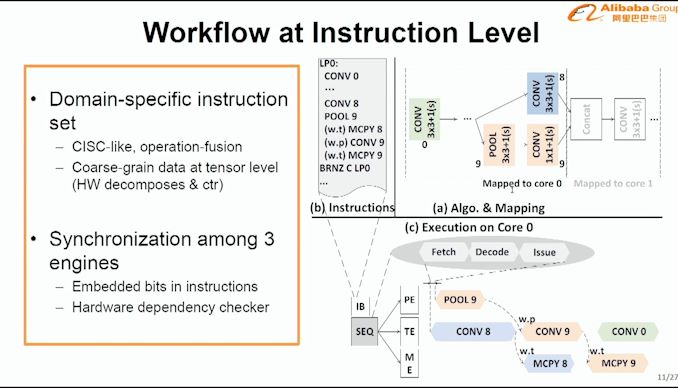

08:08PM EDT – This is a typical workflow

08:09PM EDT – Host CPU communicates to CP

08:09PM EDT – Domain-specific instruction set

08:09PM EDT – operation fusion

08:09PM EDT – CISC-like

08:10PM EDT – 3-engine sync

08:10PM EDT – Two syncs – at compiler or at hardware

08:11PM EDT – Scalable task mapping

08:12PM EDT – Use PCIe switch for multi-chip pipelining

08:12PM EDT – 825 TOPs INT8 at 280W

08:12PM EDT – 700 MHz

08:12PM EDT – 709 mm2

08:12PM EDT – TSMC 12nm
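
To put the headline numbers in context, here is a rough back-of-the-envelope calculation in Python. It assumes the 825 TOPS peak is reached at the quoted 700 MHz with all four cores active, which the talk did not state explicitly; the variable names are only for illustration.

```python
# Figures quoted in the talk.
PEAK_INT8_TOPS = 825
POWER_W = 280
CLOCK_MHZ = 700
DIE_AREA_MM2 = 709
CORES = 4

# Derived metrics (assumption: peak TOPS applies at 700 MHz on all 4 cores).
tops_per_watt = PEAK_INT8_TOPS / POWER_W
tops_per_mm2 = PEAK_INT8_TOPS / DIE_AREA_MM2
ops_per_cycle = PEAK_INT8_TOPS * 1e12 / (CLOCK_MHZ * 1e6)
ops_per_cycle_per_core = ops_per_cycle / CORES

print(f"{tops_per_watt:.2f} TOPS/W")
print(f"{tops_per_mm2:.2f} TOPS/mm2")
print(f"{ops_per_cycle:,.0f} INT8 ops per cycle across the chip")
print(f"{ops_per_cycle_per_core:,.0f} INT8 ops per cycle per core")
```

Under those assumptions the chip lands at just under 3 TOPS/W and needs well over a million INT8 operations per clock across the four cores.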

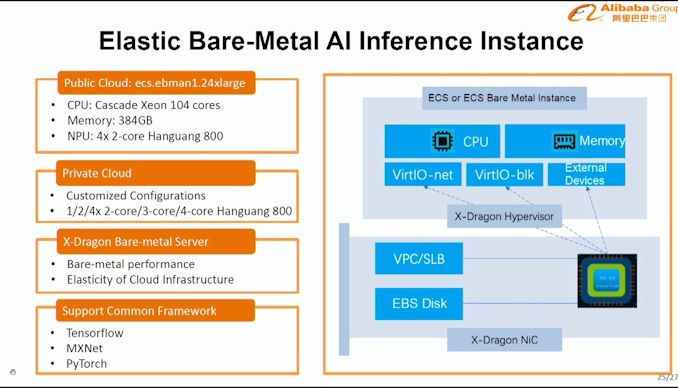

08:12PM EDT – Support most major frameworks

08:13PM EDT – Support for post-training quantization
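
As a reminder of what post-training quantization involves, here is a minimal sketch of symmetric per-tensor INT8 quantization in plain Python. It is a generic textbook scheme, not necessarily what Alibaba's toolchain does, and the helper names `quantize_int8` and `dequantize` are hypothetical.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.0]
q_weights, scale = quantize_int8(weights)
approx = dequantize(q_weights, scale)
print(q_weights, scale)
```

No retraining is involved: the scale comes straight from the trained weights, and the reconstruction error per value is bounded by half a quantization step.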

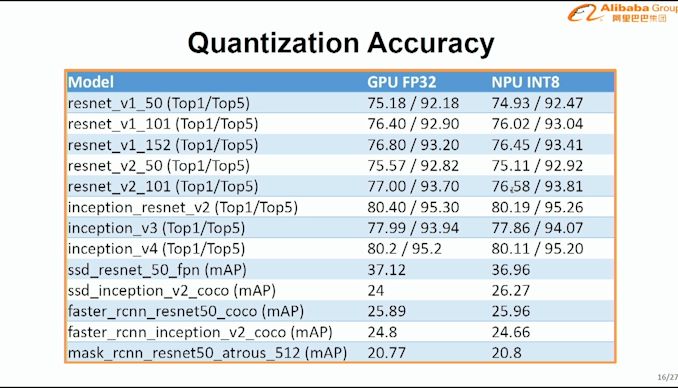

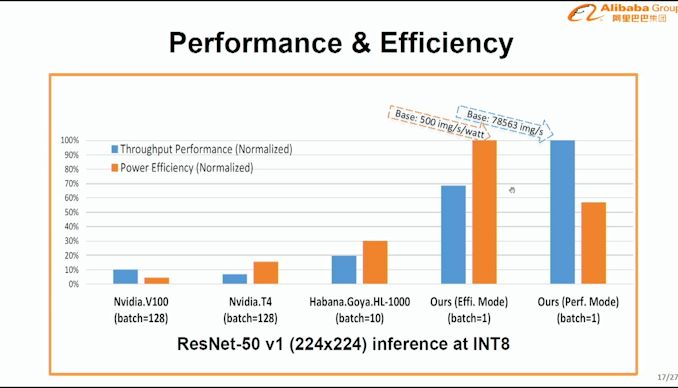

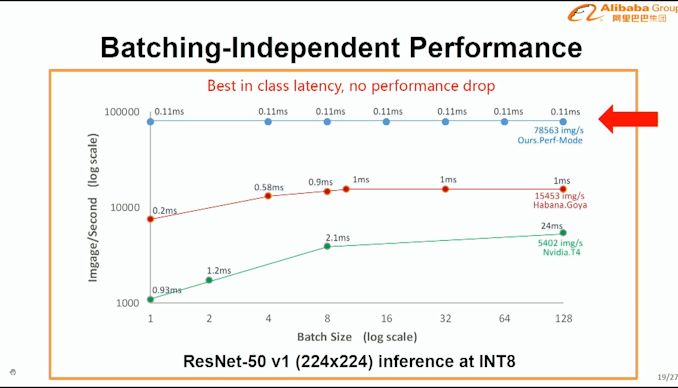

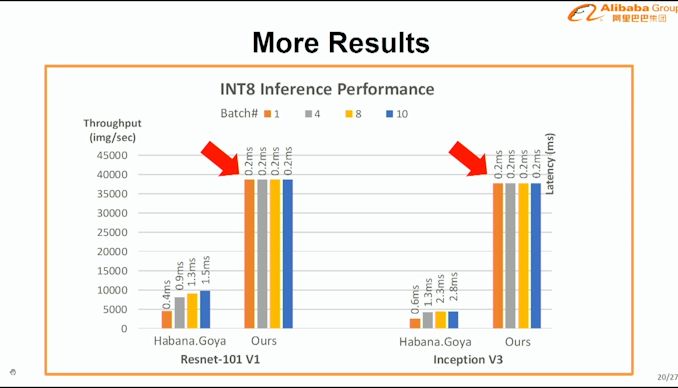

08:15PM EDT – At batch 1, NPU throughput outperforms V100 at batch 128

08:15PM EDT – Using ResNet50 v1

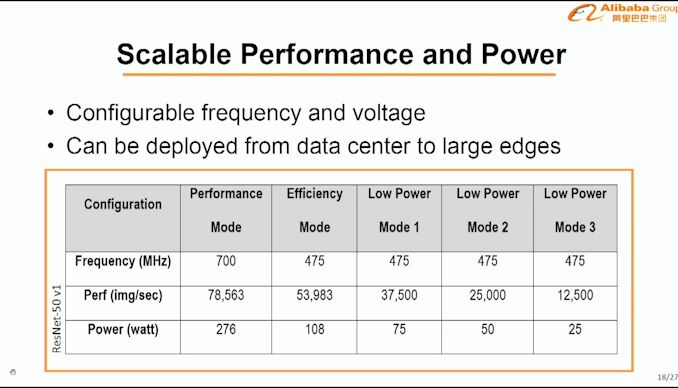

08:16PM EDT – Scalable perf and power

08:16PM EDT – 25W to 280W

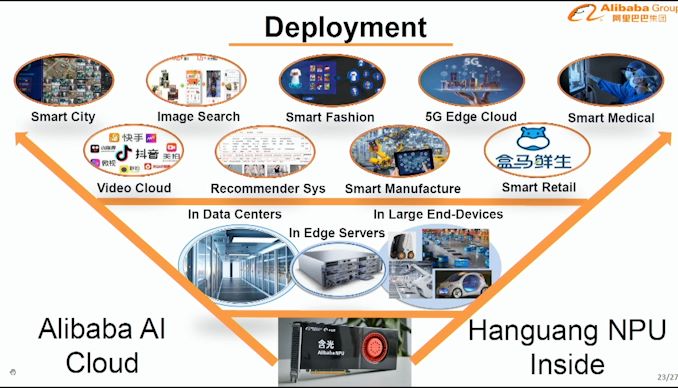

08:19PM EDT – Targeting multiple applications

08:21PM EDT – ecs.ebman1.24xlarge uses Cascade 104 cores with 4×2-core Hanguang 800

08:21PM EDT – public cloud

08:23PM EDT – Q&A Time

08:23PM EDT – Q: Recommendation engines – what other targets? A: Primarily computer vision; after the optimizations, it's well suited to recommendation and search as well.

08:24PM EDT – Q: Replacing the T4? A: Yes

08:24PM EDT – Q: Embedding tables in host memory? A: Correct

08:25PM EDT – Q: Support workloads > 192 MB? A: Can enable multiple chips and chip-to-chip through PCIe
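
For a sense of scale, a lower bound on the chip count for a model that exceeds local memory can be sketched as below. This assumes the whole 192 MB is available for the model, which is optimistic since activations and buffers also need space; `min_chips_for` is a hypothetical helper, not part of any Alibaba tool.

```python
import math

LOCAL_MEMORY_MB = 192  # per-chip local memory quoted in the talk


def min_chips_for(model_mb, usable_mb=LOCAL_MEMORY_MB):
    """Lower bound on chips needed to hold a model entirely in local memory.

    Assumption: all of usable_mb can hold model data, which is optimistic.
    """
    if model_mb <= 0:
        raise ValueError("model size must be positive")
    return math.ceil(model_mb / usable_mb)


for size_mb in (150, 192, 193, 500):
    print(size_mb, "MB ->", min_chips_for(size_mb), "chip(s)")
```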

08:25PM EDT – Q: Sparsity engine for weights and activations? A: Just weights

08:26PM EDT – Q: Non-2D convolution like BERT? A: We can map onto our chip and run it with precision to meet requirements, but performance isn't satisfactory. Size is a problem, so we need multiple chips, which has a perf penalty

08:27PM EDT – Q: Why compare A100 and Goya at different batches to NPU? A: We can do single batch throughput better while keeping latency super low

08:28PM EDT – That's a wrap. Now for the final talk – silicon photonics!