04:24AM EDT – I’m here at the first GTC Europe event, ready to go for the Keynote talk hosted by CEO Jen-Hsun Huang.

04:25AM EDT – This is a satellite event to the main GTC in San Francisco. By comparison, the main GTC has 5000 attendees; this one has 1600-ish

04:25AM EDT – This is essentially GTC on the road – they’re doing 5 or 6 of these satellite events around the world after the main GTC

04:27AM EDT – We’re about to start

04:29AM EDT – Opening video

04:30AM EDT – ‘Deep Learning is helping farmers analyze in days crop data that used to take years’

04:30AM EDT – ‘Using AI to deliver relief in harsh conditions’ (drones)

04:30AM EDT – ‘Using AI to sort trash’

04:30AM EDT – Mentioning AlphaGo

04:31AM EDT – JSH to the stage

04:32AM EDT – ‘GPUs can do what normal computing cannot’

04:32AM EDT – ‘We’re at the beginning of something important, the 4th industrial revolution’

04:33AM EDT – ‘Several things at once came together to make the PC era something special’

04:33AM EDT – ‘In 2006, the mobile revolution and Amazon AWS happened’

04:33AM EDT – ‘We could put high performance compute technology in the hands of 3 billion people’

04:34AM EDT – ‘10 years later, we have the AI revolution’

04:34AM EDT – ‘Now, we have software that writes software. Machines learn. And soon, machines will build machines.’

04:35AM EDT – ‘In each era of computing, a new computing platform emerged’

04:35AM EDT – ‘Windows, ARM, Android’

04:35AM EDT – ‘A brand new type of processor is needed for this revolution – it happened in 2012 with the Titan X’

04:37AM EDT – ‘Deep Learning was in the process, and the ability to generalize learning was a great thing, but it had a handicap’

04:37AM EDT – ‘It required a large amount of data to write its own software, which is computationally exhausting’

04:38AM EDT – ‘The handicap lasted two decades’

04:38AM EDT – ‘Deep Neural Nets were then developed on GPUs to solve this’

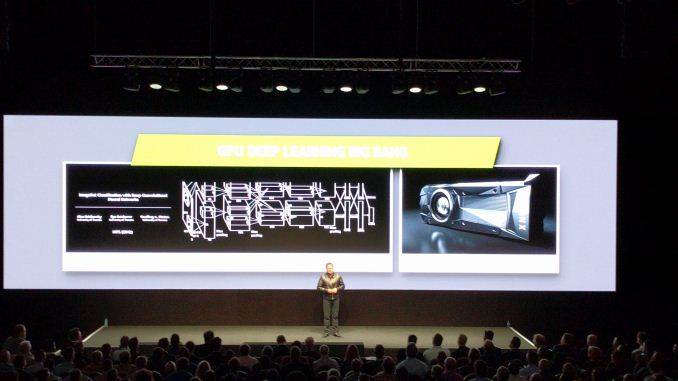

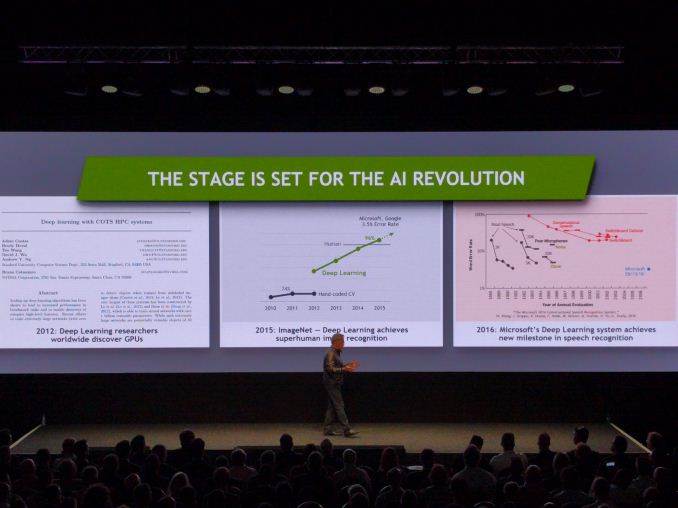

04:39AM EDT – ‘ImageNet Classification with Deep Convolutional Neural Networks’ by Alex Krizhevsky at the University of Toronto

04:40AM EDT – ‘The neural network out of that paper, ‘AlexNet’, beat seasoned Computer Vision veterans with hand-tuned algorithms at ImageNet’

04:40AM EDT – ‘One of the most exciting events in computing for the last 25 years’

04:41AM EDT – ‘Now, not a week goes by without a new breakthrough or milestone being reached’

04:42AM EDT – ‘e.g., 2015 where Deep Learning beat humans at ImageNet, 2016 where speech recognition reaches sub-3% in conversational speech’

04:43AM EDT – ‘As we grow, the computational complexity of these networks becomes even greater’

04:44AM EDT – ‘Now, Deep Learning can beat humans at image recognition – it has achieved ‘Super Human’ levels’

04:44AM EDT – ‘One of the big challenges is autonomous vehicles’

04:44AM EDT – ‘Traditional CV approaches wouldn’t ever work for auto’

04:45AM EDT – ‘Speech recognition is one of the most researched areas in AI’

04:45AM EDT – ‘Speech will not only change how we interact with computers, but what computers can do’

04:47AM EDT – Correction, Microsoft hit 6.3% error rate in speech recognition

04:47AM EDT – ‘The English language is fairly difficult for computers to understand, especially in a noisy environment’

04:48AM EDT – ‘Reminder, humans don’t achieve 0% error rate’

04:48AM EDT – ‘These three achievements are great: we now have the ability to simulate human brains: learning, sight and sound’

04:49AM EDT – ‘The ability to perceive and the ability to learn are fundamentals of AI – we now have the three pillars to solve large-scale AI problems’

04:49AM EDT – ‘NVIDIA invented the GPU, and 10 years ago we invented GPU computing’

04:50AM EDT – ‘Almost all new high performance computers are accelerated, and NVIDIA is in 70% of them’

04:50AM EDT – ‘Virtual Reality is essentially computing human imagination’

04:51AM EDT – ‘Some people have called NVIDIA the AI Computing Company’

04:51AM EDT – JSH: ‘We’re still the fun computing company, solving problems, and most of the work we do is exciting for the future’

04:52AM EDT – ‘Merging simulation, VR and AR, powered by AI: scenes like Tony Stark in Iron Man capture what NVIDIA is going after’

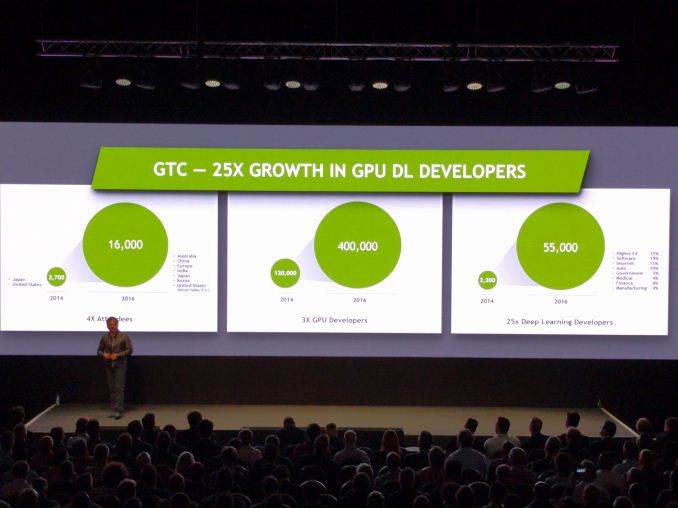

04:53AM EDT – GTC 2014 to 2016: 4x attendees, 3x GPU developers, 25x deep learning devs, moving from 2 events/year to 7 events/year worldwide

04:54AM EDT – The GPU devs and Deep Learning devs numbers are ‘industry metrics’, not GTC attendees

04:55AM EDT – ‘I want the developers to think about the Eiffel Tower – an iconic image in Europe’

04:56AM EDT – ‘The brain typically imagines an image of the tower – your brain did the graphics’

04:56AM EDT – ‘The brain thinks like a GPU, and the GPU is like a brain’

04:57AM EDT – ‘The computer industry has invested trillions of dollars into this’

04:57AM EDT – ‘The largest supercomputer has 16,000-18,000 NVIDIA Tesla GPUs, over 25m CUDA cores’

04:58AM EDT – ‘GPUs are at the forefront of this’

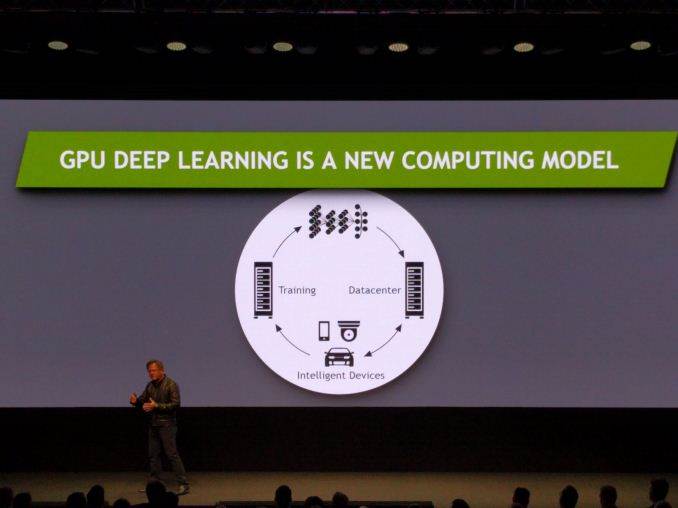

04:58AM EDT – ‘GPU Deep Learning is actually a new model’

04:59AM EDT – ‘Previously, software engineers write the software, QA engineers test it, and in production the software does what we expect’

04:59AM EDT – ‘GPU Deep Learning is a bit different’

04:59AM EDT – ‘Learning is important – a deep neural net has to gather data and learn from it’

05:00AM EDT – ‘This is the computationally intensive part of Deep Learning’

05:00AM EDT – ‘Then the devices infer, using the generated neural net’
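
The train-then-infer split described above can be sketched in a few lines of Python. This is a toy illustration only: the one-weight model, the made-up samples and the learning rate are all assumptions for the sake of the example, not anything shown on stage.

```python
# Toy data: made-up samples of the relationship y = 2x (an assumption for illustration).
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

# Training: the expensive part. Loop over the data many times,
# nudging the single weight w to reduce the squared error of w * x against y.
w = 0.0
learning_rate = 0.05
for epoch in range(200):
    for x, y in data:
        grad = 2 * (w * x - y) * x   # derivative of (w*x - y)^2 with respect to w
        w -= learning_rate * grad

# Inference: the cheap part. The trained weight is simply applied to new input.
def infer(x):
    return w * x

print(f"learned weight: {w:.4f}")
print(f"prediction for x=4: {infer(4.0):.4f}")
```

Training touches every sample hundreds of times, while inference is a single multiply per query, which is the asymmetry the keynote is pointing at.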

05:00AM EDT – ‘GPUs have enabled larger and deeper neural networks that are better trained in shorter times’

05:01AM EDT – ‘A modern neural network has hundreds of hidden layers and learns a hierarchy of features’

05:01AM EDT – ‘Our brain has the ability to do that. Now we can do that in a computer context’

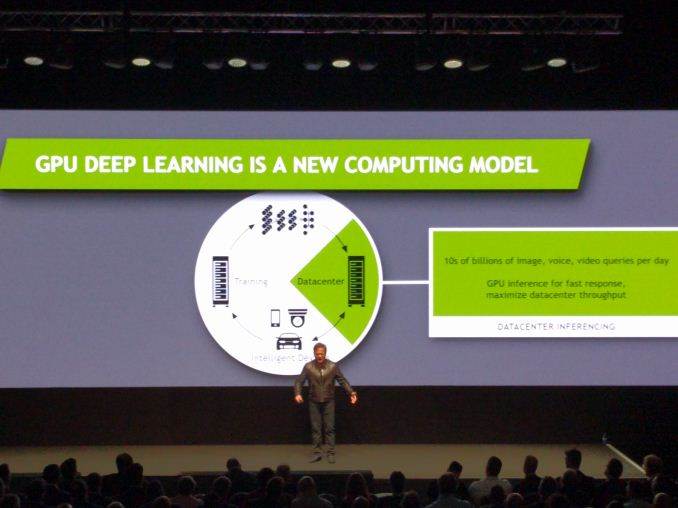

05:02AM EDT – ‘The trained network is placed into data centers for cloud inferencing with large libraries to answer questions from its database’

05:02AM EDT – ‘This is going to be big in the future. Every question in the future will be routed through an AI network’

05:03AM EDT – ‘GPU inferencing makes response times a lot faster’

05:03AM EDT – ‘This is the area of the intelligent device’

05:04AM EDT – ‘This is the technology for IoT’

05:04AM EDT – ‘AI is comparatively small coding with lots and lots of computation’

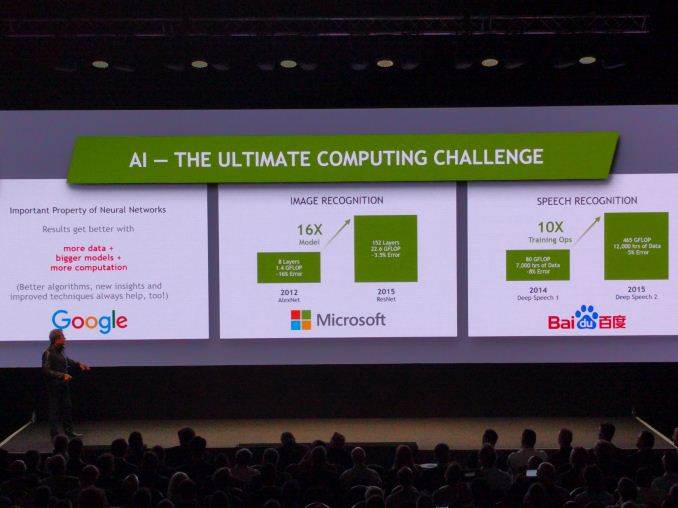

05:05AM EDT – ‘The important factor of neural nets is that they work better with larger datasets and more computation’

05:05AM EDT – ‘It’s about the higher quality network’

05:05AM EDT – 2012 AlexNet was 8 layers, 1.4 GFlops, 16% error

05:06AM EDT – 2015 ResNet is 152 Layers, 22.6 GFlops for 3.5% error
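
For a rough sense of scale, the AlexNet and ResNet figures quoted from the slide can be compared directly. A minimal Python sketch, using only those numbers (the variable names are my own):

```python
# Figures as quoted on the slide: depth, compute and ImageNet error.
alexnet = {"layers": 8, "gflops": 1.4, "error_pct": 16.0}
resnet = {"layers": 152, "gflops": 22.6, "error_pct": 3.5}

depth_ratio = resnet["layers"] / alexnet["layers"]            # how much deeper
compute_ratio = resnet["gflops"] / alexnet["gflops"]          # how much more compute
error_reduction = alexnet["error_pct"] / resnet["error_pct"]  # how much lower the error

print(f"depth:   {depth_ratio:.1f}x")
print(f"compute: {compute_ratio:.1f}x")
print(f"error:   {error_reduction:.1f}x lower")
```

On these numbers, ResNet is 19x deeper and needs roughly 16x the compute for an error rate about 4.6x lower.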

05:06AM EDT – The 2016 winner has improved this with a network 4x deeper

05:06AM EDT – Baidu in 2015, using 12k hours of data and 465 GFLOPs, achieves a 5% speech recognition error rate

05:07AM EDT – ‘This requires a company to push hardware development at a faster pace than Moore’s Law’

05:07AM EDT – ‘So NVIDIA thought, why not us’

05:08AM EDT – ‘I want to dedicate my next 40 years to this endeavour’

05:08AM EDT – ‘The rate of change for deep learning has to grow, not diminish’

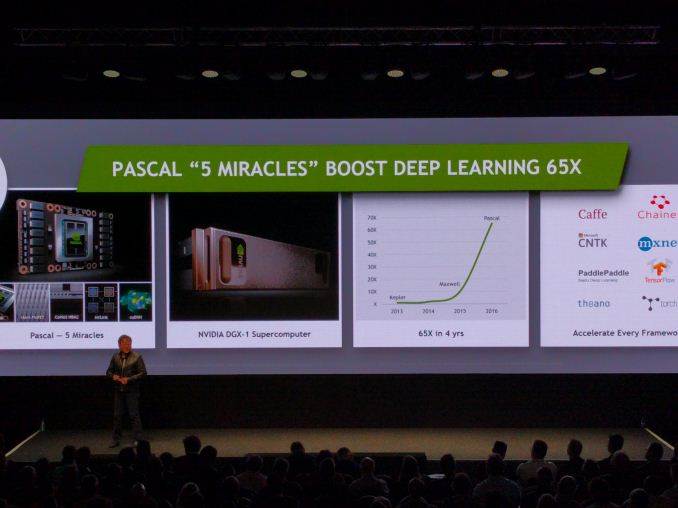

05:09AM EDT – ‘The first customer of Pascal is an open lab called OpenAI, and their mission is to democratize the AI field’

05:10AM EDT – ‘Our platform is so accessible – from a gaming PC to the cloud, supercomputer, or DGX-1’

05:11AM EDT – ‘You can buy it, rent it, anywhere in the world – if you are an AI researcher, this is your platform’

05:11AM EDT – ‘We can’t slow down against Moore’s Law, we have to hypercharge it’

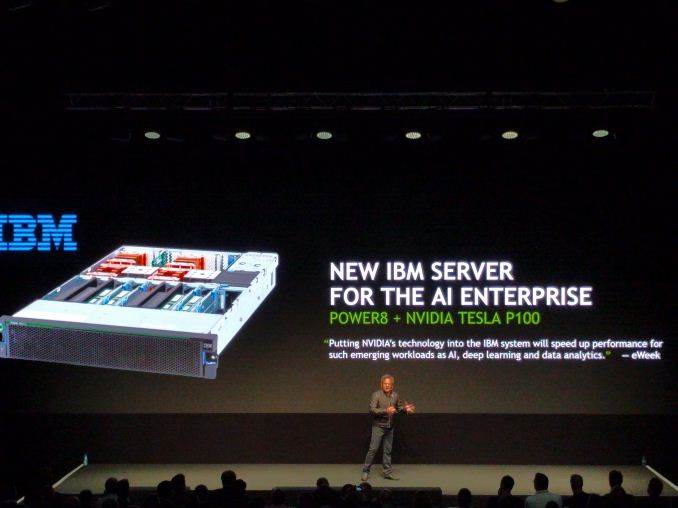

05:12AM EDT – ‘We also have partners, such as IBM with cognitive computing services’

05:13AM EDT – ‘We worked with IBM to develop NVLink to pair POWER8 with the NVIDIA P100 GPU to get a network of fast processors and fast GPUs, dedicated to solve AI problems’

05:13AM EDT – ‘Today we are announcing a new partner’

05:13AM EDT – ‘To apply AI for other companies worldwide’

05:14AM EDT – New partner is SAP

05:14AM EDT – ‘We want to bring AI to companies around the world as one of the biggest AI hardware/software collaborations’

05:14AM EDT – ‘We want SAP to be able to turbocharge their customers with Deep Learning’

05:15AM EDT – ‘The research budget of DGX-1 was $2b, with 10k engineer years of work’

05:15AM EDT – ‘DGX-1 is now up for sale. It’s in the hands of lots of high impact research teams’

05:17AM EDT – ‘We’re announcing two designated research centers for AI research in Europe – one in Germany, one in Switzerland, with access to DGX-1 hardware’

05:17AM EDT – Now Datacenter Inferencing

05:18AM EDT – ‘After the months and months of training of the largest networks, when the network is complete, it requires a hyperscale datacenter’

05:18AM EDT – ‘The market goes into 10s of millions of hyperscale servers’

05:18AM EDT – ‘With the right inference GPU, we can provide instantaneous solutions when billions of queries are applied at once’

05:19AM EDT – ‘NVIDIA can allow datacenters to support a million times more load without costs or power ballooning by a factor of a million’

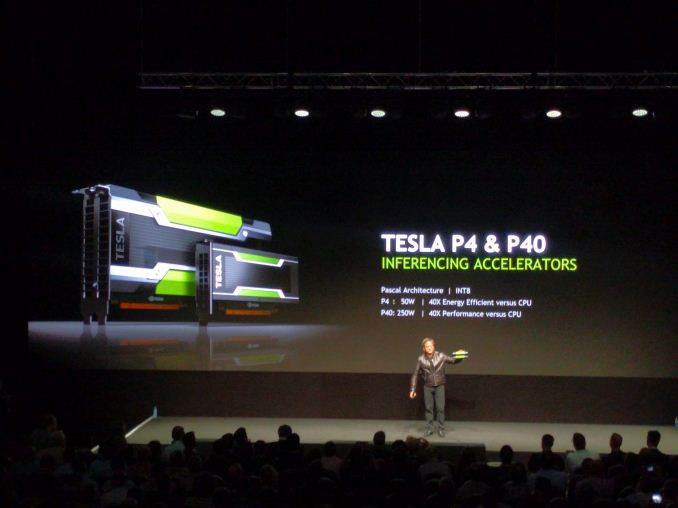

05:20AM EDT – ‘NVIDIA recently launched the Tesla P4 and P40 inferencing accelerators’

05:20AM EDT – ‘You can replace 3-4 racks with one GPU’

05:21AM EDT – Here’s the link to our write-up of the P4/P40 news: http://www.anandtech.com/show/10675/nvidia-announces-tesla-p40-tesla-p4

05:21AM EDT – P4 at 50W, P40 at 250W, using Pascal and using INT8
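
As background on the INT8 mention: the general idea of 8-bit inference is to map floating-point values onto a small integer range and accept a bounded rounding error. Below is a generic symmetric-quantization sketch in Python. It is an assumption-laden illustration of the concept, not NVIDIA's calibration method or anything specific to Pascal; the function names and sample weights are hypothetical.

```python
def quantize_int8(values):
    """Symmetric linear quantization of floats to the range [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs else 1.0   # one float step per integer step
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Map the 8-bit integers back to approximate float values."""
    return [q * scale for q in quantized]

weights = [0.5, -1.27, 0.02, 1.0]      # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print("int8 values:", q)
print("max round-trip error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each value is stored in one byte instead of four, and the round-trip error stays within half a quantization step.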

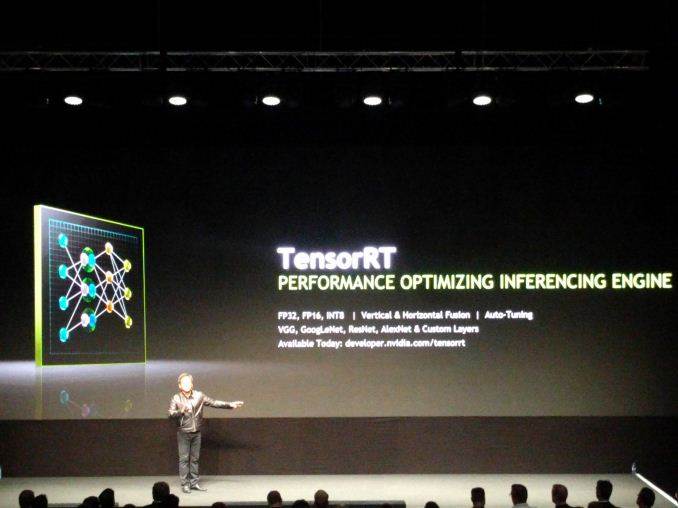

05:22AM EDT – Announcing TensorRT

05:22AM EDT – ‘Software package that compiles, fuses operations and autotunes, optimizing the code for the GPU for efficiency’
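
To illustrate what "fusing operations" means in general terms, here is a conceptual Python sketch. It is not TensorRT code and makes no claim about how TensorRT implements fusion on the GPU; the scale, bias and ReLU steps are an assumed example of three element-wise operations that can be collapsed into one pass.

```python
def unfused(xs, scale, bias):
    """Three separate passes over the data, each producing an intermediate list."""
    scaled = [x * scale for x in xs]             # pass 1: scale
    shifted = [s + bias for s in scaled]         # pass 2: add bias
    return [max(0.0, v) for v in shifted]        # pass 3: ReLU

def fused(xs, scale, bias):
    """The same arithmetic in a single pass, with no intermediate lists."""
    return [max(0.0, x * scale + bias) for x in xs]

inputs = [-2.0, -0.5, 0.0, 1.5, 3.0]             # made-up activations
print(unfused(inputs, 2.0, 1.0))
print(fused(inputs, 2.0, 1.0))
```

Both functions return identical results; the fused version simply avoids writing and re-reading intermediate data, which is where the efficiency gain comes from on real hardware.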

05:22AM EDT – ‘Support a number of networks today, plan to support all major networks in time’

05:23AM EDT – ‘Live video is an increasingly important form of shared content’

05:24AM EDT – ‘It would be nice if, as you are uploading the live video, it would identify which of your viewers/family would be interested’

05:24AM EDT – ‘AI should be able to do this’

05:24AM EDT – Example on stage of 90 streams at 720p30 running at once
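
For scale, the load implied by that demo can be worked out with some quick arithmetic. The sketch below assumes 720p means the standard 1280x720 frame; the stream count and frame rate are as quoted.

```python
streams = 90
fps = 30
width, height = 1280, 720          # assumption: standard 720p resolution

frames_per_second = streams * fps
pixels_per_frame = width * height
pixels_per_second = frames_per_second * pixels_per_frame

print(f"{frames_per_second} frames/s")
print(f"{pixels_per_second / 1e9:.2f} gigapixels/s")
```

That is 2,700 frames, or roughly 2.5 billion pixels, to classify every second.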

05:25AM EDT – A human can do this relatively easily, but a bit slowly

05:25AM EDT – ‘A computer can learn from the videos what is happening and assign relevant tags’

05:26AM EDT – ‘The future needs the ability to filter based on tags generated…