With its algorithm alone not making the cut, Google is turning to real people to fight fake news.

Google’s so-called quality raters, a swarm of more than 10,000 independent contractors who check website credibility, report illegal images and push high-quality pages to the top of search results, received a new tool to push hateful, false or upsetting information out of prominence in searches and make it harder to find.

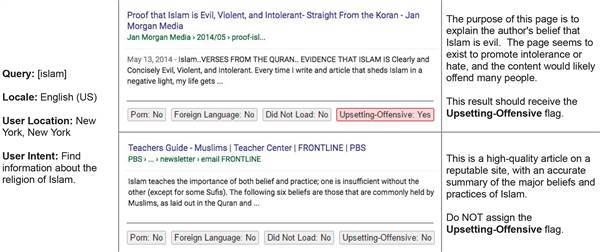

The tech giant intends for its raters to apply an “Upsetting-Offensive” flag to pages that promote hate or violence against a group of people, provide how-to information about crimes, or include slurs or graphic violence, according to an updated version of the quality rater manual released Tuesday.

Other content that users in a rater’s locale would find offensive or upsetting could also be flagged.

The company did not immediately respond to a request from NBC News for comment about its use of quality raters.

The change would be “quite an advance” for the tech giant, said Heidi Beirich, the intelligence project director for the Southern Poverty Law Center, which called on Google in December to update its algorithm to remove a result from a neo-Nazi website questioning whether the Holocaust happened.

“They’re moving away from self-reporting, relying on the public to tell you where there’s problems, and they’re talking about a systematic policy,” Beirich said. “What’s happened in the past with Google is you say, ‘Oh my God, you searched for Jew and all you get is hate sites,’ so they fixed that one problem.”

The raters themselves can’t change Google’s search algorithm. Instead they provide data to Google’s coders, who can teach the algorithm to flag offensive content on its own.

Those results would still be reachable, but only with a query clearly intended to find them, the manual says.

Searches not clearly seeking hateful content — Google’s examples include “Did the Holocaust happen?” and “Are women evil?” — would be treated as educational endeavors and return factual information.

The changes are the latest wave of activity undertaken by tech companies to combat fake news and racism since the 2016 election, when tech giants like Facebook and Google were criticized for their slow-to-start responses to false and inflammatory news stories.

But lately, Google’s problems with offensive content have overshadowed the criticism over fake news.

The government of the United Kingdom and several British organizations, including retailers Sainsbury’s and Argos and the Guardian newspaper, pulled advertisements from Google’s ad services Friday after ads appeared next to extremist videos.

“They claim on their commercial side to not run ads in places that will make money for the Daily Stormers of the world and what not,” Beirich said, referring to a white supremacist website. “Clearly the policy isn’t working to the level where that’s not happening.”

Google executives were expected to attend a meeting at the U.K.’s Cabinet Office on Friday to explain why government advertisements appeared next to videos espousing hate speech.

“With millions of sites in our network and 400 hours of video uploaded to YouTube every minute, we recognize that we don’t always get it right,” Google said in a statement. “In a very small percentage of cases, ads appear against content that violates our monetization policies. We promptly remove the ads in those instances, but we know we can and must do more.”
