At Hot Chips last week, IBM announced its new mainframe Z processor. It's a big, interesting piece of kit that I want to cover in a wider piece at some point, but there was one feature of the core design that I want to pluck out and focus on specifically. IBM Z is known for having large L3 caches, backed by a separate global L4 cache chip that operates as a cache between multiple sockets of processors. With the new Telum chip, IBM has done away with that: there's no L4, but interestingly enough, there's no L3 either. What they've done instead might be an indication of the future of on-chip cache design.

Caches: A Brief Primer

Any modern processor has multiple levels of cache associated with it. These are separated by capacity, latency, and power: the fastest cache, closest to the execution ports, tends to be small, then further out we have larger caches that are slightly slower, and then perhaps another cache before we hit main memory. Caches exist because the CPU core wants data NOW, and if it were all held in DRAM it would take 300+ cycles each time to fetch data.
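To put a rough number on why those levels matter, the usual back-of-the-envelope tool is the average memory access time (AMAT). The sketch below folds a hierarchy from DRAM inward. Only the 300-cycle DRAM figure comes from the text above; every other latency and hit rate is a hypothetical value chosen for illustration, and the model assumes a miss pays the full latency of each level it passes through, which real cores partly hide with parallel lookups.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class CacheLevel:
    name: str
    latency: float   # cycles to return data on a hit at this level (hypothetical)
    hit_rate: float  # fraction of accesses reaching this level that hit (hypothetical)


def amat(levels: Sequence[CacheLevel], memory_latency: float) -> float:
    """Average cycles per access, folding from main memory inward.

    cost_i = latency_i + (1 - hit_rate_i) * cost_{i+1}, where the level
    beyond the last cache is DRAM. Assumes a miss pays the full latency of
    every level it passes through before moving outward.
    """
    cost = memory_latency
    for level in reversed(levels):
        cost = level.latency + (1.0 - level.hit_rate) * cost
    return cost


DRAM_CYCLES = 300  # the article's rough "300+ cycles" figure

hierarchy = [
    CacheLevel("L1", latency=4, hit_rate=0.90),
    CacheLevel("L2", latency=12, hit_rate=0.60),
    CacheLevel("L3", latency=40, hit_rate=0.50),
]

print(f"DRAM only:   {DRAM_CYCLES} cycles per access")
print(f"With caches: {amat(hierarchy, DRAM_CYCLES):.1f} cycles per access")
```

With these made-up numbers the average falls to about 12.8 cycles per access, even though half of the accesses that reach the L3 still go all the way to DRAM.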

A modern CPU core will predict what data it needs in advance, bring it from DRAM into its caches, and then the core can grab it a lot faster when it needs it. Once a cache line is used, it is often 'evicted' from the closest level of cache (L1) to the next level up (L2), or, if that L2 cache is full, the oldest cache line in the L2 will be evicted to an L3 cache to make room. This means that if that data line is ever needed again, it isn't too far away.
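The eviction cascade described above can be sketched as a toy model. This is a simplification under stated assumptions: each level is fully associative with true LRU replacement, the levels are exclusive (a line lives in only one level at a time), and prefetching is not modeled. Real designs are set-associative, usually use pseudo-LRU, and mix inclusive, exclusive, and non-inclusive policies. The class name `ToyHierarchy` is hypothetical.

```python
from collections import OrderedDict


class ToyHierarchy:
    """Fully associative, LRU, exclusive cache levels (a simplification).

    On a fill into L1, the least recently used L1 line is evicted into L2;
    if L2 is then over capacity, its oldest line spills into L3, and so on.
    Lines evicted from the last level are simply dropped (back to DRAM).
    """

    def __init__(self, capacities):
        # capacities: lines per level, innermost (L1) first
        self.levels = [OrderedDict() for _ in capacities]
        self.capacities = list(capacities)

    def _insert(self, index, line):
        # Place `line` at level `index` as most recently used, cascading
        # any overflow outward one level at a time.
        while index < len(self.levels):
            level = self.levels[index]
            level[line] = True
            level.move_to_end(line)
            if len(level) <= self.capacities[index]:
                return
            line, _ = level.popitem(last=False)  # evict the oldest line
            index += 1
        # Fell off the last level: the line now lives only in DRAM.

    def access(self, line):
        """Return where the line was found: 'L1', 'L2', ... or 'DRAM'."""
        for i, level in enumerate(self.levels):
            if line in level:
                if i == 0:
                    level.move_to_end(line)  # refresh LRU position
                    return "L1"
                del level[line]  # exclusive: pull it back into L1
                self._insert(0, line)
                return f"L{i + 1}"
        self._insert(0, line)
        return "DRAM"


h = ToyHierarchy([2, 2, 4])  # tiny capacities so evictions happen quickly
trace = ["A", "B", "C", "D", "E", "A", "D"]
result = [h.access(x) for x in trace]
print(result)
```

The first five accesses all come from DRAM and push older lines outward; the re-use of `A` is then served from L3 and `D` from L2 instead of from main memory.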

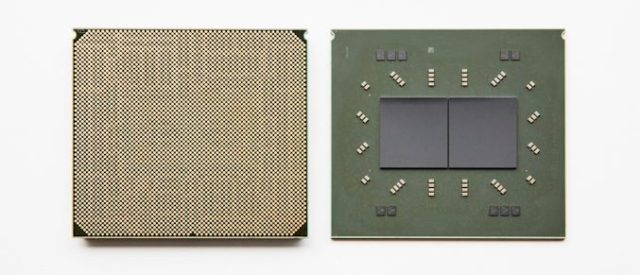

An example of L1, L2, and a shared L3 on AMD's first-generation Zen processors

There is also the matter of private and shared caches. A modern processor design has multiple cores, and inside those cores will be at least one private cache (the L1) that only that core has access to. Above that, a cache may be either a private cache still local to the core, or a shared cache, which any core can use. An Intel Coffee Lake processor, for example, has eight cores, and each core has a 256 KB private L2 cache, but chip-wide there is a 16 MB L3 shared between all eight cores. This means that if a single core wants to, it can keep evicting data from its smaller L2 into the large L3 and have a pool of resources if that data needs to be reused. Not only this, but if a second core needs some of that data as well, it can find it in the shared L3 cache without the data having to be written out to main memory and fetched from there. To complicate matters, a 'shared' cache isn't necessarily shared between all cores; it might only be shared between a specific few.
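The benefit of a shared last-level cache can be shown with a second toy model: each core gets a private LRU L2, all cores share one LRU L3, and L2 victims spill into the L3. The L1 is omitted, coherence traffic is ignored, and the class names and capacities are hypothetical. For scale, with 64-byte lines the Coffee Lake figures above work out to 4,096 lines per private L2 and 262,144 lines in the shared L3; the demo uses far smaller sizes so the effect shows up in a few accesses.

```python
from collections import OrderedDict


class LRU:
    """A single fully associative cache with least-recently-used replacement."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()

    def lookup(self, line):
        if line in self.lines:
            self.lines.move_to_end(line)  # mark as most recently used
            return True
        return False

    def insert(self, line):
        """Insert as most recently used; return the evicted line, if any."""
        self.lines[line] = True
        self.lines.move_to_end(line)
        if len(self.lines) > self.capacity:
            victim, _ = self.lines.popitem(last=False)
            return victim
        return None


class Chip:
    """Private L2 per core, one L3 shared by all cores (hypothetical sizes)."""

    def __init__(self, cores, l2_lines, l3_lines):
        self.l2 = [LRU(l2_lines) for _ in range(cores)]
        self.l3 = LRU(l3_lines)

    def access(self, core, line):
        if self.l2[core].lookup(line):
            return "private L2"
        source = "shared L3" if self.l3.lookup(line) else "DRAM"
        victim = self.l2[core].insert(line)
        if victim is not None:
            self.l3.insert(victim)  # L2 victims spill into the shared L3
        return source


chip = Chip(cores=8, l2_lines=2, l3_lines=16)
core0 = [chip.access(0, x) for x in ["X", "Y", "Z"]]  # X is pushed out to L3
core1 = chip.access(1, "X")  # a different core finds X in the shared L3
print(core0, core1)
```

Core 0's third fill evicts `X` from its private L2 into the shared L3, so when core 1 asks for `X` it is served on-chip rather than from DRAM.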

The end result is that caches help reduce time to execution, and bring in more data from main memory in case it is needed, or as it is needed.

Tradeoffs

With that in mind, you might ask why we don't see 1 GB L1 or L2 caches on a processor. It's a perfectly valid question. There are a number of elements at play here, involving die area, utility, and latency.

Die area is the easy one to tackle first: ultimately there may only be a defined space for each cache structure. When you design a core in silicon, there may be a best way to lay out the components of the core to get the fastest critical path. But the cache, especially the L1 cache, has to be close to where the data is needed. Designing that layout with a 4 KB L1 cache in mind is going to be very different from designing it for a large 128 KB L1 cache. So there is a tradeoff there. Beyond the L1, the L2 cache is sometimes a big consumer of die space, and while it (usually) isn't as constrained by the rest of the core design, it still has to be balanced against what else is needed on the chip. Any large shared cache, whether it ends up as a level 2 cache or a level 3 cache, can often be the biggest…