eWEEK content and product recommendations are editorially independent. We may make money when you click on links to our partners.

ChatGPT MD?

Get this: A Reddit user just credited ChatGPT with probably saving his wife’s life.

After a cyst removal, she was feeling feverish but told him she wanted to “wait it out.”

The husband casually plugged all the details into ChatGPT (as he often did) and was surprised to see it urge them to get to the ER, ASAP.

He said GPT was usually much more chill, so the urgency came as a bit of a shock.

And guess what? It was right.

She had developed sepsis.

Naturally, the comments exploded with similar stories:

AI catching blood clots, diagnosing gallbladder issues, even spotting rare conditions that had stumped 17 different doctors. It was honestly kind of amazing to read?? And by amazing, we mean kiiiinda hard to believe.

Also, before we go any further, we should state for the record:

None of this is medical advice, nor should it be construed as an endorsement of using AI for your healthcare. You should also probably look into how AI companies hold onto your data, and be aware that they may not delete your chats when they say they do.

Now, before you go designate ChatGPT as your new primary care physician, listen to this:

A major new Oxford study with ~1,300 participants revealed an important fact about AI medical advice: people using AI chatbots performed worse than those just using Google.

The Oxford study tested GPT-4o, Llama 3, and Command R+ on medical scenarios with real people.

Here’s what they discovered

When the AIs worked alone, they scored 90-99% accuracy in identifying conditions.

But when the AIs were paired with humans, users could identify relevant conditions only 34.5% of the time, significantly worse than the 47% achieved by people just using Google and their own knowledge.

The breakdown? Users provide incomplete information, can’t distinguish good AI suggestions from bad ones, and ignore correct recommendations even when the AI gets it right.

The Oxford study exposes a critical flaw in how we evaluate AI

Standard medical benchmarks showed 80%+ accuracy, while real-world performance with the same AI was below 20% in some cases.

The AIs that scored 99% accuracy when tested alone completely failed when real people tried to use them.

Another comprehensive 2025 meta-analysis of 83 studies found that generative AI models have an overall diagnostic accuracy of just 52.1%, performing no better than non-expert physicians but significantly worse than expert physicians.

The data on ChatGPT’s medical accuracy is all over the map… from 49% accuracy in one study to outperforming emergency department physicians in another.

But the Oxford paper pointed out that the real issue isn’t medical knowledge; it’s people skills.

As one medical expert put it: “LLMs have the knowledge but lack social skills.”

In one sense, the bottleneck isn’t medical expertise but human-computer interaction design.

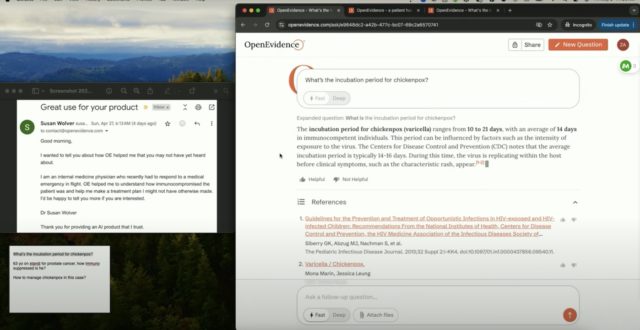

People are already using ChatGPT as a “frontline triage tool” and acting only when the AI says “see doctor ASAP.” But average non-doctors struggle to communicate effectively, even in fake scenarios where they have no reason to lie or withhold information.

This mirrors broader AI development patterns. Two years ago, “prompt engineering” was hyped across tech companies, then disappeared as models adapted to typical user questions.

But this hasn’t translated to medical conversations with non-professionals, where “typically do X” instructions fail when circumstances are fuzzy.

What about reasoning models (o1, o3, etc.)?

Recent studies of newer models like GPT-4o and ChatGPT o1 show improved performance, with newer models reaching 60% accuracy compared to GPT-3.5’s 47.8% for…
