To date, most of the new AI hardware coming into the market has been a "purchase necessary" affair. Any business looking to go down the route of specialised AI hardware has to get hold of a test system, see how easy it is to migrate its workflow, and then work out the cost, effort, and future prospects of committing to that route, if that is possible at all. Most AI startups are flush enough with VC funding that they are willing to put in the leg work, hoping to snag a big customer at some point to make the business profitable. One simple answer would be to offer the hardware in the cloud, but it takes a lot for a Cloud Service Provider (CSP) to bite and offer that hardware as an option to its customers. Today's announcement between Cerebras and Cirrascale is that, as a CSP, Cirrascale will begin to offer wafer-scale instances based on Cerebras' WSE2.

Cerebras WSE2 and CS-2

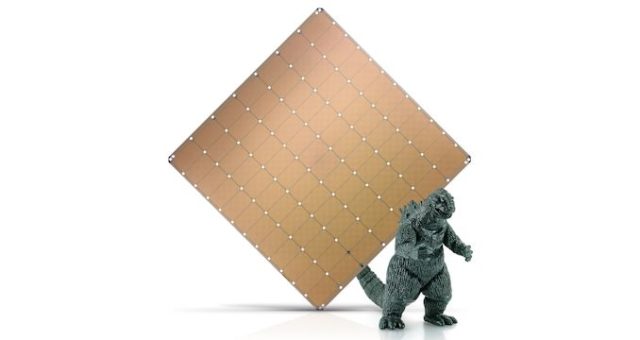

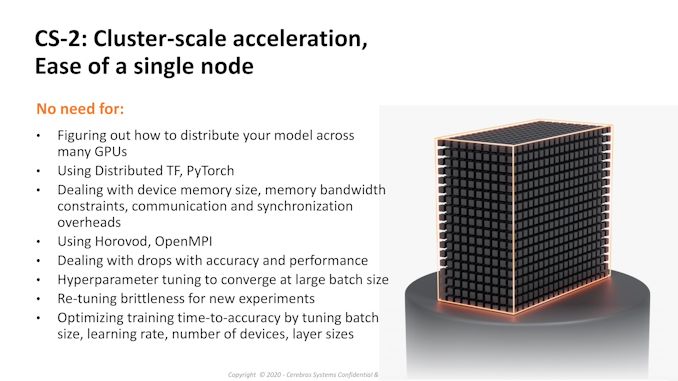

The Cerebras Wafer Scale Engine 2 is a single AI chip the size of a wafer. Using TSMC N7 and a variety of patented technologies relating to cross-reticle connectivity and packaging, a single 46225 mm² chip has over 800,000 cores and 2.6 trillion transistors. With 40 GB of SRAM on board, WSE2 is designed to hold large machine learning models for training without the need to split the training across multiple nodes. Rather than using a distributed TensorFlow or PyTorch model with MPI or synchronization, the aim of WSE2 is to fit the entire model onto a single chip, speeding up communication between the cores and making the software easier to manage as models scale rapidly.
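To put those headline numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above; treating "over 800,000 cores" as exactly 800,000 and 40 GB as decimal gigabytes are assumptions made for the arithmetic, so the per-core SRAM figure is an upper bound rather than a specification.

```python
import math

# Figures quoted in the article
DIE_AREA_MM2 = 46_225
TRANSISTORS = 2.6e12
SRAM_BYTES = 40e9        # assumption: decimal GB
CORES_MIN = 800_000      # assumption: "over 800,000" taken as a lower bound

# Edge length if the die were a perfect square
side_mm = math.sqrt(DIE_AREA_MM2)

# Average transistor density across the whole die
transistors_per_mm2 = TRANSISTORS / DIE_AREA_MM2

# SRAM per core; an upper bound, since the real core count is higher
sram_per_core_kb = SRAM_BYTES / CORES_MIN / 1e3

print(f"Equivalent square edge: {side_mm:.0f} mm")
print(f"Transistor density:     {transistors_per_mm2 / 1e6:.1f} million per mm2")
print(f"SRAM per core (max):    {sram_per_core_kb:.0f} kB")
```

On these assumptions the die is equivalent to a 215 mm square, with roughly 56 million transistors per mm² and at most about 50 kB of SRAM alongside each core.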

The WSE2 sits at the heart of a CS-2 system, a 15U rack unit with a custom machined aluminium front panel. Connectivity comes through 12 x 100 gigabit Ethernet ports, and the chip inside uses a custom packaging and water cooling system with redundancy. A single chip is rated at 14 kW typical and 23 kW peak, although there are 12 x 4 kW power supplies inside. Current customers of CS-2 units include national laboratories, supercomputing centers, pharmacology, biotechnology, the military, and other intelligence services. At a cost of several million each, it is a big bite to take, hence today's announcement.
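The gap between the chip's rated power and the installed power supplies can be sketched with some simple arithmetic. The redundancy scheme is not described here, so the sketch below assumes (hypothetically) that the supplies share load evenly and can each deliver their full 4 kW rating.

```python
import math

# Figures quoted in the article
PSU_COUNT = 12
PSU_KW = 4
TYPICAL_KW = 14
PEAK_KW = 23
ETH_PORTS = 12
ETH_GBPS = 100

# Total installed power supply capacity
installed_kw = PSU_COUNT * PSU_KW

# Assumption: even load sharing, each supply usable up to its full rating
psus_needed_at_peak = math.ceil(PEAK_KW / PSU_KW)
spare_psus_at_peak = PSU_COUNT - psus_needed_at_peak

# Headroom of installed capacity over the chip's peak rating
headroom_ratio = installed_kw / PEAK_KW

# Aggregate Ethernet bandwidth across all ports
aggregate_tbps = ETH_PORTS * ETH_GBPS / 1000

print(f"Installed PSU capacity: {installed_kw} kW")
print(f"PSUs needed at peak:    {psus_needed_at_peak} of {PSU_COUNT}")
print(f"Headroom over peak:     {headroom_ratio:.2f}x")
print(f"Aggregate Ethernet:     {aggregate_tbps} Tb/s")
```

Under those assumptions, 48 kW of supply capacity is a little over twice the chip's 23 kW peak, which would be consistent with a fully redundant power design, though the figures above only cover the chip itself and not the rest of the system.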

Cerebras x Cirrascale: WSE2 In The Cloud

Today's announcement is that Cirrascale, a cloud services provider specializing in GPU clouds for AI and machine learning, will deploy a CS-2 system at its facility in Santa Clara. It will be offered to customers as a whole-system instance, rather than partitioned as a CPU or GPU system might be, on the basis that the sort of customers interested in a CS-2 will have large models for which a portion of a CS-2 isn't enough. Cerebras CEO Andrew Feldman explained that CS-2 customers know that their workloads scale to so many GPUs that they need a different avenue to get their models to fit on a single system.

Currently this is only a single system, and rather than hosting multiple users at once, Cirrascale will offer it on a first-come, first-served basis. Normally a single CS-2 system costs several million to purchase, whereas cloud rental at Cirrascale will run to $60k a week or $180k a month, with further discounts for longer terms. The minimum rental time is a week, and if a customer wishes, their instance data can be stored locally by Cirrascale for a future rental window.
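For a rough sense of how those rental rates compare, the sketch below works through the arithmetic. The weekly and monthly rates are the ones quoted above; the $3 million purchase price is purely a hypothetical stand-in for "several million", and the comparison ignores power, cooling, staffing, and any longer-term discounts.

```python
# Rates quoted in the article
WEEKLY_RATE = 60_000
MONTHLY_RATE = 180_000

# Hypothetical: the article only says "several million"
ASSUMED_PURCHASE_PRICE = 3_000_000

# What a month would cost if paid week by week (52 weeks / 12 months)
weeks_per_month = 52 / 12
monthly_at_weekly_rate = WEEKLY_RATE * weeks_per_month

# Effective discount for committing to a month
monthly_discount = 1 - MONTHLY_RATE / monthly_at_weekly_rate

# Months of rental that would equal the assumed purchase price
breakeven_months = ASSUMED_PURCHASE_PRICE / MONTHLY_RATE

print(f"Month at weekly rate: ${monthly_at_weekly_rate:,.0f}")
print(f"Monthly discount:     {monthly_discount:.0%}")
print(f"Break-even vs. buy:   {breakeven_months:.1f} months")
```

On these assumptions the monthly rate works out to roughly a 31% discount over paying week by week, and it would take around 17 months of continuous rental to match a $3 million purchase price.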

Cirrascale's CEO PJ Go explained that some of the interest they have had in the system comes from large financial services looking to analyze their internal databases or customer services, as well as from pharmacology, and that these companies tend to sign long contracts once they have found the right solution for their extended ongoing workflow.

Those who are interested in the system will be able to use Cirrascale's…