The intensive demands of artificial intelligence, machine learning and deep learning applications challenge data center performance, reliability and scalability, especially as architects mimic the design of public clouds to simplify the transition to hybrid cloud and on-premises deployments.

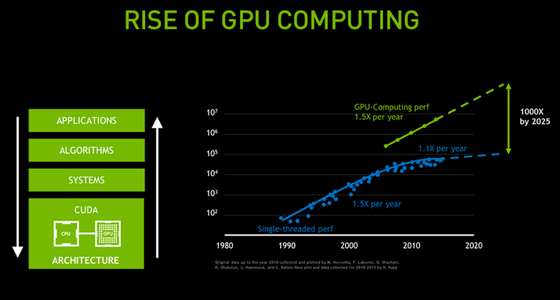

GPU (graphics processing unit) servers are now common, and the ecosystem around GPU computing is rapidly evolving to increase the efficiency and scalability of GPU workloads. Yet there are tricks to maximizing utilization of the more costly GPUs while avoiding potential choke points in storage and networking.

In this edition of eWEEK Data Points, Sven Breuner, field CTO, and Kirill Shoikhet, chief architect, at Excelero, offer nine best practices for preparing data centers for AI, ML and DL.

Data Point No. 1: Know your target system performance, ROI and scalability plans.

This is so they can dovetail with data center goals. Most organizations start with a small initial budget and small training data sets, and prepare the infrastructure for seamless and rapid system growth as AI becomes a valuable part of the core business. The chosen hardware and software infrastructure needs to be built for flexible scale-out to avoid disruptive changes with every new growth phase. Close collaboration between data scientists and system administrators is critical to learn about the performance requirements and to understand how the infrastructure might need to evolve over time.

Data Point No. 2: Evaluate clustering multiple GPU systems, either now or in the future.

Having multiple GPUs inside a single server enables efficient data sharing and communication inside the system, as well as cost-effectiveness, with reference designs presuming future clustered use and supporting up to 16 GPUs in a single server. A multi-GPU server needs to be able to read incoming data at a very high rate to keep the GPUs busy, which means it needs an ultra-high-speed network connection all the way through to the storage system holding the training database. However, at some point a single server will no longer be enough to work through the growing training database in a reasonable time, so building shared storage infrastructure into the design will make it easier to add GPU servers as AI/ML/DL use expands.
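The sizing argument above can be made concrete with some back-of-the-envelope arithmetic. The following is a minimal sketch, not a method from the article: it assumes that input throughput is the bottleneck, that every GPU consumes a fixed number of samples per second, and that each server can ingest at most a fixed read rate. The function names and all workload numbers (2,000 samples/s per GPU, 100 KB samples, 50 TB and 200 TB data sets) are hypothetical.

```python
import math

# All figures are hypothetical illustrations; units are decimal (1 GB = 1e9 bytes).

def required_read_bandwidth_gbps(num_gpus: int, samples_per_sec_per_gpu: float,
                                 bytes_per_sample: int) -> float:
    """Storage read rate (GB/s) one server needs so that no GPU waits on input data."""
    return num_gpus * samples_per_sec_per_gpu * bytes_per_sample / 1e9


def epoch_hours(dataset_bytes: float, read_gbps: float) -> float:
    """Hours needed to stream the whole training set once at the given read rate."""
    return dataset_bytes / (read_gbps * 1e9) / 3600


def servers_needed(dataset_bytes: float, target_epoch_hours: float,
                   per_server_gbps: float) -> int:
    """GPU servers needed to finish one epoch within the target time,
    assuming each server is limited by its own ingest rate and the
    shared storage can serve all servers in parallel."""
    needed_gbps = dataset_bytes / (target_epoch_hours * 3600) / 1e9
    return math.ceil(needed_gbps / per_server_gbps)


if __name__ == "__main__":
    per_server = required_read_bandwidth_gbps(16, 2000, 100_000)
    print(per_server)                              # about 3.2 GB/s for a 16-GPU server
    print(epoch_hours(50e12, per_server))          # about 4.3 hours for a 50 TB data set
    print(servers_needed(200e12, 4, per_server))   # 5 servers for 200 TB in 4 hours
```

Once the training set grows to the point where `servers_needed` exceeds one, the storage has to be shared across servers, which is the reason the article gives for designing it in from the start.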

Data Point No. 3: Assess chokepoints across the AI workflow phases.

The data center infrastructure needs to be able to handle all phases of the AI workflow at the same time. A solid concept for resource scheduling and sharing is critical for cost-effective data centers: while one group of data scientists receives new data that must be ingested and prepared, others will be training on the data they already have, and elsewhere, previously generated models will be running in production. Kubernetes has become a popular solution to this problem, making cloud technology readily available on premises and making hybrid deployments feasible.
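To illustrate what "resource scheduling and sharing" across workflow phases means, here is a toy sketch of a shared GPU pool. It is not how Kubernetes works internally and does not use any Kubernetes API; the `Job` type, the `schedule` function and the priority policy (production inference first, then training, then ingest) are all hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Job:
    priority: int   # lower value is scheduled first (hypothetical policy)
    name: str
    phase: str      # "ingest", "train" or "inference"
    gpus: int       # GPUs requested from the shared pool


def schedule(jobs: list[Job], total_gpus: int) -> tuple[list[str], list[str]]:
    """Greedily admit jobs in priority order until the shared GPU pool runs out.

    Jobs that do not fit are queued, but smaller lower-priority jobs may still
    use leftover GPUs (simple backfilling). Returns (running, queued) job names.
    """
    free = total_gpus
    running: list[str] = []
    queued: list[str] = []
    # sorted() is stable, so jobs with equal priority keep their submission order.
    for job in sorted(jobs, key=lambda j: j.priority):
        if job.gpus <= free:
            free -= job.gpus
            running.append(job.name)
        else:
            queued.append(job.name)
    return running, queued


if __name__ == "__main__":
    jobs = [
        Job(1, "team-a-train", "train", 4),
        Job(0, "prod-inference", "inference", 1),
        Job(1, "team-b-train", "train", 4),
        Job(2, "team-c-ingest", "ingest", 1),
    ]
    running, queued = schedule(jobs, total_gpus=8)
    print("running:", running)  # prod-inference, team-a-train, team-c-ingest
    print("queued:", queued)    # team-b-train waits for GPUs to free up
```

A production scheduler adds preemption, quotas and fairness between teams, but the core trade-off is the same: one fixed pool of GPUs serving ingest, training and inference at the same time.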

Data Point No. 4: Review strategies for optimizing GPU utilization and performance.

The computationally intensive nature of many AI/ML/DL applications makes GPU-based servers a common choice. However, while GPUs are efficient at loading…