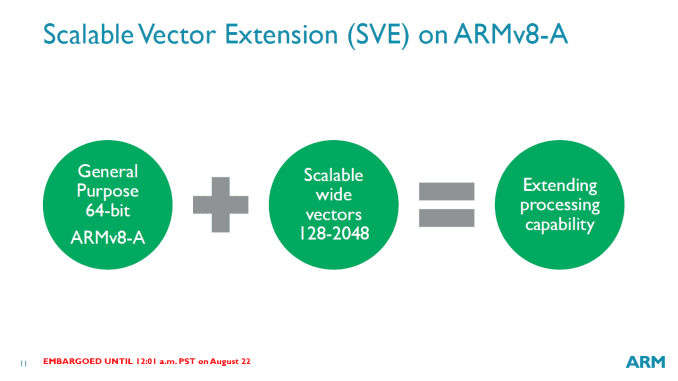

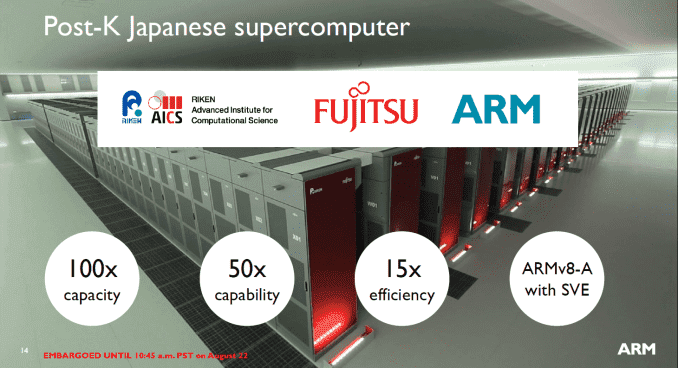

Today ARM is announcing an update to its line of architecture license products. With the goal of moving ARM further into servers, the data center, and high-performance computing (HPC), the new license add-on tackles a fundamental data center and HPC issue: vector compute. ARMv8-A with Scalable Vector Extensions won’t be part of any ARM microarchitecture license today, but for the semiconductor companies that build their own cores on the instruction set, it could help ARM move up into the HPC market. Fujitsu is the first public licensee on board, with plans to use ARMv8-A cores with SVE in RIKEN's Post-K supercomputer in 2020.

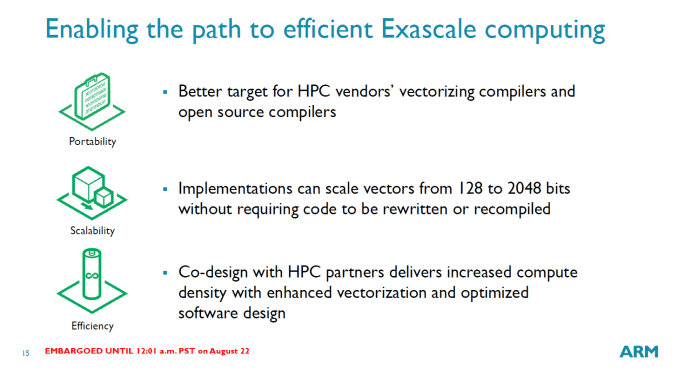

Scalable Vector Extensions (SVE) will be a flexible addition to the ISA, supporting vector lengths from 128 bits to 2048 bits in 128-bit increments. The key point is that SVE is vector-length agnostic. Code is not compiled for a fixed width. Instead, it discovers the hardware's vector length at run time and uses predication to mask off leftover elements. The same binary therefore runs unmodified on a core with a 128-bit, 512-bit or 2048-bit SVE implementation, and it processes more elements per instruction on wider hardware without being rewritten or recompiled. ARM's purpose here is to move the vector-width problem away from software and into hardware.
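To illustrate the scalable idea, here is a plain-Python simulation of a vector-length-agnostic loop. It is a sketch, not real SVE code. The parameter `vl_bits` stands in for the hardware vector length, `whilelt` loosely mimics SVE's WHILELT predicate instruction, and `daxpy_vla` is a hypothetical example kernel (y = a*x + y on 64-bit values). The loop body is identical for every width; only the number of iterations changes.

```python
# Simulation of an SVE-style vector-length-agnostic (VLA) loop in plain Python.
# Assumptions: 64-bit elements; vl_bits stands in for the hardware vector length,
# which real SVE code reads at run time instead of fixing at compile time.

def lanes_for(vl_bits: int, elem_bits: int = 64) -> int:
    """Elements held by one vector register (similar in spirit to SVE's CNTD)."""
    # As originally announced: multiples of 128 bits, from 128 to 2048.
    if vl_bits % 128 != 0 or not 128 <= vl_bits <= 2048:
        raise ValueError("vector length must be a multiple of 128 bits, 128..2048")
    return vl_bits // elem_bits


def whilelt(i: int, n: int, lanes: int) -> list[bool]:
    """Predicate where lane k is active while i + k < n (modelled on WHILELT)."""
    return [i + k < n for k in range(lanes)]


def daxpy_vla(a: float, x: list[float], y: list[float],
              vl_bits: int) -> tuple[list[float], int]:
    """Compute a*x + y; return the result and the number of vector iterations."""
    lanes = lanes_for(vl_bits)
    n = len(x)
    out = list(y)
    i = 0
    iterations = 0
    while i < n:
        pred = whilelt(i, n, lanes)
        for k, active in enumerate(pred):
            # Inactive lanes are masked off, so no separate scalar tail loop is needed.
            if active:
                out[i + k] = a * x[i + k] + out[i + k]
        i += lanes
        iterations += 1
    return out, iterations


if __name__ == "__main__":
    x = [float(v) for v in range(10)]
    y = [1.0] * 10
    for vl in (128, 512, 2048):
        result, iters = daxpy_vla(2.0, x, y, vl)
        print(vl, iters, result)
```

With ten elements, the 128-bit setting takes five iterations, 512-bit takes two, and 2048-bit takes one, and all three produce the same result from the same loop.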

This differs from NEON, which works on fixed 64-bit and 128-bit vectors. ARM will soon submit patches to GCC and LLVM to support auto-vectorization for SVE, either through directives or by detecting suitable code sequences.
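For contrast, a fixed-width NEON-style loop bakes the vector width into the code and needs a scalar epilogue for leftover elements. The following hypothetical Python sketch (the name `daxpy_fixed_width` is illustrative, not a real API) models that pattern for a 128-bit width with 64-bit elements, i.e. two lanes per vector operation:

```python
# Simulation of a fixed-width (NEON-style) vectorized loop in plain Python.
# Assumption: the width is fixed at compile time (128 bits = two 64-bit lanes),
# so running the same code on wider hardware would not use the extra width.

def daxpy_fixed_width(a: float, x: list[float], y: list[float],
                      vl_bits: int = 128) -> tuple[list[float], int, int]:
    """Compute a*x + y; return result, vector iterations, scalar tail iterations."""
    lanes = vl_bits // 64
    n = len(x)
    out = list(y)
    main_end = n - n % lanes  # last index covered by whole vectors

    vector_iters = 0
    for i in range(0, main_end, lanes):
        for k in range(lanes):  # one full-width vector operation
            out[i + k] = a * x[i + k] + out[i + k]
        vector_iters += 1

    scalar_iters = 0
    for i in range(main_end, n):  # scalar epilogue for the leftover elements
        out[i] = a * x[i] + out[i]
        scalar_iters += 1

    return out, vector_iters, scalar_iters


if __name__ == "__main__":
    x = [float(v) for v in range(11)]
    y = [0.5] * 11
    print(daxpy_fixed_width(3.0, x, y))
```

With eleven elements the loop runs five two-lane vector iterations plus one scalar iteration. Predication removes that epilogue, and run-time discovery of the vector length removes the hard-coded width.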

Performance measurements in ARM's labs already show significant speedups on certain data sets, and ARM expects more code paths to take advantage of SVE over time. ARM is encouraging architecture licensees that need fine-grained HPC control to adopt SVE in both hardware and software: because the instructions are scalable, both sides should benefit as the platform evolves.