In a packed presentation kicking off this year’s Computex trade show, AMD CEO Dr. Lisa Su spent plenty of time on the subject of AI. And while the bulk of that focus was on AMD’s impending client products, the company is also currently enjoying the rapid growth of their Instinct lineup of accelerators, with the MI300 continuing to break sales projections and growth records quarter after quarter. It’s no surprise then that AMD is looking to move quickly in the AI accelerator space, both to capitalize on the market opportunities amidst the current AI mania, as well as to stay competitive with the many chipmakers large and small who are also trying to stake a claim in the space.

To that end, as part of this evening’s announcements, AMD laid out their roadmap for their Instinct product lineup for both the short and long term, with new products and new architectures in development to carry AMD through 2026 and beyond.

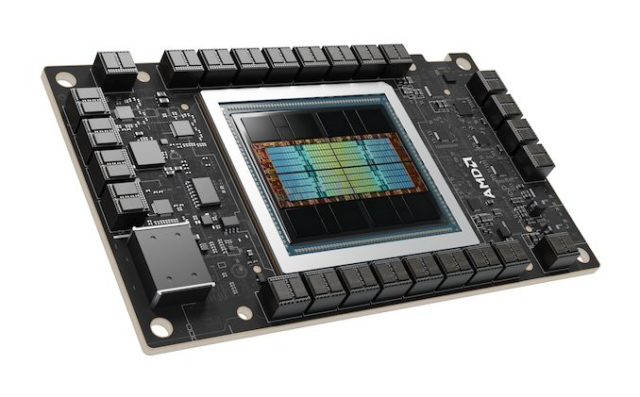

On the product side of matters, AMD is announcing a new Instinct accelerator, the HBM3E-equipped MI325X. Based on the same computational silicon as the company’s MI300X accelerator, the MI325X swaps out HBM3 memory for faster and denser HBM3E, allowing AMD to produce accelerators with up to 288GB of memory, and local memory bandwidths hitting 6TB/second.

Meanwhile, AMD also showcased their first new CDNA architecture/Instinct product roadmap in two years, laying out their plans through 2026. Over the next two years AMD will be moving very quickly indeed, launching two new CDNA architectures and associated Instinct products in 2025 and 2026, respectively. The CDNA 4-powered MI350 series will be released in 2025, and that will be followed up by the even more ambitious MI400 series in 2026, which will be based on the CDNA “Next” architecture.

AMD Instinct MI325X: Maximum Memory Monster

Starting things off, let’s take a look at AMD’s updated MI325X accelerator. As with several other accelerator vendors (e.g. NVIDIA’s H200), AMD is releasing a mid-generation refresh of their MI300X accelerator to take advantage of the availability of newer HBM3E memory. Itself a mid-generation update to the HBM standard, HBM3E offers both higher clockspeeds and greater memory densities.

Using 12-Hi stacks, all three of the major memory vendors are (or will be) shipping 36GB stacks of memory, which is 50% greater capacity than their current top-of-the-line 24GB HBM3 stacks. For the eight-stack MI300 series, this will bring the maximum memory capacity from 192GB to a whopping 288GB on a single accelerator.
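The capacity figures follow directly from the stack counts and sizes quoted above. As a quick sanity check, here is a minimal Python sketch of that arithmetic; the function and constant names are illustrative only and do not come from AMD.

```python
# Capacity arithmetic using only the figures quoted in the article.
STACKS_PER_ACCELERATOR = 8  # the MI300 series uses eight HBM stacks


def total_capacity_gb(stack_gb: int, stacks: int = STACKS_PER_ACCELERATOR) -> int:
    """Total on-package memory in GB for a given per-stack capacity."""
    return stack_gb * stacks


hbm3_total = total_capacity_gb(24)   # 24GB HBM3 stacks
hbm3e_total = total_capacity_gb(36)  # 36GB 12-Hi HBM3E stacks
uplift = hbm3e_total / hbm3_total - 1

print(f"HBM3: {hbm3_total}GB, HBM3E: {hbm3e_total}GB, uplift: {uplift:.0%}")
# prints "HBM3: 192GB, HBM3E: 288GB, uplift: 50%"
```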

HBM3E brings faster memory clockspeeds as well. Micron and SK hynix are expecting to eventually sell stacks that clock as high as 9.2Gbps/pin, and Samsung wants to go to 9.8Gbps/pin, over 50% faster than the 6.4Gbps data rate of regular HBM3 memory. With that said, it remains to be seen if and when we’ll see products using memory running at those speeds (we’ve yet to see an accelerator run HBM3 at 6.4Gbps), but regardless, HBM3E will afford chip vendors more badly-needed memory bandwidth.
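To put the per-pin data rates in context, the sketch below converts them into aggregate bandwidth and works backwards from the 6TB/second figure. It rests on two assumptions not stated in the article: a 1024-bit interface per HBM stack, and decimal units (1TB = 1000GB). The helper names are hypothetical.

```python
# Rough bandwidth arithmetic; a sketch under stated assumptions, not AMD's figures.
BUS_WIDTH_BITS = 1024  # assumption: per-stack interface width (not given in the article)
STACKS = 8             # the MI300 series uses eight HBM stacks
HBM3_RATE_GBPS = 6.4   # regular HBM3 data rate quoted in the article


def stack_bandwidth_gbs(pin_rate_gbps: float, bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Peak bandwidth of a single stack in GB/s (bits per second divided by 8)."""
    return pin_rate_gbps * bus_width_bits / 8


def pin_rate_for_total(total_tbs: float, stacks: int = STACKS,
                       bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Per-pin data rate in Gbps needed to reach a total bandwidth given in TB/s."""
    return total_tbs * 1000 * 8 / (stacks * bus_width_bits)


for rate in (6.4, 9.2, 9.8):
    speedup = rate / HBM3_RATE_GBPS - 1
    total_tbs = stack_bandwidth_gbs(rate) * STACKS / 1000
    print(f"{rate}Gbps/pin: {speedup:+.0%} vs HBM3, about {total_tbs:.1f}TB/s over {STACKS} stacks")

needed = pin_rate_for_total(6.0)
print(f"6TB/s over {STACKS} stacks needs about {needed:.2f}Gbps/pin")
```

Under these assumptions, 6TB/second works out to a little under 6Gbps/pin, which is below the nominal 6.4Gbps HBM3 rate and well short of the peak speeds the memory vendors are targeting for HBM3E.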

| AMD Instinct Accelerators | MI325X | MI300X | MI250X | MI100 |
|---|---|---|---|---|
| Compute Units | 304 | 304 | 2 x 110 | 120 |
| Matrix Cores | 1216 | 1216 | 2 x 440 | 480 |
| Stream Processors | 19456 | 19456 | 2 x 7040 | 7680 |
| Boost Clock | 2100MHz | 2100MHz | 1700MHz | 1502MHz |
| FP64 Vector | 81.7 TFLOPS | 81.7 TFLOPS | 47.9 TFLOPS | 11.5 TFLOPS |
| FP32 Vector | 163.4 TFLOPS | 163.4 TFLOPS | 47.9 TFLOPS | 23.1 TFLOPS |
| FP64 Matrix | 163.4 TFLOPS | 163.4 TFLOPS | 95.7 TFLOPS | 11.5 TFLOPS |
| FP32 Matrix | 163.4 TFLOPS | 163.4 TFLOPS | 95.7 TFLOPS | 46.1 TFLOPS |
| FP16 Matrix | 1307.4 TFLOPS | 1307.4 TFLOPS | 383 TFLOPS | 184.6 TFLOPS |
| INT8 Matrix | 2614.9 TOPS | 2614.9… | | |