In what has become something of an annual tradition for AMD’s Radeon Technologies Group, their Game Developers Conference Capsaicin & Cream event just wrapped up. Unlike the company’s more outright consumer-facing events such as their major product launches, AMD’s Capsaicin events are focused more on helping the company further their connections with the game development community. This is a group that on the one hand has been banging away on the Graphics Core Next architecture in consoles for a few years now, and on the other hand operates in a world where, in the PC space, NVIDIA still holds 75% of the dGPU market even with AMD’s Polaris-powered gains. As a result, despite the sometimes-playful attitude of AMD at these events, they are part of a serious developer outreach effort for the company.

For this year’s GDC then, AMD had a few announcements in store. It bears repeating that this is a developers’ conference, so most of this is aimed at developers, but even if you’re not making the next Doom it gives everyone a good idea of what AMD’s status is and where they’re going.

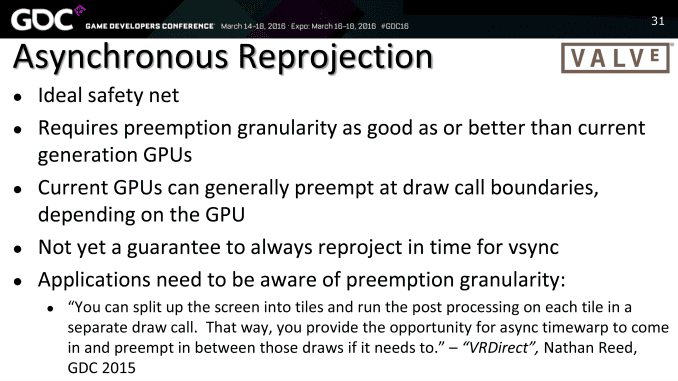

Vive/SteamVR Asynchronous Reprojection Support Coming in March

On the VR front, the company has announced that they are nearly ready to launch GPU support for the Vive/SteamVR’s asynchronous reprojection feature. Analogous to Oculus’s asynchronous timewarp feature, which was announced just under a year ago, asynchronous reprojection is a means of reprojecting a combination of old frame data and new input data to generate a new frame on the fly if a proper new frame will not be ready by the refresh deadline. The idea behind this feature is that rather than redisplaying an old frame and introducing judder to the user – which can make the experience anything from unpleasant to stomach-turning – instead a warped version of the previous frame is generated, based on the latest input data, so that the world still seems to be updating around the user and matching their head motions. It’s not a true solution to a lack of promptly rendered frames, but it can make VR more bearable if a game can’t quite keep up with the 90Hz refresh rate the headset demands.
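To make the deadline logic concrete, here is a minimal sketch of the decision a VR runtime faces at each refresh. It is not the SteamVR or Radeon implementation; the `Frame` type, the `choose_frame` function, and the use of a single yaw angle as a stand-in for the full head pose are all hypothetical simplifications. The only number taken from above is the 90Hz refresh rate, which works out to a budget of roughly 11.1 ms per frame.

```python
from dataclasses import dataclass

REFRESH_HZ = 90                        # headset refresh rate cited above
FRAME_BUDGET_MS = 1000.0 / REFRESH_HZ  # roughly 11.1 ms per refresh


@dataclass
class Frame:
    """Hypothetical record of what ends up on the display."""
    frame_id: int      # which rendered frame the image came from
    yaw_deg: float     # head yaw the displayed image is aligned to
    reprojected: bool  # True if this is a warped copy of an older frame


def choose_frame(last: Frame, new_id: int, render_ms: float,
                 head_yaw_deg: float) -> Frame:
    """Pick what to show at the next refresh (illustrative only)."""
    if render_ms <= FRAME_BUDGET_MS:
        # The new frame made the deadline, so display it as rendered.
        return Frame(new_id, head_yaw_deg, False)
    # The new frame is late: reuse the previous image, but warp it to the
    # latest head pose so the world still tracks the user's motion.
    return Frame(last.frame_id, head_yaw_deg, True)


if __name__ == "__main__":
    last = Frame(frame_id=0, yaw_deg=0.0, reprojected=False)
    # A 14 ms render misses the ~11.1 ms budget, so frame 0 is reprojected.
    print(choose_frame(last, new_id=1, render_ms=14.0, head_yaw_deg=5.0))
```

In a real runtime the warp itself is GPU work that has to be scheduled and finished before the refresh, which is why driver support matters here.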

As to how this relates to AMD, this feature relies rather heavily on the GPU, as the SteamVR runtime and GPU driver need to quickly dispatch and execute the command for reprojection to make sure it gets done in time for the next display refresh. In AMD’s case, they not only want to catch this scenario but improve upon it, by using their asynchronous execution capabilities to get it done sooner. Valve launched this feature back in November, but at the time it was only available on NVIDIA-based video cards. So for AMD Vive owners, this will be a welcome addition. AMD in turn will be enabling this in a future release of their Radeon Software, with a target release date of March.

Forward Rendering Support for Unreal Engine 4

Moving on, since the last GDC AMD has been working on some new deals and partnerships, which they have announced at this year’s Capsaicin event. On the VR front, the company has been working with long-time partner (and everyone’s pal) Epic Games on improving VR support in the Unreal Engine. Being demoed at GDC is a new forward rendering path for Unreal Engine 4.15.

Traditional forward rendering has fallen out of style in recent years as its popular alternative, deferred rendering, allows for cheap screen space effects (think ambient occlusion and the like). The downside to deferred rendering is that it pretty much breaks any form of real anti-aliasing, such as MSAA. This hasn’t been too big of a problem for traditional games, where faux/post-process AA like FXAA can hide the issue enough to please most people. But it’s not good enough for VR; VR needs real, sub-pixel focused AA in order to properly hide jaggies and other aliasing effects on what is perceptually a rather low density display.

By bringing back forward rendering in a performance-viable form, AMD believes they can help solve the AA issue, and in a smarter, smoother manner than hacking MSAA into deferred rendering. The payoff of course being that Unreal Engine remains one of the most widely used game engines out there, so features that they can get into the core engine upstream with Epic are features that become available to developers downstream who are using the engine.

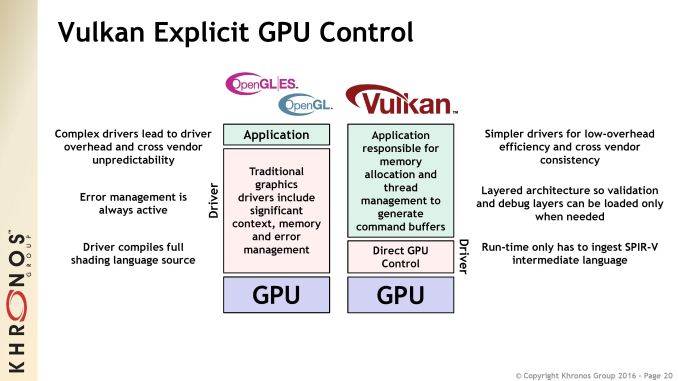

Partnering with Bethesda: Vulkan Everywhere

Meanwhile, the company has also announced that they have inked a major technology and marketing partnership deal with publisher Bethesda. Publisher deals in one form or another are rather common in this industry – with both good and bad outcomes for all involved – however what makes the AMD deal notable is the scale. In what AMD is calling a “first of its kind” deal, the companies aren’t inking a partnership over just one or two games. Rather, they have formed what AMD is presenting as a long-term, deep technology partnership, one much larger than the usual deals.

The biggest focus here for the two companies is on Vulkan, Khronos’s low-level graphics API. Vulkan has been out for just over a year now and due to the long development cycle for games is still finding its footing. The most well-known use right now is as an alternative rendering path for Bethesda/id’s Doom. AMD and Bethesda want to get Vulkan in all of Bethesda’s games in order to leverage the many benefits of low-level graphics APIs we’ve been talking about over the past few years. For AMD this not only stands to improve the performance of games on their graphics cards (though it should be noted, not exclusively), but it also helps to spur the adoption of better multi-threaded rendering code. And AMD has an 8-core processor they’re chomping at the bit to start selling in a few days…

From a deal-making perspective, deals like this typically mean that AMD will be providing Bethesda’s studios with engineers and other resources to help integrate Vulkan support and whatever other features the two entities want to add to the resulting games. Not talked about in much detail at the Capsaicin event was the marketing side of the equation. I’d expect that AMD has a lock on including Bethesda games as part of promotional game bundles, but I’m curious whether there will be anything else to it or not.

AMD Vega GPUs to Power LiquidSky Game Streaming Service

Finally, while AMD isn’t releasing any extensive new details about their forthcoming Vega GPUs at the show (sorry gang), they are announcing that they’ve already landed a deal with a commercial buyer to use these forthcoming GPUs. LiquidSky, a game service provider who is currently building and beta-testing an Internet-based game streaming service, is teaming up with AMD to use their Vega GPUs with their service.

The industry as a whole is still working to figure out the technology and the economics of gaming-on-demand services, but a commonly identified component is using virtualization and other means to share hardware over multiple users to keep costs down. And while this is generally considered a solved issue for server compute tasks – one needs only to see the likes of Microsoft Azure and Amazon Web Services – it’s still a work in progress for game streaming, where the inclusion of GPUs and the overall need for consistent performance coupled with real-time responsiveness adds a couple of wrinkles. AMD believes they have a good solution in the form of their GPUs’ Single Root Input/Output Virtualization (SR-IOV) support.

From what I’ve been told, besides their Vega GPUs being a good fit for their high performance and user-splitting needs, LiquidSky is also looking to take advantage of AMD’s Radeon Virtualized Encode, which is a new Vega-specific feature. Unfortunately AMD isn’t offering a ton of detail on this feature, but from what I’ve been able to gather AMD has implemented an optimized video encoding path for virtualized environments on their GPUs. A game streaming service requires that the contents of upwards of several virtual machines be encoded quickly, so making AMD’s on-board video encoder (VCE) work efficiently with virtualization would be the logical next step.