Data facilities and HPCs utilizing Radeon Instinct accelerators haven’t any use for the GPU’s precise graphics rendering capabilities. And so, at a silicon stage, AMD is eradicating the raster graphics {hardware}, the show and multimedia engines, and different related elements that in any other case take up important quantities of die space. In their place, AMD is including fixed-function tensor compute {hardware}, much like the tensor cores on sure NVIDIA GPUs.

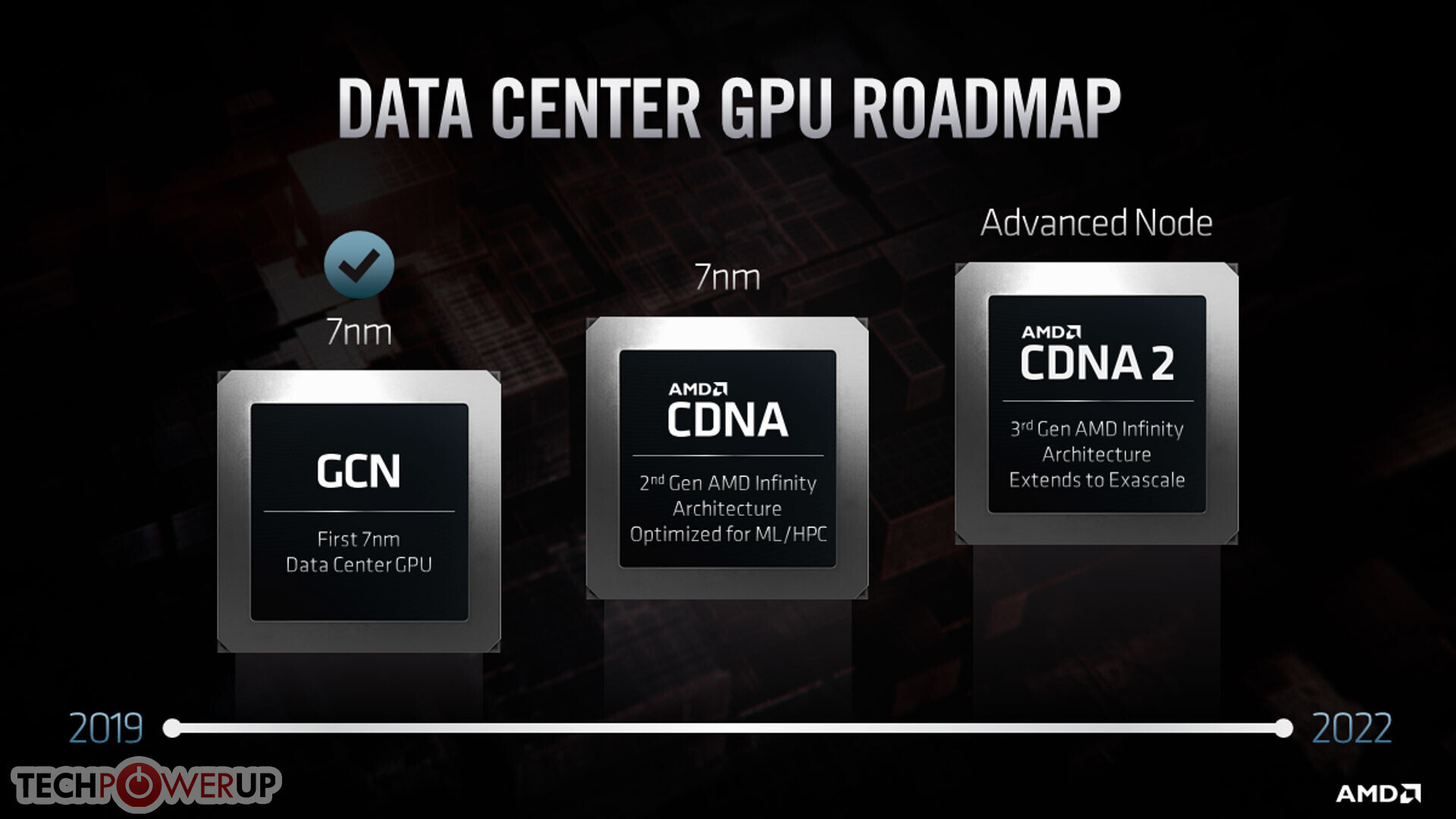

AMD additionally talked about giving its compute GPUs superior HBM2e reminiscence interfaces, Infinity Fabric interconnect along with PCIe, and many others. The firm detailed a quick roadmap of CDNA trying as far into the longer term as 2021-22. The firm’s current-generation compute accelerators are primarily based on the dated “Vega” architectures, and are primarily reconfigured “Vega 20” GPUs that lack tensor {hardware}.

Later this 12 months, the corporate will introduce its first CDNA GPU primarily based on “7 nm” course of, compute unit IPC rivaling RDNA, and tensor {hardware} that accelerates AI DNN constructing and coaching.

Somewhere between 2021 and 2022, AMD will introduce its up to date CDNA2 structure primarily based on an “advanced process” that AMD hasn’t finalized but. The firm is pretty assured that “Zen4” CPU microarchitecture will leverage 5 nm, however hasn’t been clear about the identical for CDNA2 (each launch across the similar time). Besides ramping up IPC, compute items, and different issues, the design focus with CDNA2 will probably be hyper-scalability (the flexibility to scale GPUs throughout huge reminiscence swimming pools spanning 1000’s of nodes). AMD will leverage its third technology Infinity Fabric interconnect and cache-coherent unified reminiscence to perform this.

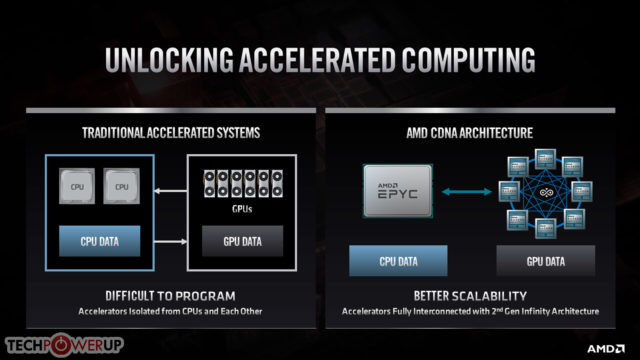

Much like Intel’s Compute eXpress Link (CXL) and PCI-Express gen 5.0, Infinity Fabric 3.Zero will help shared reminiscence swimming pools between CPUs and GPUs, enabling scalability of the sort required by exascale supercomputers such because the US-DoE’s upcoming “El Capitan” and “Frontier.” Cache coherent unified reminiscence reduces pointless data-transfers between the CPU-attached DRAM reminiscence and the GPU-attached HBM. CPU cores will be capable of instantly course of numerous serial-compute phases of a GPU compute operation by instantly speaking to the GPU-attached HBM and never pulling information to its personal foremost reminiscence. This vastly reduces I/O stress. “El Capitan” is an “all-AMD” supercomputer with as much as 2 exaflops (that is 2,000 petaflops or 2 million TFLOPs) peak throughput. It combines AMD EPYC “Genoa” CPUs primarily based on the “Zen4” microarchitecture, with GPUs probably primarily based on CDNA2, and Infinity Fabric 3.Zero dealing with I/O.

Oh the software program facet of issues, AMD’s newest ROCm open-source software program infrastructure will carry CDNA and CPUs collectively, by offering a unified programming mannequin rivaling Intel’s OneAPI and NVIDIA CUDA. A platform-agnostic API suitable with any GPU will probably be mixed with a CUDA to HIP translation layer.