Deep Java Library (DJL) is an open-source library created by Amazon for developing machine learning (ML) and deep learning (DL) models natively in Java while simplifying the use of deep learning frameworks.

I recently used DJL to develop a footwear classification model and found the toolkit intuitive and easy to use; it's obvious a lot of thought went into the design and into how Java developers would use it. DJL APIs abstract the functions commonly used to develop models and orchestrate infrastructure management. I found that the high-level APIs for training, testing, and running inference let me use my knowledge of Java and the ML lifecycle to develop a model in less than an hour with minimal code.

Footwear classification model

The footwear classification model is a multiclass computer vision (CV) model, trained using supervised learning, that classifies footwear into one of four class labels: boots, sandals, shoes, or slippers.
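To make "multiclass" concrete, here is a minimal sketch of the last step such a classifier performs: turning one raw score per class into probabilities (softmax) and picking the most likely label. This is plain Java, not DJL's API; the class name `FootwearLabels`, its methods, and the example scores are hypothetical and only illustrate the idea.

```java
// Illustrative only: not part of DJL. Names and scores are assumptions.
class FootwearLabels {
    // The four class labels used by the footwear classification model.
    static final String[] LABELS = {"boots", "sandals", "shoes", "slippers"};

    // Numerically stable softmax: converts raw scores into probabilities that sum to 1.
    static double[] softmax(double[] scores) {
        if (scores.length == 0) {
            throw new IllegalArgumentException("scores must not be empty");
        }
        double max = scores[0];
        for (double s : scores) {
            max = Math.max(max, s);
        }
        double[] probs = new double[scores.length];
        double sum = 0.0;
        for (int i = 0; i < scores.length; i++) {
            probs[i] = Math.exp(scores[i] - max); // subtract max to avoid overflow
            sum += probs[i];
        }
        for (int i = 0; i < probs.length; i++) {
            probs[i] /= sum;
        }
        return probs;
    }

    // Returns the label whose probability is highest.
    static String predict(double[] scores) {
        if (scores.length != LABELS.length) {
            throw new IllegalArgumentException("expected one score per label");
        }
        double[] probs = softmax(scores);
        int best = 0;
        for (int i = 1; i < probs.length; i++) {
            if (probs[i] > probs[best]) {
                best = i;
            }
        }
        return LABELS[best];
    }

    public static void main(String[] args) {
        // Hypothetical raw scores for one image, in LABELS order.
        double[] scores = {0.2, 2.5, 1.1, -0.3};
        System.out.println(predict(scores)); // prints "sandals"
    }
}
```

A trained model would produce the scores itself; the sketch only shows how four numbers become a single class label.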

About the data

The most important part of developing an accurate ML model is using data from a reputable source. The data source for the footwear classification model is the UT Zappos50K dataset, which is provided by The University of Texas at Austin and is freely available for academic, non-commercial use. The dataset consists of 50,025 labeled catalog images collected from Zappos.com.

Train the footwear classification model

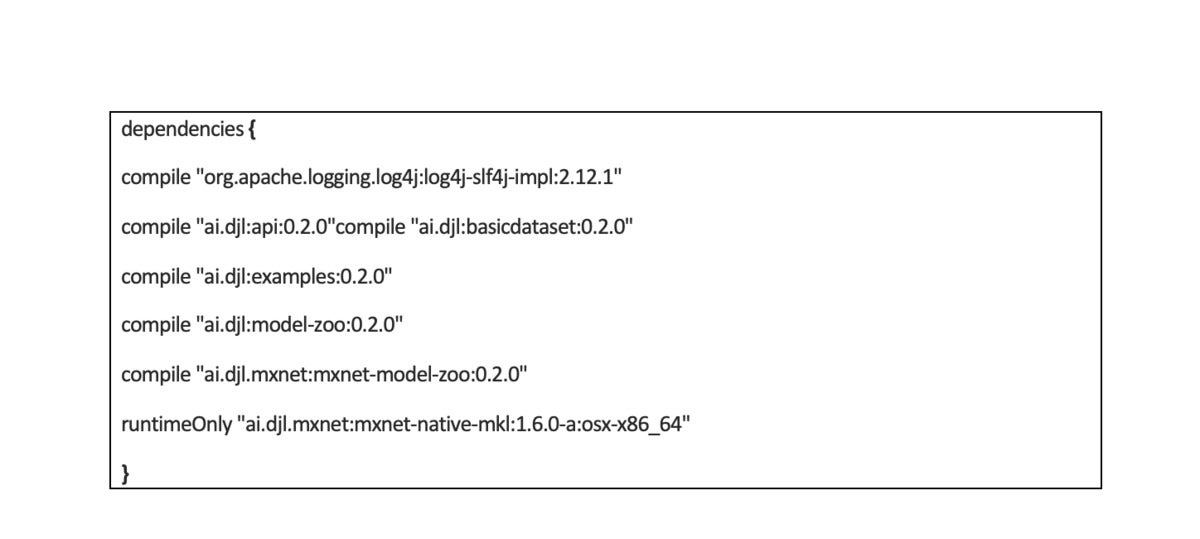

Training is the process of producing an ML model by giving a learning algorithm training data to study. The term model refers to the artifact produced during the training process; the model contains patterns found in the training data and can be used to make a prediction (or inference). Before I started the training process, I set up my local environment for development. You will need JDK 8 (or later), IntelliJ, an ML engine for training (like Apache MXNet), an environment variable pointing to your engine's path, and the build dependencies for DJL.

DJL stays true to Java's motto, "write once, run anywhere (WORA)", by being engine and deep learning framework-agnostic. Developers can write code once that runs on any engine. DJL currently provides an implementation for Apache MXNet, an ML engine that eases the development of deep neural networks. DJL APIs use JNA (Java Native Access) to call the corresponding Apache MXNet operations. From a hardware perspective, training occurred locally on my laptop using a CPU. However, for the best performance, the DJL team recommends…